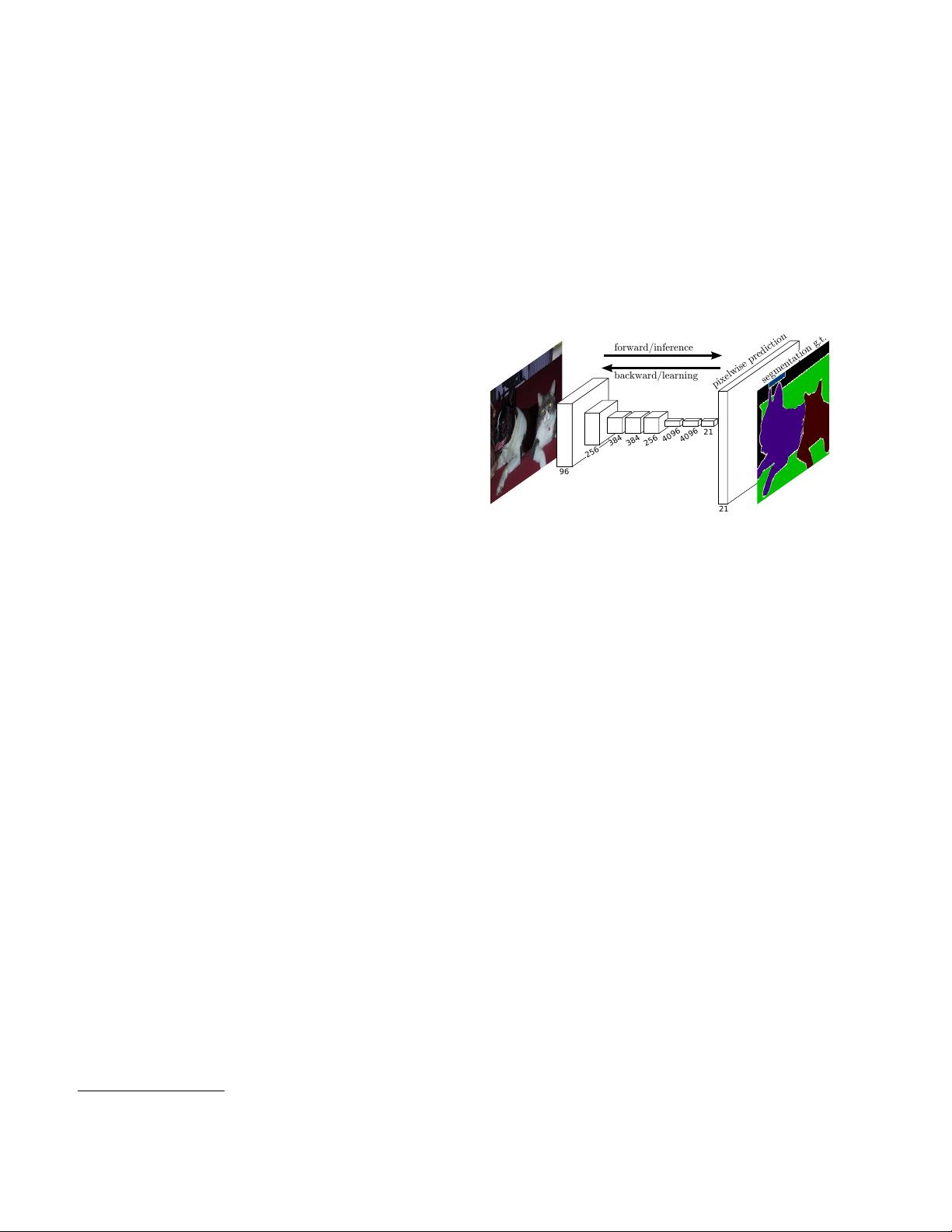

local-to-global pyramid. We define a skip architecture to

take advantage of this feature spectrum that combines deep,

coarse, semantic information and shallow, fine, appearance

information in Section 4.2 (see Figure 3).

In the next section, we review related work on deep clas-

sification nets, FCNs, and recent approaches to semantic

segmentation using convnets. The following sections ex-

plain FCN design and dense prediction tradeoffs, introduce

our architecture with in-network upsampling and multi-

layer combinations, and describe our experimental frame-

work. Finally, we demonstrate state-of-the-art results on

PASCAL VOC 2011-2, NYUDv2, and SIFT Flow.

2. Related work

Our approach draws on recent successes of deep nets

for image classification [22, 34, 35] and transfer learning

[5, 41]. Transfer was first demonstrated on various visual

recognition tasks [5, 41], then on detection, and on both

instance and semantic segmentation in hybrid proposal-

classifier models [12, 17, 15]. We now re-architect and fine-

tune classification nets to direct, dense prediction of seman-

tic segmentation. We chart the space of FCNs and situate

prior models, both historical and recent, in this framework.

Fully convolutional networks. To our knowledge, the

idea of extending a convnet to arbitrary-sized inputs first

appeared in Matan et al. [28], which extended the classic

LeNet [23] to recognize strings of digits. Because their net

was limited to one-dimensional input strings, Matan et al.

used Viterbi decoding to obtain their outputs. Wolf and Platt

[40] expand convnet outputs to 2-dimensional maps of de-

tection scores for the four corners of postal address blocks.

Both of these historical works do inference and learning

fully convolutionally for detection. Ning et al. [30] define

a convnet for coarse multiclass segmentation of C. elegans

tissues with fully convolutional inference.

Fully convolutional computation has also been exploited

in the present era of many-layered nets. Sliding window

detection by Sermanet et al. [32], semantic segmentation

by Pinheiro and Collobert [31], and image restoration by

Eigen et al. [6] do fully convolutional inference. Fully con-

volutional training is rare, but used effectively by Tompson

et al. [38] to learn an end-to-end part detector and spatial

model for pose estimation, although they do not elaborate on

or analyze this method.

Alternatively, He et al. [19] discard the non-

convolutional portion of classification nets to make a

feature extractor. They combine proposals and spatial

pyramid pooling to yield a localized, fixed-length feature

for classification. While fast and effective, this hybrid

model cannot be learned end-to-end.

Dense prediction with convnets. Several recent works

have applied convnets to dense prediction problems, includ-

ing semantic segmentation by Ning et al. [30], Farabet et al.

[9], and Pinheiro and Collobert [31]; boundary prediction

for electron microscopy by Ciresan et al. [3] and for natu-

ral images by a hybrid convnet/nearest neighbor model by

Ganin and Lempitsky [11]; and image restoration and depth

estimation by Eigen et al. [6, 7]. Common elements of these

approaches include

• small models restricting capacity and receptive fields;

• patchwise training [30, 3, 9, 31, 11];

• post-processing by superpixel projection, random field

regularization, filtering, or local classification [9, 3, 11];

• input shifting and output interlacing for dense output [32,

31, 11];

• multi-scale pyramid processing [9, 31, 11];

• saturating tanh nonlinearities [9, 6, 31]; and

• ensembles [3, 11],

whereas our method does without this machinery. However,

we do study patchwise training (Section 3.4) and “shift-and-stitch”

dense output (Section 3.2) from the perspective of FCNs. We also

discuss in-network upsampling (Section 3.3), of which the fully con-

nected prediction by Eigen et al. [7] is a special case.

Unlike these existing methods, we adapt and extend deep

classification architectures, using image classification as su-

pervised pre-training, and fine-tune fully convolutionally to

learn simply and efficiently from whole image inputs and

whole image ground truths.

Hariharan et al. [17] and Gupta et al. [15] likewise adapt

deep classification nets to semantic segmentation, but do

so in hybrid proposal-classifier models. These approaches

fine-tune an R-CNN system [12] by sampling bounding

boxes and/or region proposals for detection, semantic seg-

mentation, and instance segmentation. Neither method is

learned end-to-end. They achieve state-of-the-art segmen-

tation results on PASCAL VOC and NYUDv2 respectively,

so we directly compare our standalone, end-to-end FCN to

their semantic segmentation results in Section 5.

We fuse features across layers to define a nonlinear local-

to-global representation that we tune end-to-end. In con-

temporary work Hariharan et al. [18] also use multiple lay-

ers in their hybrid model for semantic segmentation.

3. Fully convolutional networks

Each layer of data in a convnet is a three-dimensional

array of size h × w × d, where h and w are spatial dimen-

sions, and d is the feature or channel dimension. The first

layer is the image, with pixel size h × w, and d color chan-

nels. Locations in higher layers correspond to the locations

in the image they are path-connected to, which are called

their receptive fields.
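
As an illustration of these definitions, the sketch below tracks the h × w × d shape of each layer and the side length of its receptive field in input pixels. It is a minimal sketch under stated assumptions, not part of the original method: the names `Layer` and `trace` are hypothetical, kernels are assumed square, and the three-layer toy stack is invented for the example. It uses the standard output-size rule floor((n + 2p - k) / s) + 1 and the standard receptive-field recurrence for a chain of local operations.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """Hypothetical description of one local layer (convolution or pooling)."""
    kernel: int        # spatial kernel size k (square kernels assumed)
    stride: int        # stride s
    pad: int = 0       # zero padding added on each side
    channels: int = 0  # output channels d; 0 keeps the input d (e.g. pooling)


def trace(h, w, d, layers):
    """Return (h, w, d, receptive_field) after each layer of the stack."""
    rf = 1    # receptive field side length, measured in input pixels
    jump = 1  # cumulative stride: input pixels between adjacent locations
    out = []
    for layer in layers:
        h = (h + 2 * layer.pad - layer.kernel) // layer.stride + 1
        w = (w + 2 * layer.pad - layer.kernel) // layer.stride + 1
        d = layer.channels or d
        rf += (layer.kernel - 1) * jump  # each extra tap widens the field
        jump *= layer.stride
        out.append((h, w, d, rf))
    return out


# Toy stack (an assumption for illustration): two padded 3x3 convolutions
# with 64 channels each, followed by 2x2 max pooling with stride 2.
toy = [Layer(3, 1, 1, 64), Layer(3, 1, 1, 64), Layer(2, 2)]
shapes = trace(224, 224, 3, toy)
for h, w, d, rf in shapes:
    print(f"{h} x {w} x {d}, receptive field {rf} x {rf}")
```

Each location in the final 112 × 112 × 64 layer of this toy stack is path-connected to a 6 × 6 patch of the 224 × 224 × 3 input image.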

Convnets are built on translation invariance. Their ba-

sic components (convolution, pooling, and activation func-

tions) operate on local input regions, and depend only on

relative spatial coordinates. Writing $x_{ij}$ for the data vector

at location (i, j) in a particular layer, and $y_{ij}$ for the follow-
