
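Summary: This paper proposes a deep neural network framework that performs transfer learning from cross-domain data sources to address the cold-start problem in the early stages of software development. The method combines two heterogeneous data sources, historical vulnerability data and synthetic test cases, and uses dual-channel bidirectional long short-term memory (Bi-LSTM) networks to extract vulnerability patterns. Experiments on several open-source projects show that, with few or no labels, the proposed framework significantly improves vulnerability detection performance, with particularly strong results for buffer errors and numeric errors. The paper also compares the method with static analysis tools such as Flawfinder, showing that the new method identifies unknown vulnerabilities better and improves the detection rate.

Intended audience: professionals working in information security or vulnerability analysis, especially researchers who use machine learning techniques and deep learning models.

Use cases and goals: software security and quality assurance processes that need to identify potential code defects quickly. The aim is to reduce manual feature engineering effort and increase automation, thereby improving the long-term stability and security of software projects.

Other notes: the work emphasizes the importance of learning from real-world cases and of using transfer learning to cope with the lack of sufficient samples in the initial stage. Although the system currently focuses on static detection of vulnerabilities in intra-procedural code fragments, future work will explore better support for more complex, discontinuous vulnerabilities.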


1545-5971 (c) 2019 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for more information.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/TDSC.2019.2954088, IEEE Transactions on Dependable and Secure Computing


Software Vulnerability Discovery via Learning Multi-domain Knowledge Bases

Guanjun Lin, Jun Zhang*, Senior Member, IEEE, Wei Luo, Lei Pan, Member, IEEE, Olivier De Vel, Paul Montague, and Yang Xiang, Senior Member, IEEE

Abstract—Machine learning (ML) has great potential for automated code vulnerability discovery. However, automated discovery applications driven by off-the-shelf machine learning tools often perform poorly due to the shortage of high-quality training data. The scarcity of vulnerability data is almost always a problem for a software project in its early stages of development; this is referred to as the cold-start problem. This paper proposes a framework that utilizes transferable knowledge from pre-existing data sources. To improve detection performance, multiple vulnerability-relevant data sources were selected to form a broader base for learning transferable knowledge. The selected data sources are cross-domain, including historical vulnerability data from different software projects and data from the Software Assurance Reference Dataset (SARD), which consists of synthetic vulnerability examples and proof-of-concept test cases. To extract information applicable to vulnerability detection from the cross-domain data sets, we designed a deep-learning-based framework with Long Short-Term Memory (LSTM) cells. Our framework combines the heterogeneous data sources to learn unified representations of the patterns of vulnerable source code. Empirical studies showed that the unified representations generated by the proposed deep learning networks are feasible, effective, and transferable for real-world vulnerability detection. Our experiments demonstrated that, by leveraging two heterogeneous data sources, our vulnerability detection approach outperformed the static vulnerability discovery tool Flawfinder. The findings of this paper may stimulate further research in ML-based vulnerability detection using heterogeneous data sources.

Index Terms—Vulnerability detection, Representation Learning, Deep learning.


1 INTRODUCTION

Many cybersecurity incidents and data breaches are caused by exploitable vulnerabilities in software [21, 41]. Discovering and detecting software vulnerabilities has therefore been an important research direction. Automated techniques such as rule-based analysis [9, 47], symbolic execution [4], and fuzz testing [42] have been proposed to enhance the vulnerability search. However, these techniques are inefficient when used on a large code base in practice [48]. To improve efficiency, machine learning (ML) techniques have been applied to automate the detection of software vulnerabilities and to accelerate the code inspection process.

ML algorithms are capable of learning latent patterns indicative of vulnerable/defective code, potentially outperforming rules derived from experience with a significantly improved level of generalization. Nevertheless, applying traditional ML techniques to vulnerability detection still requires human experts to define features, a process that relies heavily on human experience, level of expertise, and depth of domain knowledge [18]. With deep learning, code fragments can be used directly for learning without manual feature extraction, thus relieving experts of time-consuming and possibly error-prone feature engineering tasks. Recent studies have utilized neural networks for the automated learning of semantic features [45] and high-level representations [18, 20] that could indicate potential vulnerabilities in Java and C/C++ source code.

Jun Zhang is the corresponding author.

Guanjun Lin, Jun Zhang, and Yang Xiang are with the School of Software and Electrical Engineering, Swinburne University of Technology, Melbourne, VIC 3122, Australia (e-mail: {glin, junzhang, yxiang}@swin.edu.au).

Wei Luo and Lei Pan are with the School of Information Technology, Deakin University, Geelong, VIC 3216, Australia (e-mail: {wei.luo, l.pan}@deakin.edu.au).

Olivier De Vel and Paul Montague are with the Defence Science & Technology Group (DSTG), Department of Defence, Australia (e-mail: {Olivier.DeVel, Paul.Montague}@dst.defence.gov.au).

However, existing ML-based vulnerability/defect detection approaches, such as [18, 45, 53], assume that sufficient labeled training data are available from homogeneous sources. Unfortunately, this assumption is not always valid. There are no known publicly available software vulnerability repositories that provide real-world vulnerability data as code and label pairs [18, 20]. The relative scarcity of real-world vulnerability data exacerbates the shortcomings of existing ML-based solutions on real-world software projects, especially projects with few historical instances of detected vulnerabilities. Because manually collecting software vulnerabilities is expensive, the resulting lack of training data makes supervised ML-based vulnerability detection approaches difficult to apply. Hence, in practice, manual effort is still required for the vulnerability discovery task [44].

To enhance the automation of software vulnerability discovery, we propose a deep-learning-based framework capable of leveraging multiple heterogeneous vulnerability-relevant data sources to learn latent vulnerable programming patterns effectively and automatically. On the one hand, the automated learning of vulnerable programming patterns can relieve human experts of tedious and error-prone feature engineering tasks. On the other hand, the latent representations of vulnerable patterns learned from the combination of heterogeneous vulnerability-relevant data sources can serve as features that compensate for the shortage of labeled data. A vulnerability-relevant data source is generally one that is publicly available and includes quasi-real-world vulnerability samples, such as the vulnerability samples in the Software Assurance Reference Dataset (SARD) project [30]. The vulnerability samples that we used from the SARD project are mostly synthetic test cases presented as proof-of-concept vulnerabilities and patches from which human programmers can learn. We assume that deep learning algorithms can derive “basic patterns” from these artificially constructed vulnerabilities. The other vulnerability data source consists of a limited number of historical vulnerability instances collected from several popular open-source software projects. By combining the two cross-domain data sources, we design algorithms that jointly extract useful information from both the real-world vulnerability data and the synthetic data sets to improve vulnerability detection performance.

To utilize the heterogeneous data sources, the proposed framework consists of two deep learning networks, each trained independently on one of the data sources. Our previous work [20] found that a Long Short-Term Memory (LSTM) [13] network trained on the historical vulnerability data source can be used as a feature extractor that generates features carrying the vulnerability information learned from that source. A classifier trained on these generated features can maintain its performance even when labeled data are scarce. In this paper, we further explore the transfer representation learning capability of neural networks by learning vulnerable patterns from two vulnerability-relevant data sources. We hypothesize that the source for learning latent vulnerable code patterns should not be limited to historical vulnerability data from real-world software projects: a vulnerability-related data source containing artificial vulnerability samples (i.e., the SARD project) should also be used as a vulnerability knowledge base. By using two independent neural networks to learn vulnerability-relevant knowledge from the two data sources, respectively, we can combine the learned knowledge to compensate for the shortage of labeled data and to enhance vulnerability detection capability.

First, we train two networks on the two vulnerability-relevant data sources described above and use both trained networks as feature extractors: given a project with limited labeled data, we feed the data to each trained network to derive vulnerability knowledge representations as features. Second, we concatenate the representations produced by the two networks and train a random forest classifier on the combined representations. Finally, the trained classifier is used to detect vulnerabilities (see Fig. 2). Even for a project without any labeled data, we can still use one of the trained networks directly as the classifier for vulnerability detection (see Fig. 1). To ensure reproducibility, we have released our code and the sorted data on GitHub¹. In summary, the contributions of this paper are three-fold:

• We propose a deep learning framework that utilizes heterogeneous vulnerability-relevant data sources, based on two independent deep representation learning networks capable of extracting useful features for detecting vulnerable software code.

• We validate the design of our framework through experiments and demonstrate that using neural networks as feature extractors, with a separate classifier trained on the extracted features, improves vulnerability detection performance, with a maximum observed improvement of 60% in precision and 24% in recall.

• Our empirical studies found that the aggregated representations learned by the two independent networks lead to better detection performance than using either network alone, with a maximum observed improvement of 5% in precision and 4% in recall, which suggests that the framework could be further extended to cater to multiple data sources. The proposed framework also outperformed Flawfinder [47] and our previous work [20].

The rest of this paper is organized as follows. Section 2 provides an overview of the proposed approach and describes how the data sources were collected. Section 3 describes how high-level representations are learned from the cross-domain data sources. Section 4 presents our experiments and evaluation results, and Section 5 discusses the limitations of our approach. Section 6 reviews related studies, and Section 7 concludes the paper.

2 APPROACH OVERVIEW AND DATA COLLECTION

This section provides an overview of the proposed framework for software vulnerability detection by describing the workflow through which code feature representations are learned for vulnerability detection. We then introduce the code data sources and the data collection process.

2.1 Problem Formulation

The proposed method takes a list of functions from a program as input and outputs a list of those functions ranked by their likelihood of being vulnerable. Let $F = \{a_1, a_2, a_3, \ldots, a_n\}$ be the set of all C source code functions (both existing and to-be-developed) in the given software project. We aim to find a function-level vulnerability detector $D: F \to [0, 1]$, where “1” stands for definitely vulnerable and “0” stands for definitely non-vulnerable, such that $D(a_i)$ measures the probability that function $a_i$ contains vulnerable code. Often it suffices to treat $D(a_i)$ as a vulnerability score, so that only a small number of the highest-risk functions need to be investigated.
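The formulation only requires $D$ to return a score in $[0, 1]$; turning those scores into the ranked list that developers inspect is a small, separate step. The following Python sketch shows that step under stated assumptions: `rank_functions` and `toy_detector` are hypothetical names, and the keyword-counting scorer is purely illustrative, standing in for the trained network rather than reproducing it.

```python
from typing import Callable, Dict, List, Tuple


def rank_functions(
    functions: Dict[str, str],
    detector: Callable[[str], float],
    top_k: int,
) -> List[Tuple[str, float]]:
    """Score each function with a detector D and return the top_k highest-risk ones.

    `functions` maps a function name to its source code; `detector` is a
    hypothetical stand-in for D: F -> [0, 1].
    """
    scored: List[Tuple[str, float]] = []
    for name, source in functions.items():
        score = detector(source)
        # D is defined to output a probability-like score in [0, 1].
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score for {name!r} is outside [0, 1]: {score}")
        scored.append((name, score))
    # Highest score first; ties broken by name so the ranking is deterministic.
    scored.sort(key=lambda item: (-item[1], item[0]))
    return scored[:top_k]


def toy_detector(source: str) -> float:
    """Illustrative keyword scorer, NOT the learned detector from the paper."""
    risky_calls = ("strcpy", "sprintf", "gets")
    hits = sum(source.count(call) for call in risky_calls)
    return min(1.0, 0.4 * hits)


if __name__ == "__main__":
    project = {
        "parse_header": "void parse_header(char *d, char *s) { strcpy(d, s); }",
        "log_message": "void log_message(char *b, char *m) { sprintf(b, m); gets(b); }",
        "add": "int add(int a, int b) { return a + b; }",
    }
    for name, score in rank_functions(project, toy_detector, top_k=2):
        print(f"{name}: {score:.2f}")
```

Running the script prints `log_message: 0.80` followed by `parse_header: 0.40`. Only the detector would change in a real setting; the ranking and top-k cut-off stay the same.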

1. https://github.com/DanielLin1986/RepresentationsLearningFromMulti_domain


Results

Bi-LSTM

1

Train

A software project with

no labeled data

Trained Bi-LSTM

1

Test

Training

Test

Real-world

vulnerability

data source

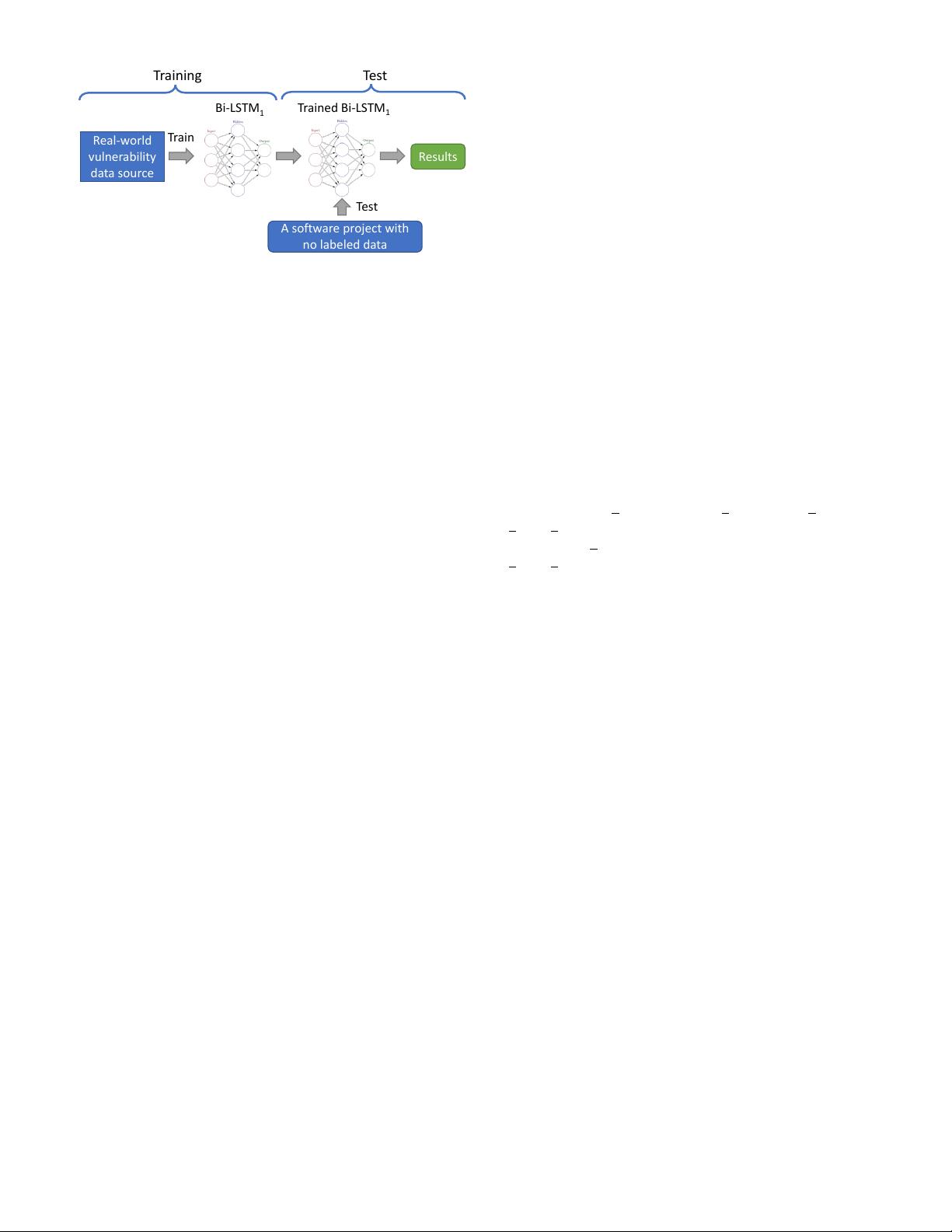

Fig. 1: Scenario 1: In this scenario, a target software project has no labeled data. We train a Bi-LSTM network using the real-world historical vulnerability data from other software projects (the source projects) and feed the target project’s code directly to the trained network for classification.

2.2 Workflow

The proposed framework handles two scenarios of the vulnerability detection process. In the first scenario (Scenario 1), we assume that the target software project has no labeled vulnerability data (see Fig. 1). Using transfer learning, our network applies knowledge learned from one task to a different but related task. In this scenario, we train a neural network on historical vulnerability data consisting of real-world vulnerabilities. We hypothesize that the vulnerable functions of the source projects contain project-independent vulnerable patterns shared across different software projects, and that the trained neural network can discover these patterns when used for vulnerability detection on a target project.

In the second scenario (Scenario 2), we assume that the target software project has some labeled data available, but not enough to train a statistically robust classifier. Hence, we exploit the representation learning capability of deep learning algorithms to learn from other vulnerability-relevant data sources, compensating for the shortage of labeled vulnerability data in the target project.

We divide Scenario 2 into three stages, as depicted in Fig. 2. In the first stage, we train two independent deep learning networks, one for each data source (see Section 2.3 for details of the data sources). We hypothesize that the two trained networks, whose parameters (weights) are learned from the respective data sources, together capture broader vulnerability “knowledge” than either source alone. That is, each trained network has learned the hidden patterns in its data source, and the patterns learned by both networks should contain more generic vulnerability-relevant information than the patterns obtained from a single network trained on a single data source. In addition, we found that an alternative solution that uses a single network to learn from both vulnerability-relevant data sources resulted in suboptimal performance.

The second stage, namely the feature representation learning stage, obtains the learned patterns, or representations, from the trained networks. It feeds the available labeled data from the target software project to the two trained deep networks to obtain two groups of representations, which are then concatenated to form an aggregated feature set. For example, we feed a sample to the network trained on one of the vulnerability-relevant data sources, and the network generates the representation vector $v_1 = [r_1, r_2, r_3]$. We then feed the same sample to the other network, trained on the other vulnerability-relevant data source, and obtain the representation vector $v_2 = [r_4, r_5, r_6]$. The combined feature vector is derived by concatenating $v_1$ and $v_2$, that is, $v_{\text{concat}} = [r_1, r_2, r_3, r_4, r_5, r_6]$.
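The concatenation step of the second stage can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the authors' code: the two trained networks are replaced by hypothetical extractor callables (`extractor_a`, `extractor_b`) that map a code sample to a fixed-length vector, and their toy count-based outputs carry no meaning beyond showing the data flow from $v_1$ and $v_2$ to $v_{\text{concat}}$ and on to a feature matrix for the downstream classifier.

```python
from typing import Callable, List, Sequence

Vector = List[float]
Extractor = Callable[[str], Vector]


def concat_representations(sample: str, extractors: Sequence[Extractor]) -> Vector:
    """Feed one sample to each extractor and concatenate the outputs in order,
    e.g. v1 = [r1, r2, r3] and v2 = [r4, r5, r6] give [r1, ..., r6]."""
    combined: Vector = []
    for extract in extractors:
        combined.extend(extract(sample))
    return combined


def build_feature_matrix(samples: Sequence[str], extractors: Sequence[Extractor]) -> List[Vector]:
    """One concatenated row per sample, ready for a tabular classifier."""
    rows = [concat_representations(s, extractors) for s in samples]
    widths = {len(row) for row in rows}
    # A random forest (or any tabular model) needs equal-width rows.
    if len(widths) > 1:
        raise ValueError(f"inconsistent feature widths: {sorted(widths)}")
    return rows


# Hypothetical stand-ins for the two trained networks (illustration only):
# each maps a code sample to a fixed-length 3-dimensional vector.
def extractor_a(sample: str) -> Vector:
    return [float(len(sample)), float(sample.count(";")), float(sample.count("("))]


def extractor_b(sample: str) -> Vector:
    return [float(sample.count("[")), float(sample.count("*")), float(sample.count("if"))]


if __name__ == "__main__":
    labeled_code = ["if (n > 0) { buf[n] = *p; }", "return a + b;"]
    features = build_feature_matrix(labeled_code, [extractor_a, extractor_b])
    for row in features:
        print(len(row), row)
```

Keeping the extractor order fixed matters: the classifier treats column positions as feature identities, so the same concatenation order must be used for the training and test sets.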

In the final stage, we use the remaining data from the target project as the test set and feed them to each trained network to obtain their representations. The representations of the labeled data are used to train a random forest classifier, and the representations of the test set are fed to the trained classifier to obtain the detection results.

To address the imbalance between vulnerable and non-vulnerable code samples in real-world projects, we apply cost-sensitive learning by incorporating different weights for the vulnerable and non-vulnerable classes into the objective functions (a.k.a. loss functions) of the classifiers used in our experiments. In this paper, we calculate the weight of each class as $\text{class\_weight} = \text{total\_samples} / (\text{n\_classes} \times \text{one\_class\_samples})$, where n_classes is the number of classes and one_class_samples is the number of samples in that class. This equation is based on a heuristic proposed by King and Zeng [17]. Because vulnerable samples are the minority, the vulnerable class receives a larger weight, so misclassifying a vulnerable sample is penalized more heavily than misclassifying a non-vulnerable one. This setup helps classifiers cope with the data imbalance during the training phase.
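As a worked example of the weighting heuristic, the following Python sketch computes the class weights for the FFmpeg counts in Table 1 (213 vulnerable and 5,701 non-vulnerable functions), treating the whole project as one training set purely for illustration. It then applies the weights inside a weighted binary cross-entropy, one common way of incorporating class weights into a loss; the paper does not state which loss each classifier uses, so that part is an assumption. For reference, scikit-learn's `class_weight="balanced"` option uses the same n_samples / (n_classes × class_count) formula.

```python
import math
from collections import Counter
from typing import Dict, Sequence


def class_weights(labels: Sequence[int]) -> Dict[int, float]:
    """class_weight = total_samples / (n_classes * one_class_samples)."""
    counts = Counter(labels)
    total = len(labels)
    n_classes = len(counts)
    return {label: total / (n_classes * count) for label, count in counts.items()}


def weighted_bce(y_true: Sequence[int], y_prob: Sequence[float], weights: Dict[int, float]) -> float:
    """Mean binary cross-entropy with each sample scaled by its class weight."""
    eps = 1e-12  # keep log() away from 0
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)
        loss = -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
        total += weights[y] * loss
    return total / len(y_true)


if __name__ == "__main__":
    # FFmpeg counts from Table 1: label 1 = vulnerable, 0 = non-vulnerable.
    labels = [1] * 213 + [0] * 5701
    w = class_weights(labels)
    print(f"vulnerable: {w[1]:.3f}, non-vulnerable: {w[0]:.3f}")
    # Two equally wrong predictions, one per class: the vulnerable-class
    # error is weighted about 27 times more heavily (5701 / 213).
    print(weighted_bce([1, 0], [0.3, 0.7], w))
```

With these counts the vulnerable class receives a weight of about 13.88 and the non-vulnerable class about 0.52; their ratio equals the inverse ratio of the class sizes.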

2.3 Data Collection

To mitigate the shortage of labeled real-world vulnerability data, we use synthetic vulnerability samples from the SARD project together with real-world vulnerability data sets in our experiments.

2.3.1 The synthetic vulnerability sources from the SARD project

The synthetic vulnerability samples collected from the SARD project are mainly artificially constructed test cases that simulate known vulnerable source code settings and/or provide proof-of-concept code demonstrations. In this paper, we used only the C/C++ test cases for our experiments. We developed a crawler to download all of the relevant files. Each downloaded sample is a source code file containing at least one function. According to the SARD naming convention for test cases, vulnerable functions have names containing phrases such as “bad” or “badSink”, and non-vulnerable functions have names containing words such as “good” or “goodSink”. We therefore extracted the functions from the source code files and labeled them as either vulnerable or non-vulnerable according to this naming convention. We hypothesize that the synthetic vulnerable samples contain proof-of-concept code describing “basic vulnerability patterns”, and that these patterns are discoverable by deep learning algorithms, specifically by the bidirectional LSTM.

Fig. 2: Scenario 2: In this scenario, some labeled data are available for the target software project. This scenario consists of three stages: the first stage trains two deep learning networks using two data sources; in the second stage, we feed each trained network with the labeled data to obtain two groups of feature representations, and then combine both groups of feature representations to train a random forest classifier; in the third stage, we obtain feature representations by feeding each trained network with the test set (i.e., the unlabeled data) from the target project, and finally use the resulting representations as inputs to the trained random forest classifier to obtain the classification result.
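The naming-convention labeling of the SARD functions can be sketched as a small Python routine. It assumes that function names have already been extracted from each test-case file (the crawler and extraction tooling are not shown), and the regular expressions below are an assumed reading of the “bad”/“good” convention, since the exact matching rules used in the paper are not given. Names that match neither marker, or both, are left unlabeled rather than guessed; the example names are illustrative.

```python
import re
from typing import Dict, Iterable, Optional

# Assumed matching rules: a "bad"/"good" marker at the start of the name or
# right after an underscore, e.g. "CWE121_example_01_bad", "badSink",
# "goodG2B", "CWE121_example_52b_goodG2BSink" (names are illustrative).
_BAD = re.compile(r"(?:^|_)bad")
_GOOD = re.compile(r"(?:^|_)good")


def sard_label(function_name: str) -> Optional[int]:
    """Return 1 (vulnerable), 0 (non-vulnerable), or None if no label applies."""
    is_bad = _BAD.search(function_name) is not None
    is_good = _GOOD.search(function_name) is not None
    if is_bad and not is_good:
        return 1
    if is_good and not is_bad:
        return 0
    # Helpers such as main(), or names carrying both markers, stay unlabeled.
    return None


def label_functions(names: Iterable[str]) -> Dict[str, int]:
    """Keep only the functions whose label follows from the naming convention."""
    labels: Dict[str, int] = {}
    for name in names:
        label = sard_label(name)
        if label is not None:
            labels[name] = label
    return labels


if __name__ == "__main__":
    extracted = ["CWE121_example_01_bad", "goodG2B", "goodB2G", "printLine"]
    print(label_functions(extracted))
```

Dropping unlabeled helpers keeps utility code (for example, printing routines shared by many test cases) from being counted as non-vulnerable samples.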

2.3.2 The real-world vulnerability data

We chose the real-world vulnerability data source collected by Lin et al. [20] because its granularity is set at the function level. In this paper, we augmented the data source with vulnerabilities disclosed up to April 1, 2018. The data source contains vulnerable and non-vulnerable functions from six open-source projects: FFmpeg, LibTIFF, LibPNG, Pidgin, VLC media player, and Asterisk. The vulnerability labels were obtained from the National Vulnerability Database (NVD) [29] and the Common Vulnerabilities and Exposures (CVE) [26] websites. These function-level vulnerability data allow us to build classifiers for function-level vulnerability detection, providing finer-grained detection than can be achieved at the file or component level. Before matching the labels with the source code, we downloaded the corresponding versions of each project’s source code from GitHub. Each vulnerable function in a software project was then manually located and labeled according to the information provided by the NVD and CVE websites. Lin et al. [20] discarded vulnerabilities that spanned multiple functions or multiple files (e.g., inter-procedural vulnerabilities). Excluding the identified vulnerable functions and the discarded vulnerabilities, they treated the remaining functions as non-vulnerable (see Table 1). Using a function extraction tool, they were able to extract approximately 90% of the non-vulnerable functions. We hypothesize that the vulnerable functions in real-world software projects written in the same programming language share generic, project-agnostic vulnerability patterns that are discoverable by a Bi-LSTM network.
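The labeling rule for the real-world projects amounts to simple set arithmetic: vulnerable functions come from NVD/CVE records, functions tied to discarded multi-function or multi-file vulnerabilities are removed, and everything else is treated as non-vulnerable. The Python sketch below assumes these three sets are already available; `label_project` and the function names in the usage example are hypothetical, and the choice to drop a function that appears in both the vulnerable and discarded sets is an assumption the paper does not address.

```python
from typing import Dict, Set


def label_project(all_functions: Set[str], vulnerable: Set[str], discarded: Set[str]) -> Dict[str, int]:
    """Label one project's functions: 1 = vulnerable, 0 = non-vulnerable.

    `vulnerable` holds functions located via NVD/CVE records; `discarded` holds
    functions involved in vulnerabilities spanning multiple functions or files.
    Discarded functions are dropped entirely (an assumption if the two sets
    overlap); every other function not known to be vulnerable is labeled 0.
    """
    missing = vulnerable - all_functions
    if missing:
        raise ValueError(f"vulnerable functions not found in project: {sorted(missing)}")
    return {name: int(name in vulnerable) for name in all_functions - discarded}


if __name__ == "__main__":
    functions = {"decode_frame", "read_header", "free_buffer", "copy_tile"}
    labels = label_project(functions, vulnerable={"decode_frame"}, discarded={"copy_tile"})
    for name in sorted(labels):
        print(name, labels[name])
```

The consistency check guards against a common failure mode: a vulnerable function recorded in NVD/CVE that the extraction step failed to find, which would otherwise silently vanish from the data set.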

3 UNIFIED REPRESENTATION LEARNING

Although both data sources contain source code functions, the samples from the two sources vary in type and complexity. This section describes how deep learning networks handle data sources of different types (e.g., synthetic and real-world vulnerability data) and different processing methods (e.g., ASTs and source code) to learn unified high-level representations of the vulnerabilities of interest. To process the heterogeneous data sources, we feed them to different networks and use the output of one of the hidden layers as the learned high-level representation.

TABLE 1: The data sources used in the experiments.

| Data source | Dataset/collection | # vulnerable functions used/collected | # non-vulnerable functions used/collected |
|---|---|---|---|
| Synthetic samples/test cases from the SARD project | C source code samples | 83,710 | 52,290 |
| Real-world open-source projects | FFmpeg | 213 | 5,701 |
| | LibTIFF | 96 | 731 |
| | LibPNG | 43 | 577 |
| | Pidgin | 29 | 8,050 |
| | VLC media player | 42 | 3,636 |
| | Asterisk | 56 | 14,648 |
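Taking the output of a hidden layer as the learned representation works the same way regardless of network type. The toy Python sketch below uses hand-set dense layers in place of the trained Bi-LSTM networks, an assumption made only to keep the example self-contained: running an input through every layer yields a vulnerability probability (the Scenario 1 use of a trained network as a classifier), while stopping at an intermediate layer yields a feature vector of the kind that is concatenated and passed to the random forest in Scenario 2. `Dense`, `layer_output`, the layer sizes, and the weights are all hypothetical placeholders.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


class Dense:
    """Fully connected layer y = act(W x + b); a toy stand-in for a trained layer."""

    def __init__(self, weights: Matrix, bias: Sequence[float], activation: str = "tanh"):
        self.weights = weights
        self.bias = list(bias)
        self.activation = activation

    def __call__(self, x: Sequence[float]) -> List[float]:
        z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(self.weights, self.bias)]
        if self.activation == "tanh":
            return [math.tanh(v) for v in z]
        if self.activation == "sigmoid":
            return [1.0 / (1.0 + math.exp(-v)) for v in z]
        if self.activation == "identity":
            return z
        raise ValueError(f"unknown activation: {self.activation}")


def layer_output(layers: Sequence[Dense], x: Sequence[float], layer_index: int) -> List[float]:
    """Run x through layers[0..layer_index] and return that layer's output."""
    out = list(x)
    for layer in layers[: layer_index + 1]:
        out = layer(out)
    return out


if __name__ == "__main__":
    # Arbitrary placeholder weights: 3-d input -> 2-d hidden -> 1-d output.
    net = [
        Dense([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1], "tanh"),
        Dense([[1.2, -0.7]], [0.05], "sigmoid"),
    ]
    x = [1.0, 0.0, 2.0]  # placeholder encoding of a code sample
    print("hidden representation:", layer_output(net, x, 0))  # features (Scenario 2)
    print("vulnerability probability:", layer_output(net, x, 1))  # classifier (Scenario 1)
```

In a deep learning framework the same idea is usually expressed by building a sub-model whose output is the chosen hidden layer, which avoids re-implementing the forward pass.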

3.1 Raw Representations

Before being fed to the respective networks, the data need to be converted into a format compatible with deep neural networks. We refer to the data at this stage as “raw representations”. The data samples from the SARD project and the real-world vulnerability data sets are source code samples written in C/C++. Many previous studies, such as [49] and [36], have applied text mining and natural language processing (NLP) techniques to source code-level defect and vulnerability detection. The underpinning assumption is that source code is logical, structured, and semantically meaningful: code can be treated as a “special” language that is understood by machines (compilers) and used by developers to communicate, much like a natural language. Allamanis et al. [2] formalized this assumption as the “naturalness hypothesis”. In this paper, we agree that there is a strong resemblance between source code files/functions and the “paragraphs”/“sentences” found in
