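Summary: This paper introduces Text-IF, an image fusion method guided by semantic text. It addresses degradations in low-quality source images and supports interactive image fusion. The proposed framework integrates an image fusion pipeline with a text interaction guidance architecture; through cross-modal feature fusion and a semantic guidance module, it produces accurate, customizable fusion results and improves on the performance and flexibility of existing fusion and restoration techniques. Experiments show that Text-IF has a clear advantage when handling various image degradations (such as insufficient lighting and noise) and can produce high-quality fused images that match user requirements.

Intended audience: R&D engineers with a basic understanding of digital image processing and deep learning, and professionals and researchers in image processing.

Use cases and goals: Addressing quality problems in infrared and visible image fusion, particularly with low-quality source images, by providing a more flexible, adaptive, and user-friendly solution. It applies to practical scenarios such as security surveillance and medical imaging, and meets different users' needs for specific fusion results.

Additional notes: The authors also demonstrate the method's potential value for high-level vision tasks such as object detection.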

Text-IF: Leveraging Semantic Text Guidance for Degradation-Aware and Interactive Image Fusion

Xunpeng Yi, Han Xu, Hao Zhang, Linfeng Tang, Jiayi Ma*

Electronic Information School, Wuhan University, Wuhan 430072, China

{yixunpeng, xu han}@whu.edu.cn, {zhpersonalbox, linfeng0419, jyma2010}@gmail.com

Abstract

Image fusion aims to combine information from different source images to create a comprehensively representative image. Existing fusion methods typically cannot deal with degradations in low-quality source images and do not interact with users' multiple subjective and objective needs. To solve these problems, we introduce a novel approach, termed Text-IF, that uses semantic text guidance for degradation-aware and interactive image fusion. It extends classical image fusion to text-guided image fusion, with the ability to harmoniously address the degradation and interaction issues during fusion. Through the text semantic encoder and the semantic interaction fusion decoder, Text-IF provides all-in-one degradation-aware processing of infrared and visible images and flexible, interactive fusion outcomes. In this way, Text-IF achieves not only multi-modal image fusion, but also multi-modal information fusion. Extensive experiments show that our proposed text-guided image fusion strategy has clear advantages over SOTA methods in image fusion performance and degradation treatment. The code is available at https://github.com/XunpengYi/Text-IF.

1. Introduction

Image fusion is a prominent field within the domain of digital image processing [15, 27, 35]. Single-modal images can capture only a partial representation of the scene, whereas multi-modal images allow for the effective acquisition of a more comprehensive representation. As an important representative, visible images provide reflectance-based visual information, akin to human vision. Infrared images provide thermal radiation-based information, which is more valuable for detecting thermal targets and observing nighttime activities. Infrared and visible image fusion focuses on fusing the complementary information of infrared and visible images, yielding high-quality fusion images [18–20, 28, 38, 39, 43].

* Corresponding author

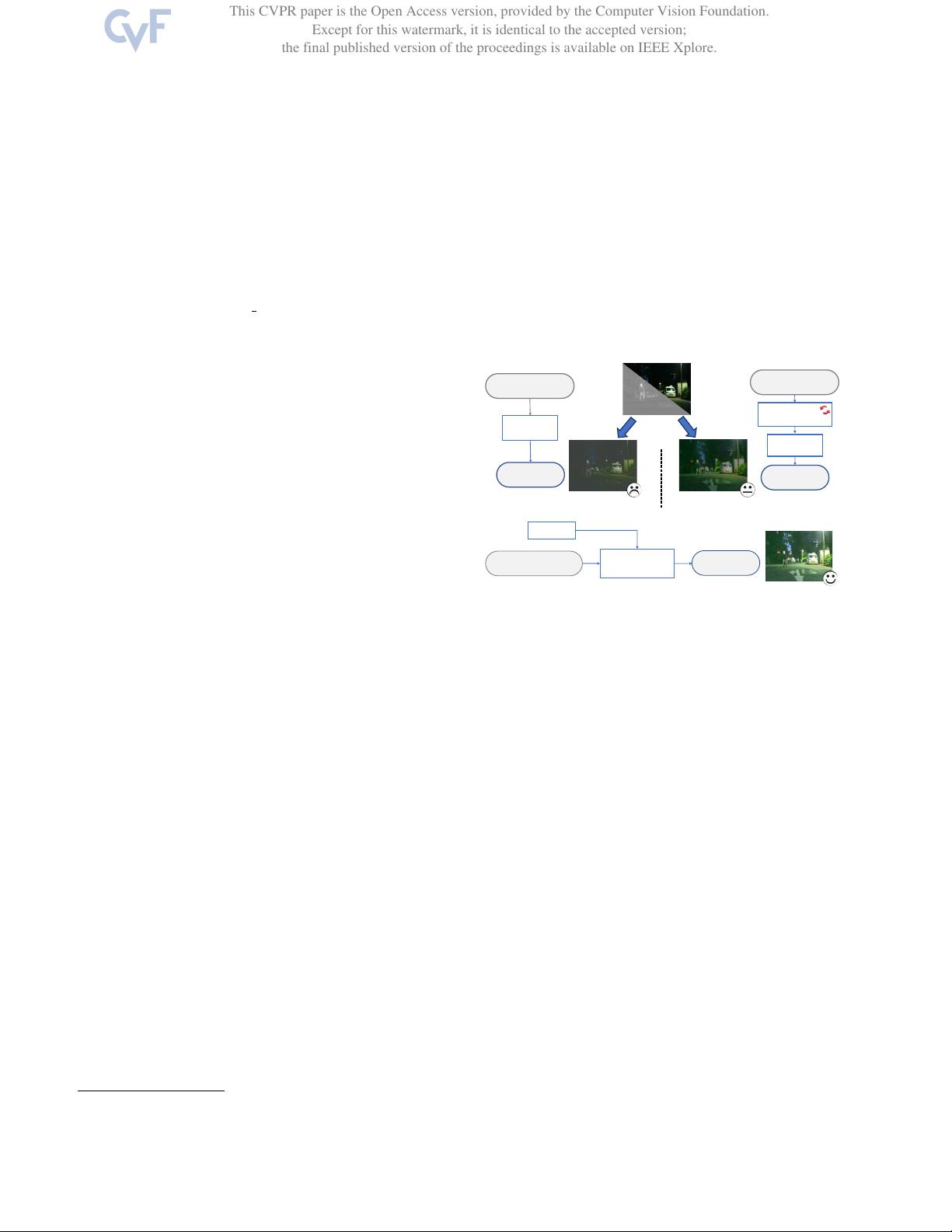

Figure 1. Fusion approaches for complex scenes with degradations. (a) Simple fusion approach: treats image fusion with a predefined fusion loss and is not applicable to complex scenes with degradations. (b) Separated approach: requires frequently switching restoration methods according to the type of degradation, which is troublesome and does not perform well. (c) Proposed text-guided image fusion approach: achieves interactive, high-quality fusion images without tedious replacement of models.

Limited by environmental conditions, the originally acquired infrared and visible images may suffer from degradations, which lowers the quality of the fused image. Visible images are susceptible to degradations such as low light and overexposure. Infrared images are inevitably affected by noise (including thermal, electronic, and environmental noise), diminished contrast, and other associated effects. Current fusion methods lack the capability to handle these degradations adaptively, leading to low-quality fusion images. Furthermore, relying on manual pre-processing to enhance the images is inflexible and inefficient [29]. Therefore, it is of practical interest to study a model that harmonises degradation-aware processing and interactive fusion.
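To make the degradation problem concrete, the following is a minimal sketch in plain Python, not the Text-IF method. It simulates the degradations named above (low light for the visible image, noise and reduced contrast for the infrared image) on a tiny synthetic image pair and shows how a degradation-unaware fusion rule carries them into the result. All function names, parameter values, and the pixel-averaging rule are assumptions chosen for illustration; the averaging rule merely stands in for a fusion network with no degradation handling.

```python
import random
from typing import List

Image = List[List[float]]  # grayscale image, pixel values in [0, 1]


def clip(value: float) -> float:
    """Keep a pixel value inside the valid [0, 1] range."""
    return min(1.0, max(0.0, value))


def mean_intensity(img: Image) -> float:
    pixels = [p for row in img for p in row]
    return sum(pixels) / len(pixels)


def value_range(img: Image) -> float:
    """Crude contrast measure: brightest pixel minus darkest pixel."""
    pixels = [p for row in img for p in row]
    return max(pixels) - min(pixels)


def low_light(img: Image, gain: float = 0.3) -> Image:
    """Simulate a low-light visible image by scaling intensities down."""
    return [[clip(p * gain) for p in row] for row in img]


def reduce_contrast(img: Image, factor: float = 0.5) -> Image:
    """Pull every pixel toward the image mean (factor < 1 lowers contrast)."""
    mean = mean_intensity(img)
    return [[clip(mean + factor * (p - mean)) for p in row] for row in img]


def add_gaussian_noise(img: Image, sigma: float = 0.02, seed: int = 0) -> Image:
    """Add zero-mean Gaussian noise; a seeded generator keeps runs repeatable."""
    rng = random.Random(seed)
    return [[clip(p + rng.gauss(0.0, sigma)) for p in row] for row in img]


def average_fusion(ir: Image, vis: Image) -> Image:
    """Hypothetical stand-in for a fusion model: plain pixel-wise averaging."""
    return [[0.5 * (a + b) for a, b in zip(r1, r2)] for r1, r2 in zip(ir, vis)]


# Synthetic 4x4 scene: a brightness ramp (visible) and a hot 2x2 target (infrared).
vis = [[(r * 4 + c) / 15 for c in range(4)] for r in range(4)]
ir = [[0.9 if r in (1, 2) and c in (1, 2) else 0.2 for c in range(4)]
      for r in range(4)]

vis_degraded = low_light(vis)
ir_degraded = add_gaussian_noise(reduce_contrast(ir))

fused_clean = average_fusion(ir, vis)
fused_degraded = average_fusion(ir_degraded, vis_degraded)

print("mean brightness, clean inputs:   ", round(mean_intensity(fused_clean), 3))
print("mean brightness, degraded inputs:", round(mean_intensity(fused_degraded), 3))
```

Because the averaging rule has no notion of degradation, the darker visible input and the flattened infrared target pass straight into the fused image, which is the behaviour the paragraph above attributes to degradation-unaware fusion methods.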

Designing a model tailored to an individual degradation to achieve image enhancement and fusion is feasible. However, most image fusion tasks need to be carried out in various complex conditions around the clock. As shown in

This CVPR paper is the Open Access version, provided by the Computer Vision Foundation. Except for this watermark, it is identical to the accepted version; the final published version of the proceedings is available on IEEE Xplore.
