1 Fundamentals of Computer Design

And now for something completely different.

Monty Python’s Flying Circus

1.1 Introduction
1.2 The Task of a Computer Designer
1.3 Technology Trends
1.4 Cost, Price and their Trends
1.5 Measuring and Reporting Performance
1.6 Quantitative Principles of Computer Design
1.7 Putting It All Together: Performance and Price-Performance
1.8 Another View: Power Consumption and Efficiency as the Metric
1.9 Fallacies and Pitfalls
1.10 Concluding Remarks
1.11 Historical Perspective and References
Exercises

Computer technology has made incredible progress in the roughly 55 years since

the first general-purpose electronic computer was created. Today, less than a

thousand dollars will purchase a personal computer that has more performance,

more main memory, and more disk storage than a computer bought in 1980 for

$1 million. This rapid rate of improvement has come both from advances in the

technology used to build computers and from innovation in computer design.

Although technological improvements have been fairly steady, progress arising from better computer architectures has been much less consistent. During the first 25 years of electronic computers, both forces made a major contribution; but beginning in about 1970, computer designers became largely dependent upon integrated circuit technology. During the 1970s, performance continued to improve at about 25% to 30% per year for the mainframes and minicomputers that dominated the industry.

The late 1970s saw the emergence of the microprocessor. The ability of the

microprocessor to ride the improvements in integrated circuit technology more

closely than the less integrated mainframes and minicomputers led to a higher

rate of improvement—roughly 35% growth per year in performance.

1.1 Introduction

This growth rate, combined with the cost advantages of a mass-produced microprocessor, led to an increasing fraction of the computer business being based on microprocessors. In addition, two significant changes in the computer marketplace made it easier than ever before to be commercially successful with a new architecture. First, the virtual elimination of assembly language programming reduced the need for object-code compatibility. Second, the creation of standardized, vendor-independent operating systems, such as UNIX and its clone, Linux, lowered the cost and risk of bringing out a new architecture.

These changes made it possible to successfully develop a new set of architec-

tures, called RISC (Reduced Instruction Set Computer) architectures, in the early

1980s. The RISC-based machines focused the attention of designers on two criti-

cal performance techniques, the exploitation of instruction-level parallelism (ini-

tially through pipelining and later through multiple instruction issue) and the use

of caches (initially in simple forms and later using more sophisticated organiza-

tions and optimizations). The combination of architectural and organizational en-

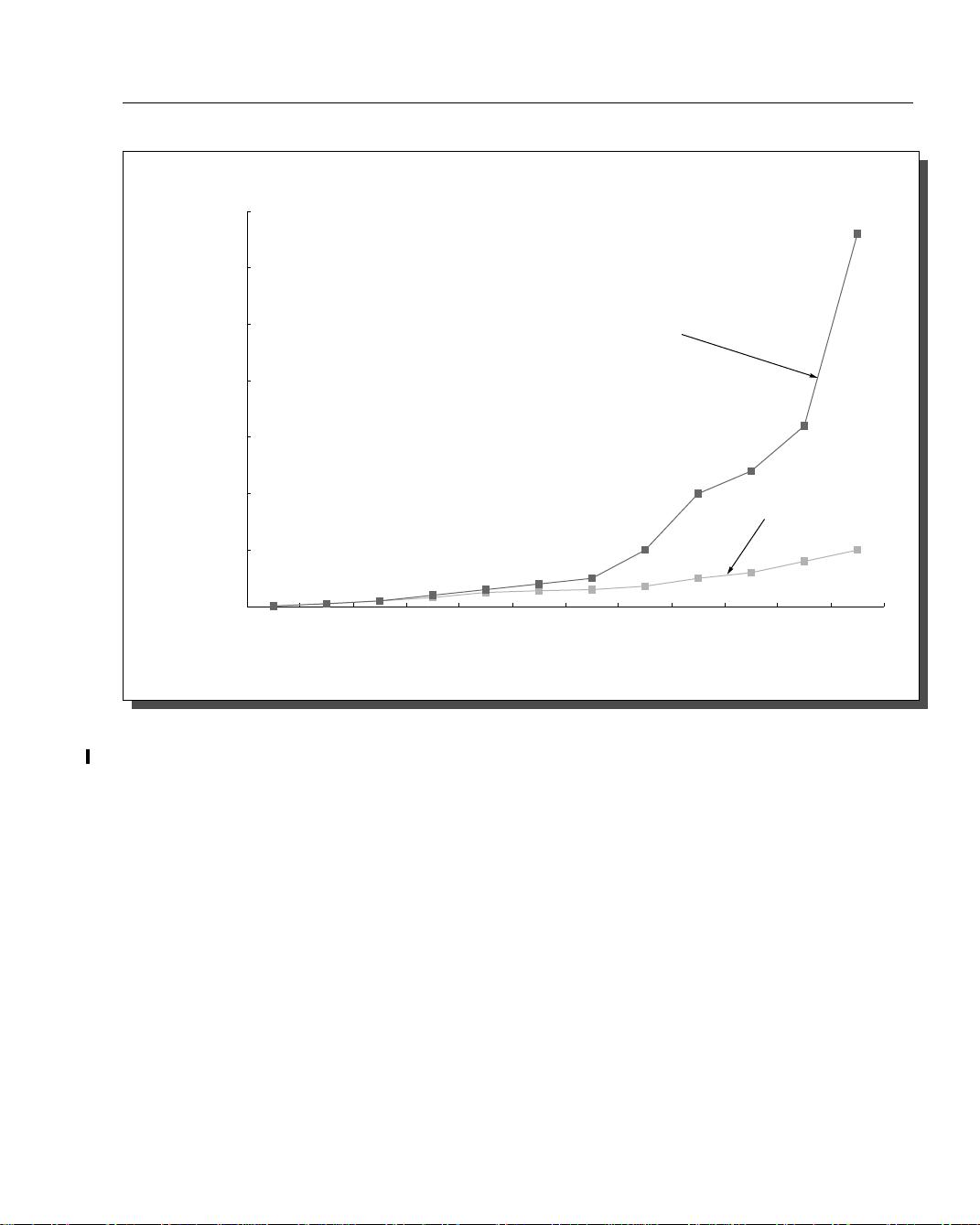

hancements has led to 20 years of sustained growth in performance at an annual

rate of over 50%. Figure 1.1 shows the effect of this difference in performance

growth rates.

The effect of this dramatic growth rate has been twofold. First, it has significantly enhanced the capability available to computer users. For many applications, the highest-performance microprocessors of today outperform the supercomputer of less than 10 years ago.

Second, this dramatic rate of improvement has led to the dominance of microprocessor-based computers across the entire range of computer design. Workstations and PCs have emerged as major products in the computer industry. Minicomputers, which were traditionally made from off-the-shelf logic or from gate arrays, have been replaced by servers made using microprocessors. Mainframes have been almost completely replaced with multiprocessors consisting of small numbers of off-the-shelf microprocessors. Even high-end supercomputers are being built with collections of microprocessors.

Freedom from compatibility with old designs and the use of microprocessor technology led to a renaissance in computer design, which emphasized both architectural innovation and efficient use of technology improvements. This renaissance is responsible for the higher performance growth shown in Figure 1.1, a rate that is unprecedented in the computer industry. This rate of growth has compounded so that by 2001, the difference between the highest-performance microprocessors and what would have been obtained by relying solely on technology, including improved circuit design, is about a factor of fifteen.
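The factor of fifteen can be roughly checked by compounding the two growth rates. The sketch below is only an illustration under stated assumptions: it takes the two trend-line rates labeled in Figure 1.1 (1.58x and 1.35x per year) and assumes both lines start from a common point in 1984, the first year on the figure's axis. The starting year and the function name are assumptions made for this example, not values given in the text.

```python
def compound_growth(rate_per_year: float, years: int) -> float:
    """Relative performance after `years` of compounding at `rate_per_year`.

    A rate of 1.35 means 35% improvement per year.
    """
    return rate_per_year ** years


# Trend-line rates as labeled in Figure 1.1.
TECHNOLOGY_ONLY = 1.35      # growth from technology alone
WITH_ARCHITECTURE = 1.58    # growth with architectural innovation added

# Assumption: both curves share a starting point in 1984 (first year plotted).
START_YEAR = 1984
END_YEAR = 2001

years = END_YEAR - START_YEAR
gap = (compound_growth(WITH_ARCHITECTURE, years)
       / compound_growth(TECHNOLOGY_ONLY, years))

print(f"Performance gap after {years} years: about {gap:.1f}x")
```

Under these assumptions the ratio comes out between 14 and 15, consistent with the "about a factor of fifteen" quoted above. A later starting year gives a smaller gap, so the result is sensitive to where the two lines are taken to diverge.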

In the last few years, the tremendous improvement in integrated circuit capability has allowed older, less-streamlined architectures, such as the x86 (or IA-32) architecture, to adopt many of the innovations first pioneered in the RISC designs. As we will see, modern x86 processors basically consist of a front end that fetches and decodes x86 instructions and maps them into simple ALU, memory access, or branch operations that can be executed on a RISC-style pipelined processor. Beginning at the end of the 1990s, as transistor counts soared, the overhead in transistors of interpreting the more complex x86 architecture became negligible as a percentage of the total transistor count of a modern microprocessor.

FIGURE 1.1 Growth in microprocessor performance since the mid 1980s has been substantially higher than in earlier years, as shown by plotting SPECint performance. This chart plots relative performance as measured by the SPECint benchmarks, with a base of one being a VAX 11/780. (Since SPEC has changed over the years, performance of newer machines is estimated by a scaling factor that relates the performance for two different versions of SPEC, e.g., SPEC92 and SPEC95.) Prior to the mid 1980s, microprocessor performance growth was largely technology driven and averaged about 35% per year. The increase in growth since then is attributable to more advanced architectural and organizational ideas. By 2001 this growth leads to about a factor of 15 difference in performance. Performance for floating-point-oriented calculations has increased even faster.

[Figure 1.1 plot: SPECint rating (y-axis, 0 to 350) versus year (x-axis, 1984 to 1995), with trend lines labeled 1.58x per year and 1.35x per year. Labeled machines: SUN4, MIPS R2000, MIPS R3000, IBM Power1, HP 9000, IBM Power2, DEC Alpha.]

Change this figure as follows:

1. The y-axis should be labeled “Relative Performance.”

2. Plot only even years.

3. The following data points should be changed/added:

a. 1992 136 HP 9000; 1994 145 DEC Alpha; 1996 507 DEC Alpha; 1998 879 HP 9000; 2000 1582 Intel Pentium III

4. Extend the lower line by increasing by 1.35x each year.

This text is about the architectural ideas and accompanying compiler improvements that have made this incredible growth rate possible. At the center of this dramatic revolution has been the development of a quantitative approach to computer design and analysis that uses empirical observations of programs, experimentation, and simulation as its tools. It is this style and approach to computer design that is reflected in this text.

Sustaining the recent improvements in cost and performance will require continuing innovations in computer design, and the authors believe such innovations will be founded on this quantitative approach to computer design. Hence, this book has been written not only to document this design style, but also to stimulate you to contribute to this progress.

In the 1960s, the dominant form of computing was on large mainframes, machines costing millions of dollars and stored in computer rooms with multiple operators overseeing their support. Typical applications included business data processing and large-scale scientific computing. The 1970s saw the birth of the minicomputer, a smaller-sized machine initially focused on applications in scientific laboratories, but rapidly branching out as the technology of timesharing (multiple users sharing a computer interactively through independent terminals) became widespread. The 1980s saw the rise of the desktop computer based on microprocessors, in the form of both personal computers and workstations. The individually owned desktop computer replaced timesharing and led to the rise of servers, computers that provided larger-scale services such as reliable, long-term file storage and access, larger memory, and more computing power. The 1990s saw the emergence of the Internet and the World Wide Web, the first successful handheld computing devices (personal digital assistants or PDAs), and the emergence of high-performance digital consumer electronics, varying from video games to set-top boxes.

These changes have set the stage for a dramatic change in how we view computing, computing applications, and the computer markets at the beginning of the millennium. Not since the creation of the personal computer more than twenty years ago have we seen such dramatic changes in the way computers appear and in how they are used. These changes in computer use have led to three different computing markets, each characterized by different applications, requirements, and computing technologies.

1.2 The Changing Face of Computing and the Task of the Computer Designer