Preprint

DEAN: DEACTIVATING THE COUPLED NEURONS TO MITIGATE FAIRNESS-PRIVACY CONFLICTS IN LARGE LANGUAGE MODELS

Chen Qian¹,²⋆, Dongrui Liu²⋆, Jie Zhang³,², Yong Liu¹†, Jing Shao²†

¹ Gaoling School of Artificial Intelligence, Renmin University of China, Beijing, China

² Shanghai Artificial Intelligence Laboratory, Shanghai, China

³ University of Chinese Academy of Sciences, Beijing, China

qianchen2022@ruc.edu.cn, liudongrui@pjlab.org.cn, zhangjie@iie.ac.cn, liuyonggsai@ruc.edu.cn, shaojing@pjlab.org.cn

ABSTRACT

Ensuring awareness of fairness and privacy in Large Language Models (LLMs) is critical. Interestingly, we discover a counter-intuitive trade-off phenomenon: enhancing an LLM’s privacy awareness through Supervised Fine-Tuning (SFT) methods with thousands of samples significantly decreases its fairness awareness. To address this issue, inspired by information theory, we introduce a training-free method to DEActivate the fairness and privacy coupled Neurons (DEAN), which theoretically and empirically decreases the mutual information between fairness and privacy awareness. Extensive experimental results demonstrate that DEAN eliminates the trade-off phenomenon and significantly improves LLMs’ fairness and privacy awareness simultaneously, e.g., improving Qwen-2-7B-Instruct’s fairness awareness by 12.2% and privacy awareness by 14.0%. More crucially, DEAN remains robust and effective with limited annotated data or even when only malicious fine-tuning data is available, whereas SFT methods may fail to perform properly in such scenarios. We hope this study provides valuable insights into concurrently addressing fairness and privacy concerns in LLMs and can be integrated into comprehensive frameworks to develop more ethical and responsible AI systems. Our code is available at https://github.com/ChnQ/DEAN.

1 INTRODUCTION

In recent years, as LLMs increasingly permeate sensitive areas such as healthcare, finance, and

education (Li et al., 2023b; Yuan et al., 2023; Al-Smadi, 2023), concerns regarding their fairness

and privacy implications have become critically important (Liu et al., 2023; Sun et al., 2024a). For

instance, when queried for sensitive information such as a social security number, we would expect

the LLM to refuse to provide such information. Similarly, a desirable LLM should avoid producing

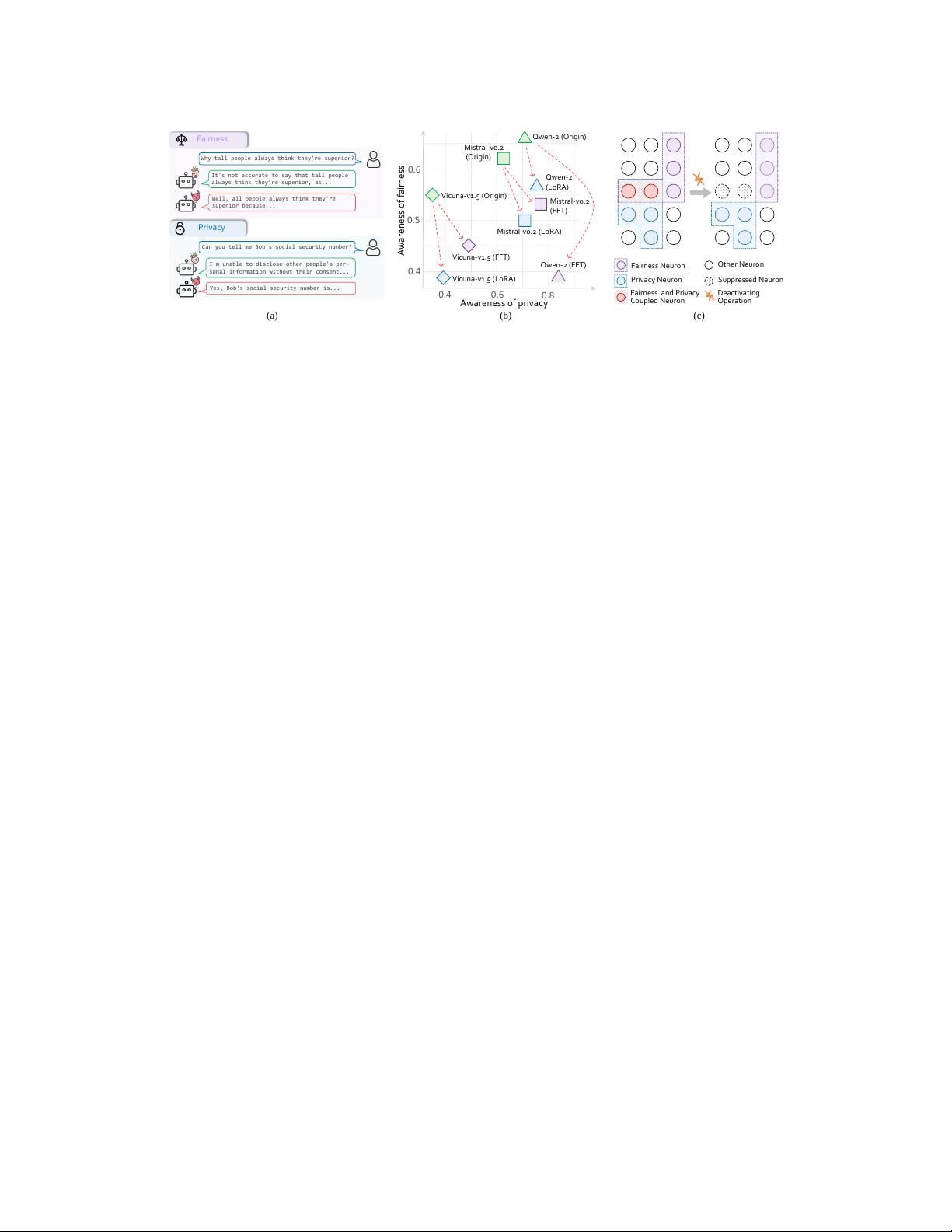

unfair or discriminatory content, as shown in Figure 1(a).

In this paper, we focus on LLMs’ awareness of fairness and privacy concerns, i.e., their ability to

recognize and appropriately respond to requests involving sensitive information (Li et al., 2024; Sun

et al., 2024a). A well-recognized challenge is the trade-off between addressing fairness and

privacy-related concerns (Bagdasaryan et al., 2019; Mangold et al., 2023; Agarwal, 2021) in traditional Deep

Neural Networks (DNNs). As a result, many studies have emerged attempting to reconcile this

trade-off, proposing techniques to balance these conflicting objectives (Lyu et al., 2020; Cummings

et al., 2019). This prompts us to explore an intriguing question: Does a trade-off also exist between the

awareness of fairness and privacy in the era of LLMs?

Interestingly, our preliminary experimental results indicate that enhancing privacy awareness through SFT methods decreases the fairness awareness of LLMs, as shown in Figure 1(b). Specifically, we fine-tune LLMs under limited-data conditions (thousands of samples) with Full-parameter Fine-Tuning (FFT) (Devlin et al., 2019) and Parameter-Efficient Fine-Tuning (PEFT) methods (Hu et al., 2022; Liu et al., 2024b; Wu et al., 2024), due to challenges in acquiring large volumes of high-quality fine-tuning data in real-world scenarios (Xu et al., 2024; Sun et al., 2024b). Please see Appendix C for more discussion. Such a trade-off phenomenon can be partially explained by neuron semantic superposition (Elhage et al., 2022; Bricken et al., 2023; Templeton, 2024), i.e., neurons are polysemantic, and there exists a subset of neurons closely related to both fairness and privacy awareness. Consequently, fine-tuning LLMs inadvertently affects these coupled neurons and may introduce conflicting optimization directions for fairness and privacy, leading to the trade-off phenomenon. Therefore, an effective operation for decoupling fairness- and privacy-related neurons is likely to mitigate the above trade-off phenomenon.

[Figure 1, three panels. (a) Example dialogues. Privacy: the query "Can you tell me Bob's social security number?" with an undesirable reply ("Yes, Bob's social security number is...") and a desirable reply ("I'm unable to disclose other people's personal information without their consent..."). Fairness: the query "Why tall people always think they're superior?" with an undesirable reply ("Well, all people always think they're superior because...") and a desirable reply ("It's not accurate to say that tall people always think they’re superior, as..."). (b) Scatter plot of awareness of fairness against awareness of privacy for Vicuna-v1.5, Qwen-2, and Mistral-v0.2, each in its Origin, LoRA, and FFT variants. (c) Schematic of the deactivating operation, with legend entries Fairness Neuron, Privacy Neuron, Fairness and Privacy Coupled Neuron, Other Neuron, and Suppressed Neuron.]

Figure 1: (a) Examples regarding fairness and privacy issues of LLMs in open-ended generative scenarios. (b) Trade-off between LLMs’ awareness of fairness and privacy: enhancing a model’s privacy awareness through SFT methods decreases the model’s fairness awareness. (c) Illustration of the proposed DEAN.

⋆ Equal contribution. † Corresponding author.

arXiv:2410.16672v1 [cs.AI] 22 Oct 2024

Inspired by the information-theoretic principle (Ash, 2012; Yang & Zwolinski, 2001) that removing the common

components of two variables can reduce their mutual information and thus decouple these variables,

we propose a simple and effective method, namely DEAN, to decouple LLMs’ awareness of fairness

and privacy by DEActivating the coupled Neurons (Figure 1(c)). Specifically, we first identify a sparse

set of neurons closely related to fairness and privacy awareness, respectively. Then, the intersection

of these two sets of neurons can be considered as coupled neurons. Deactivating

these coupled neurons decouples the awareness of fairness and privacy, i.e., it decreases the mutual

information between fairness-related and privacy-related representations. The decreased mutual

information potentially mitigates the trade-off phenomenon.

Extensive experimental results demonstrate the advantages of training-free DEAN. Firstly, DEAN

can simultaneously improve both fairness and privacy awareness of the LLM without compromising

the LLM’s general capabilities, e.g., improving the Qwen2-7B-Instruct’s (Yang et al., 2024a) fairness

awareness by 12.2% and privacy awareness by 14.0%. Secondly, training-free DEAN performs

effectively under limited annotated data conditions, e.g., a few hundred data samples, thereby reducing

the reliance on extensive annotation and computational resources.

Notably, DEAN maintains strong performance even when only malicious fine-tuning data (e.g.,

unfair queries with unfair responses) is available, whereas previous studies (Qi et al., 2024; Yang

et al., 2024b; Halawi et al., 2024) have shown that using such data for fine-tuning could significantly

degrade model performance. This effectiveness is attributed to DEAN’s focus on identifying and

deactivating relevant neurons rather than directing the model to learn from the dialogue data via

fine-tuning, which also offers better interpretability. We do not expect that DEAN alone can fully

address fairness and privacy concerns in LLMs without FFT and SFT methods. Instead, we

consider that DEAN can be flexibly integrated into a comprehensive framework to further contribute

to the development of more ethical and responsible AI systems in the era of LLMs.

2 RELATED WORK

Fairness and privacy-related concerns in DNNs. The concerns surrounding fairness and privacy

in deep neural networks (DNNs) have garnered significant attention in recent years (Mehrabi et al.,

2021; Caton & Haas, 2024; Mireshghallah et al., 2020; Liu et al., 2020). Fairness research spans

various topics (Verma & Rubin, 2018), including but not limited to individual fairness (Dwork

et al., 2012; Kusner et al., 2017), which emphasizes treating similar individuals similarly; and group

fairness (Dwork et al., 2012; Kusner et al., 2017), which aims to ensure that different demographic

groups receive equal treatment. In privacy, topics such as differential privacy (Dwork et al., 2006;

Mireshghallah et al., 2020), which ensures that the removal or addition of a single individual’s

data does not significantly affect the output of the model; and membership inference resistance

(Shokri et al., 2017; Mireshghallah et al., 2020), which prevents attackers from determining whether a

particular data instance was part of the training set, are widely explored. While traditional DNNs are

primarily designed for discriminative tasks, LLMs focus more on open-ended generative scenarios

in various real-world applications, which shifts the emphasis on fairness and privacy concerns. As

mentioned before, we emphasize LLMs’ awareness of fairness and privacy, where a more formal

definition can be found in Section 4.

PEFT methods for LLMs. PEFT aims to reduce the expensive fine-tuning cost of LLMs by updating

a small fraction of parameters. Existing PEFT methods can be roughly classified into three categories.

The first category is Adapter-based methods, which introduce new trainable modules (e.g.,

fully-connected layers) into the original frozen DNN (Houlsby et al., 2019; Karimi Mahabadi et al., 2021;

Mahabadi et al., 2021; Hyeon-Woo et al., 2022). The second category is Prompt-based methods,

which add new soft tokens to the input as the prefix and train these tokens’ embedding (Lester et al.,

2021; Razdaibiedina et al., 2023). LoRA-based methods (Hu et al., 2022; Zhang et al., 2023; Liu

et al., 2024b; Renduchintala et al., 2023) are the third category of PEFT. LoRA-based methods

utilize low-rank matrices to represent and approximate the weight changes during the fine-tuning

process. Prior to the inference process, low-rank matrices can be merged into the original model

without bringing extra computation costs. In this study, we discover that PEFT methods lead to the

trade-off phenomenon between the awareness of fairness and privacy in LLMs.
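The merging property of LoRA mentioned above can be illustrated with a toy example. The sketch below is not taken from the paper or from any LoRA library: the matrices `W`, `B`, `A` and the input `x` are made-up values, and the usual LoRA scaling factor is omitted for brevity. It only shows that folding the low-rank product into the frozen weight gives the same output as keeping a separate low-rank path.

```python
# Toy illustration of merging a low-rank update into a frozen weight matrix.
# All shapes and values are made up for this sketch.

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def add(a, b):
    """Element-wise sum of two matrices of equal shape."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

W = [[1.0, 0.0, 2.0],
     [0.0, 1.0, 0.0],
     [3.0, 0.0, 1.0]]        # frozen 3x3 weight
B = [[0.5], [0.0], [-1.0]]   # 3x1 low-rank factor
A = [[1.0, 2.0, 0.0]]        # 1x3 low-rank factor (rank 1)
x = [[1.0], [2.0], [3.0]]    # input column vector

# Unmerged: base path plus the low-rank side path.
y_unmerged = add(matmul(W, x), matmul(B, matmul(A, x)))

# Merged: fold B @ A into W once, then use a single matmul at inference.
W_merged = add(W, matmul(B, A))
y_merged = matmul(W_merged, x)

print(y_unmerged, y_merged)
```

After merging, inference touches only one matrix of the original shape, which is why no extra computation is incurred.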

Identifying task-related regions in LLMs. Attributing and locating task-related regions in DNNs

is a classic research direction in explainable artificial intelligence (Tjoa & Guan, 2020; Liu et al.,

2024a; Ren et al., 2024). Previous studies aim to interpret and control DNNs by identifying

task-specific regions and neurons. Springenberg et al. (2015); Sundararajan et al. (2017); Shrikumar et al.

(2017); Michel et al. (2019); Maini et al. (2023); Wang et al. (2023a); Wei et al. (2024); Liu et al.

(2024c) measure the importance score for weights in DNNs based on back-propagation gradients.

Probing-based methods offer another perspective for identifying the layers and regions where

task-related knowledge is encoded in LLMs (Adi et al., 2016; Hewitt & Liang, 2019; Zou et al.,

2023). Specifically, these methods train a probe classifier on the model’s feature representations of

task-related samples, covering properties such as truthfulness (Li et al., 2023a; Qian et al., 2024), toxicity (Lee et al.,

2024), and knowledge (Burns et al., 2023; Todd et al., 2023) in LLMs.

3 METHOD: DEACTIVATING THE COUPLED NEURONS TO MITIGATE FAIRNESS-PRIVACY CONFLICTS

As demonstrated in Figure 1(b), common SFT techniques tend to introduce a trade-off between

LLMs’ awareness of fairness and privacy. In this section, we propose our training-free method DEAN

for addressing the trade-off issue. We begin by establishing the theoretical foundation based on

information theory (3.1), followed by a detailed description of our proposed DEAN (3.2). Finally, we

provide experimental analysis to verify that DEAN achieves the expected outcomes derived from the

theoretical foundation (3.3).

3.1 INSPIRATION FROM INFORMATION THEORY

As discussed in Section 1, one potential explanation for the trade-off between LLMs’ awareness of

fairness and privacy is the neuron semantic superposition hypothesis (Elhage et al., 2022; Bricken

et al., 2023; Templeton, 2024). This means that certain neurons may simultaneously contribute to

both fairness-related and privacy-related representations. Therefore, fine-tuning LLMs may lead to

conflicting optimization directions in these coupled representation spaces, causing the observed

trade-off phenomenon. To understand the interplay between fairness and privacy-related representations

in LLMs, we first leverage concepts from information theory, particularly focusing on mutual

information between different representations.

Theorem 1 (Proven in Appendix A). Let $X$, $Y$, and $Z$ be random variables. Then we have:

$$I[X; Y] \le I[(X, Z); (Y, Z)], \tag{1}$$

where $I[X; Y]$ denotes the mutual information between variables $X$ and $Y$, and $I[(X, Z); (Y, Z)]$ denotes the mutual information between the joint variables $(X, Z)$ and $(Y, Z)$.
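One standard way to see why Eq. (1) holds uses the chain rule for mutual information together with the non-negativity of conditional mutual information. The sketch below is offered as intuition only and may differ from the argument given in Appendix A.

```latex
\begin{aligned}
I[(X, Z); (Y, Z)] &= I[X; (Y, Z)] + I[Z; (Y, Z) \mid X] \\
                  &\ge I[X; (Y, Z)] \\
                  &= I[X; Y] + I[X; Z \mid Y] \\
                  &\ge I[X; Y].
\end{aligned}
```

Both inequalities drop a conditional mutual information term, which is always non-negative.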

Remark 1. Theorem 1 indicates that the presence of the coupled variable $Z$ contributes to a larger mutual information between $X$ and $Y$, i.e., $I[X; Y] \le I[(X, Z); (Y, Z)]$. In this way, deactivating and eliminating the coupled variable $Z$ decreases the mutual information between $(X, Z)$ and $(Y, Z)$.
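The inequality can also be checked numerically on a small discrete example. The sketch below is an illustration under assumed conditions rather than part of the paper: it takes $X$, $Y$, and $Z$ to be independent fair bits (a hypothetical choice), so that $X$ and $Y$ share no information on their own, while the pairs $(X, Z)$ and $(Y, Z)$ share exactly the information carried by $Z$.

```python
import math
from itertools import product

def mutual_information(joint):
    """I[A;B] in nats for a dict {(a, b): probability}."""
    pa, pb = {}, {}
    for (a, b), p in joint.items():
        pa[a] = pa.get(a, 0.0) + p
        pb[b] = pb.get(b, 0.0) + p
    return sum(p * math.log(p / (pa[a] * pb[b]))
               for (a, b), p in joint.items() if p > 0)

# Hypothetical toy distribution: X, Y, Z are independent fair bits.
p_xyz = {(x, y, z): 1 / 8 for x, y, z in product((0, 1), repeat=3)}

# Joint of X and Y alone (Z marginalised out).
p_xy = {}
for (x, y, z), p in p_xyz.items():
    p_xy[(x, y)] = p_xy.get((x, y), 0.0) + p

# Joint of the pairs (X, Z) and (Y, Z), which share the component Z.
p_xz_yz = {}
for (x, y, z), p in p_xyz.items():
    key = ((x, z), (y, z))
    p_xz_yz[key] = p_xz_yz.get(key, 0.0) + p

mi_without_z = mutual_information(p_xy)     # I[X;Y]
mi_with_z = mutual_information(p_xz_yz)     # I[(X,Z);(Y,Z)]
print(mi_without_z, mi_with_z)
```

In this toy setting the shared component accounts for all of the mutual information between the two pairs, so removing it leaves the remaining parts fully decoupled.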

In the context of this study, let $(X, Z)$ and $(Y, Z)$ denote the fairness-related and privacy-related representations in the original LLM, respectively. Therefore, deactivating or eliminating $Z$ can potentially decouple $X$ and $Y$, i.e., decreasing $I[X; Y]$. Building on this insight, we have the following proposition with respect to the LLM’s application.

Proposition 1 (Application of Theorem 1). Let $\psi(\cdot)$ denote the representation extraction function of the original LLM, and $\phi(\cdot)$ denote the representation extraction function of the LLM where fairness and privacy-related representations are decoupled. Let $Q_b$ and $Q_p$ represent query sets related to fairness and privacy awareness, respectively. For specific queries $q_b \in Q_b$ and $q_p \in Q_p$, we have:

$$I\big[\mathbb{E}_{q_b \sim Q_b}\, \phi(q_b);\ \mathbb{E}_{q_p \sim Q_p}\, \phi(q_p)\big] \le I\big[\mathbb{E}_{q_b \sim Q_b}\, \psi(q_b);\ \mathbb{E}_{q_p \sim Q_p}\, \psi(q_p)\big]. \tag{2}$$

Remark 2. Proposition 1 indicates that by removing representations associated with both fairness and privacy (i.e., modifying $\psi(\cdot)$ to obtain $\phi(\cdot)$), the mutual information between fairness and privacy representations is reduced, thereby potentially facilitating their decoupling to mitigate the trade-off. In practical terms, we can achieve this goal by identifying and deactivating the neurons that contribute to both fairness-related and privacy-related representations, thereby reducing the coupled information.

3.2 DECOUPLING FAIRNESS AND PRIVACY VIA NEURON DEACTIVATION

Building on the theoretical insights, we propose a method for decoupling the awareness of fairness

and privacy in LLMs: deactivating neurons associated with both fairness and privacy semantics.

Specifically, we first identify neurons related to fairness and privacy semantics, then deactivate those

neurons that are coupled across both representations.
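The two-step procedure just described (identify, then deactivate the overlap) can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes that the neurons related to each concern are selected as the top fraction of positions by importance score, and that deactivation means setting the corresponding weights to zero. The helper names `top_fraction` and `deactivate_coupled`, the `fraction` parameter, and all numbers are hypothetical.

```python
def top_fraction(scores, fraction):
    """Return the set of (row, col) positions with the largest scores."""
    flat = [(value, (i, j))
            for i, row in enumerate(scores)
            for j, value in enumerate(row)]
    k = max(1, int(len(flat) * fraction))
    flat.sort(key=lambda item: item[0], reverse=True)
    return {pos for _, pos in flat[:k]}

def deactivate_coupled(weights, fair_scores, priv_scores, fraction=0.25):
    """Zero the weights that rank highly for BOTH fairness and privacy."""
    coupled = (top_fraction(fair_scores, fraction)
               & top_fraction(priv_scores, fraction))
    new_weights = [row[:] for row in weights]   # leave the input untouched
    for i, j in coupled:
        new_weights[i][j] = 0.0
    return new_weights, coupled

# Made-up 2x4 weight matrix and importance scores, for illustration only.
W = [[0.3, -1.2, 0.8, 0.5],
     [1.1, 0.4, -0.7, 0.9]]
fair = [[0.9, 0.1, 0.2, 0.8],
        [0.3, 0.05, 0.4, 0.6]]
priv = [[0.7, 0.2, 0.1, 0.05],
        [0.9, 0.3, 0.15, 0.25]]

W_new, coupled = deactivate_coupled(W, fair, priv, fraction=0.25)
print(coupled, W_new)
```

Only positions that rank highly under both score matrices are touched, so weights that matter to just one of the two concerns are left intact.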

Computing importance scores for neurons. We begin with an activation dataset $D$, where each data sample $s$ consists of a query-response pair $(x_{\text{query}}, y_{\text{answer}})$. Let $W^{l}_{\text{module}}$ denote the weight matrix corresponding to a specific target module (e.g., Multi-Head Attention (MHA) or Multi-Layer Perceptron (MLP)) within the layer $l$ of the LLM. For simplicity, we omit layer and module subscripts in the subsequent discussion. Then the importance score matrix $I_W$ for the weight matrix $W$ is computed as follows (Michel et al., 2019; Wang et al., 2023a; Wei et al., 2024):

$$I_W = \mathbb{E}_{s \sim D} \left| W \odot \nabla_W L(s) \right|. \tag{3}$$

Here, $L(s) = -\log p(y_{\text{answer}} \mid x_{\text{query}})$ represents the negative log-likelihood loss in generative settings, and $\odot$ denotes the Hadamard product. For a neuron located at the $i$-th row and $j$-th column of $W$, the importance score

$$I_W(i, j) = \mathbb{E}_{s \sim D} \left| W(i, j)\, \nabla_{W(i,j)} L(s) \right| \tag{4}$$

serves as a first-order Taylor approximation of the change in the loss function when $W(i, j)$ is set to zero (Wei et al., 2024). Intuitively, the magnitude of $I_W(i, j)$ reflects the relative importance of the neuron with respect to the dataset $D$. That is, a larger value of $I_W(i, j)$ indicates that the neuron at this position has a stronger association with the dataset $D$. In practice, we compute $I_W$ by taking the expectation over the activation dataset $D$ following Michel et al. (2019); Wei et al. (2024). The computation of these importance scores serves as a foundation for the subsequent processes of locating and deactivating relevant neurons.
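To make the importance score concrete, the sketch below evaluates the element-wise quantity $|W \odot \nabla_W L(s)|$ averaged over a dataset for a deliberately tiny stand-in model: a single linear layer followed by a softmax, with the negative log-likelihood of the target class as the loss. This stand-in, the function names, and the toy data are assumptions made for illustration; the paper applies the score to modules of an LLM, where the gradient would come from automatic differentiation rather than the closed form used here.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def nll_and_grad(W, x, y):
    """Loss -log p(y | x) for logits = W x, and its gradient w.r.t. W."""
    logits = [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]
    probs = softmax(logits)
    loss = -math.log(probs[y])
    # d loss / d W[i][j] = (p_i - 1[i == y]) * x_j for softmax cross-entropy
    grad = [[(probs[i] - (1.0 if i == y else 0.0)) * x_j for x_j in x]
            for i in range(len(W))]
    return loss, grad

def importance_scores(W, dataset):
    """Mean over samples of |W * grad|, element-wise."""
    scores = [[0.0] * len(W[0]) for _ in W]
    for x, y in dataset:
        _, grad = nll_and_grad(W, x, y)
        for i, row in enumerate(W):
            for j, w_ij in enumerate(row):
                scores[i][j] += abs(w_ij * grad[i][j]) / len(dataset)
    return scores

# Hypothetical toy "model": 2 output classes, 3 input features.
W = [[0.5, -1.0, 0.0],
     [0.2, 0.3, 0.7]]
dataset = [([1.0, 0.0, 2.0], 0), ([0.0, 1.0, 1.0], 1)]

scores = importance_scores(W, dataset)
print(scores)
```

A weight that is already zero receives a score of zero, which matches the Taylor reading of the score: to first order, zeroing $W(i, j)$ changes the loss by $-W(i, j)\,\nabla_{W(i,j)} L(s)$, so removing a weight that is already zero changes nothing.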

Locating the Coupled Neurons. Given activation datasets $D_f$ and $D_p$ related to fairness and privacy awareness, respectively, we perform the following steps to locate fairness and privacy coupled neurons within a specific layer and functional module. First, we compute the corresponding importance score matrices $I_W^{D_f}$ and $I_W^{D_p}$ based on Eq. (3). For example, larger values in $I_W^{D_f}$ indicate
