Generic author design sample pages 1998/07/09 14:59

11 Making Large-Scale SVM Learning Practical

Thorsten Joachims

Universität Dortmund, Informatik, AI-Unit

Thorsten_Joachims@cs.uni-dortmund.de

http://www-ai.cs.uni-dortmund.de/PERSONAL/joachims.html

To be published in: 'Advances in Kernel Methods - Support Vector Learning', Bernhard Schölkopf, Christopher J. C. Burges, and Alexander J. Smola (eds.), MIT Press, Cambridge, USA, 1998.

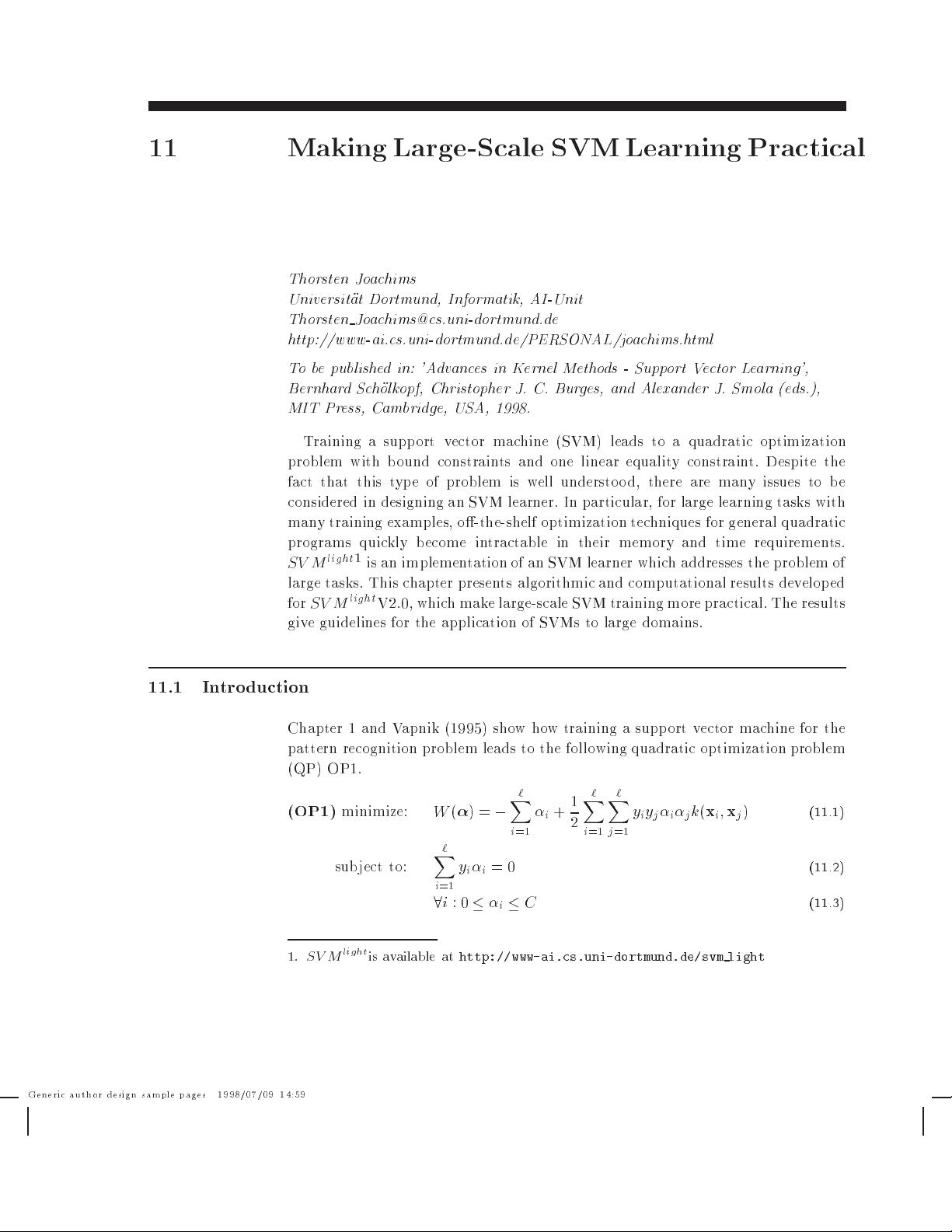

Training a support vector machine (SVM) leads to a quadratic optimization problem with bound constraints and one linear equality constraint. Although this type of problem is well understood, there are many issues to be considered in designing an SVM learner. In particular, for large learning tasks with many training examples, off-the-shelf optimization techniques for general quadratic programs quickly become intractable in their memory and time requirements.
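The memory side of this claim can be made concrete with a little arithmetic. A solver that keeps the full matrix of the quadratic term in memory needs one entry per pair of training examples, so storage grows with the square of the number of examples n. The sketch below is a back-of-the-envelope estimate only; it assumes dense 8-byte doubles with no use of symmetry or sparsity, and the function name `dense_hessian_bytes` is hypothetical.

```python
# Back-of-the-envelope estimate: memory for a dense n x n matrix of the
# quadratic term, as held by a solver that stores it in full.
# Assumptions: 8-byte doubles; symmetry and sparsity are not exploited.

BYTES_PER_DOUBLE = 8


def dense_hessian_bytes(num_examples: int) -> int:
    """Bytes needed for a num_examples x num_examples matrix of doubles."""
    return num_examples * num_examples * BYTES_PER_DOUBLE


if __name__ == "__main__":
    for n in (1_000, 10_000, 100_000):
        gib = dense_hessian_bytes(n) / 2**30
        print(f"n = {n:>7,}: {gib:8.2f} GiB")
```

Growing the training set tenfold multiplies the storage a hundredfold: roughly 0.75 GiB for 10,000 examples and roughly 75 GiB for 100,000.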

SVM^light (footnote 1) is an implementation of an SVM learner that addresses the problem of large learning tasks. This chapter presents algorithmic and computational results developed for SVM^light V2.0, which make large-scale SVM training more practical. The results give guidelines for the application of SVMs to large domains.

11.1 Introduction

Chapter 1 and Vapnik (1995) show how training a support vector machine for the pattern recognition problem leads to the following quadratic optimization problem (QP) OP1.

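(OP1)

minimize: $$W(\alpha) = -\sum_{i=1}^{\ell} \alpha_i + \frac{1}{2} \sum_{i=1}^{\ell} \sum_{j=1}^{\ell} y_i y_j \alpha_i \alpha_j \, k(x_i, x_j) \qquad (11.1)$$

subject to: $$\sum_{i=1}^{\ell} y_i \alpha_i = 0 \qquad (11.2)$$

$$\forall i: \; 0 \le \alpha_i \le C \qquad (11.3)$$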
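To make the roles of the terms in OP1 concrete, the sketch below evaluates the objective W(α) of (11.1) and checks constraints (11.2) and (11.3) for a candidate vector α. It is an illustration only, not the solver used in SVM^light: the linear kernel, the function names `linear_kernel`, `op1_objective` and `op1_feasible`, and the tolerance on the equality constraint are all assumptions made for this example.

```python
# A minimal sketch of evaluating OP1, equations (11.1) through (11.3).
# Assumptions (not from the chapter): linear kernel k(x, z) = <x, z>,
# plain Python lists for the data, labels y_i in {-1, +1}, and a small
# tolerance for the equality constraint (11.2).
from typing import Callable, Sequence

Vector = Sequence[float]


def linear_kernel(x: Vector, z: Vector) -> float:
    """Hypothetical kernel choice: k(x, z) = sum_d x_d * z_d."""
    return sum(a * b for a, b in zip(x, z))


def op1_objective(alpha: Vector, X: Sequence[Vector], y: Sequence[int],
                  kernel: Callable[[Vector, Vector], float] = linear_kernel) -> float:
    """W(alpha) = -sum_i alpha_i + 1/2 sum_i sum_j y_i y_j alpha_i alpha_j k(x_i, x_j)."""
    n = len(alpha)
    linear_term = -sum(alpha)
    quad_term = 0.0
    # The double loop performs n * n kernel evaluations.
    for i in range(n):
        for j in range(n):
            quad_term += y[i] * y[j] * alpha[i] * alpha[j] * kernel(X[i], X[j])
    return linear_term + 0.5 * quad_term


def op1_feasible(alpha: Vector, y: Sequence[int], C: float, tol: float = 1e-9) -> bool:
    """Check (11.2) sum_i y_i alpha_i = 0 and (11.3) 0 <= alpha_i <= C for all i."""
    equality_ok = abs(sum(yi * ai for yi, ai in zip(y, alpha))) <= tol
    bounds_ok = all(0.0 <= ai <= C for ai in alpha)
    return equality_ok and bounds_ok


if __name__ == "__main__":
    X = [[1.0, 0.0], [-1.0, 0.0]]
    y = [1, -1]
    alpha = [0.5, 0.5]
    print(op1_feasible(alpha, y, C=1.0), op1_objective(alpha, X, y))
```

For the two-point toy problem in the usage example, (11.2) forces α_1 = α_2 = a, so W reduces to -2a + 2a², which is minimized at a = 0.5 with W = -0.5. The double loop also shows why the quadratic term dominates the cost: it touches every pair of training examples.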

1. SVM^light is available at http://www-ai.cs.uni-dortmund.de/svm_light
