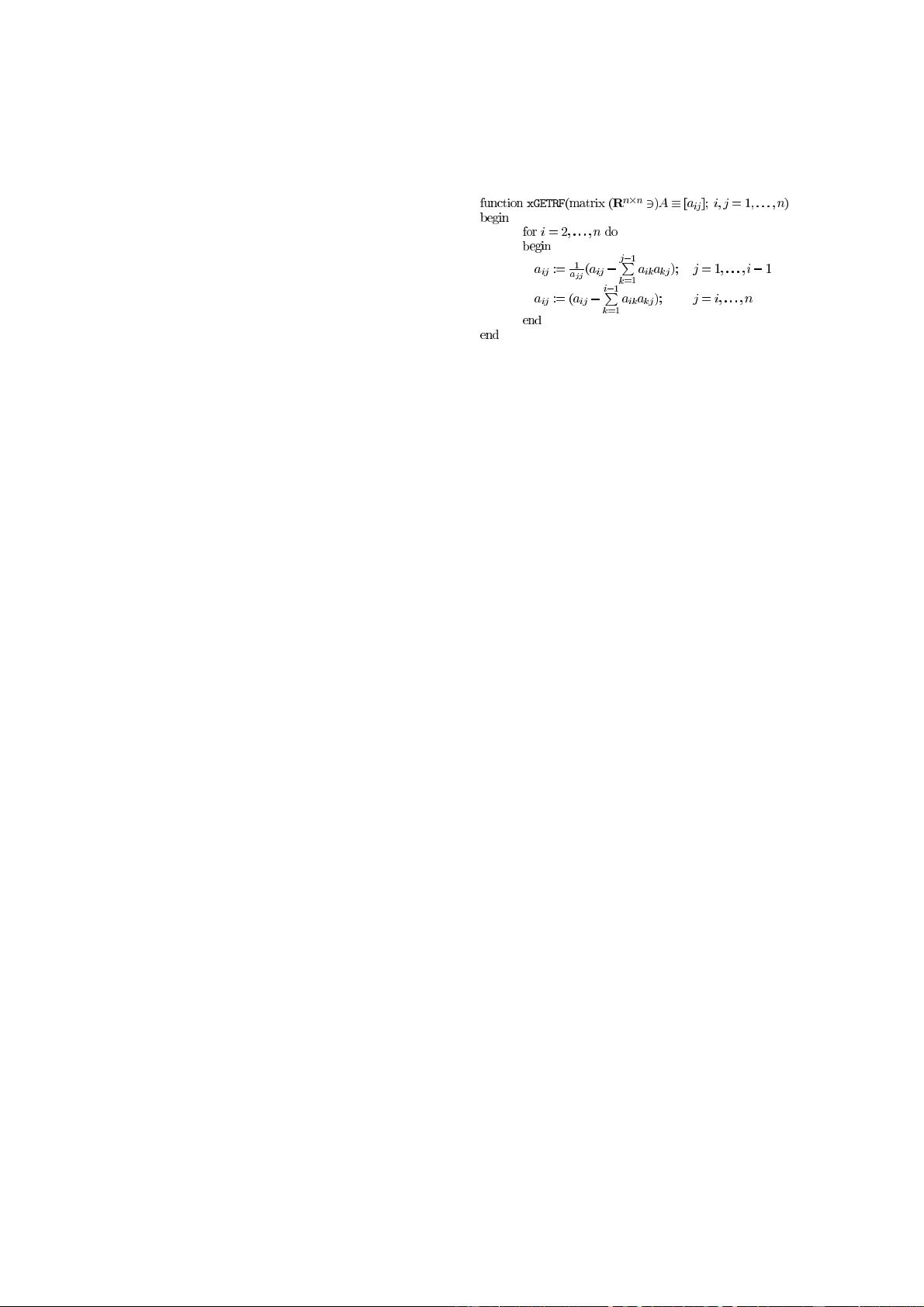

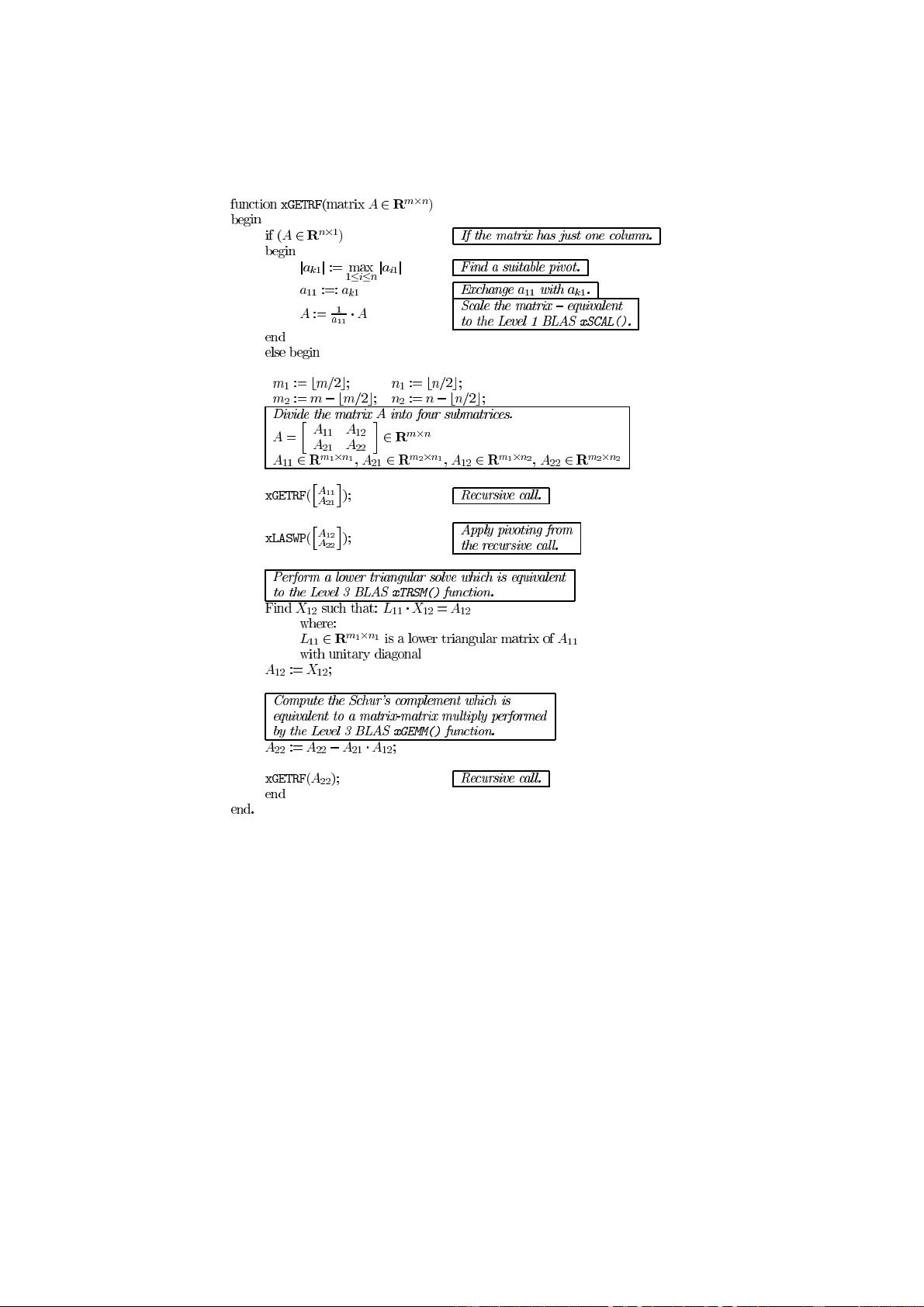

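Parallel computing plays a central role in modern scientific computing and engineering, particularly in solving large sparse linear systems. A sparse linear system is one whose coefficient matrix consists mostly of zeros; storing and operating on only the nonzero entries greatly reduces both memory use and computation. This topic covers how parallel computing is applied to solving such systems.

I. Sparse matrix representation and storage

1. Sparse matrices are usually stored in a compressed format such as the triplet (Triple) format, Compressed Row Storage (CRS), or Compressed Column Storage (CCS). These formats keep only the nonzero entries and their positions, which saves considerable memory for large sparse matrices.
2. For parallel computing, CRS is the more common choice: it performs well for row-oriented operations and suits parallel computation on distributed-memory systems.

II. Parallel computing strategies

1. Distributed-memory parallelism: the sparse matrix is partitioned into blocks, and each processing node handles one part of the problem. Processes communicate through, for example, MPI (Message Passing Interface) to solve the whole system cooperatively.
2. Shared-memory parallelism: multiple threads on one machine share memory. An API such as OpenMP handles synchronization and communication between threads while they work on different parts of the matrix in parallel.
3. GPU acceleration: a graphics processing unit (GPU) has a large number of compute cores and is well suited to parallel work. Programming models such as CUDA or OpenACC expose this parallelism for solving sparse linear systems efficiently.

III. Parallel algorithms

1. Direct methods: LU, Cholesky, or QR factorization. These methods transform the linear system into a form that is easier to solve. Parallel versions usually process the matrix in blocks so that the factorization itself can be parallelized.
2. Iterative methods: CG (Conjugate Gradient), GMRES (Generalized Minimal Residual), BiCGStab (Biconjugate Gradient Stabilized), and others. These methods refine an approximate solution step by step and suit large problems because they need only matrix-vector products with the sparse matrix rather than a full factorization. The iteration itself, the preconditioning step, or individual matrix operations can each be parallelized.

IV. Parallel efficiency optimization

1. Load balancing: keep the workload as even as possible across compute nodes, so that no node sits idle after finishing early while others are still busy.
2. Locality optimization: exploit data locality to reduce unnecessary data movement and improve the cache hit rate.
3. Tuning the degree of parallelism: choose the degree of parallelism to match the hardware resources and the problem size, since too much parallelism leads to excessive communication overhead.

V. Application areas

1. Engineering simulation: large-scale numerical simulation in fluid dynamics, electromagnetics, structural mechanics, and similar fields.
2. Data science: optimization problems in machine learning and deep learning often involve sparse matrices.
3. Internet technology: recommender systems and search engine ranking also frequently involve solving sparse linear systems.

Parallel solution of sparse linear systems is a multi-layered topic that spans sparse matrix storage, parallel computing strategies, the choice of parallel algorithm, and performance optimization. Understanding these areas matters for solving practical problems and for improving computational efficiency.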
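To make the CRS layout and the iterative approach described above more concrete, here is a minimal serial sketch in pure Python. It is an illustration only, not taken from the bundled papers: the function names (`dense_to_csr`, `csr_matvec`, `conjugate_gradient`) and the small test matrix are assumptions chosen for the example, and the CG routine assumes a symmetric positive definite matrix with no preconditioning.

```python
def dense_to_csr(dense):
    """Convert a dense matrix (list of rows) into CRS arrays.

    Returns (values, col_idx, row_ptr), where row i owns the slice
    values[row_ptr[i]:row_ptr[i + 1]].
    """
    values, col_idx, row_ptr = [], [], [0]
    for row in dense:
        for j, a in enumerate(row):
            if a != 0:
                values.append(a)
                col_idx.append(j)
        row_ptr.append(len(values))
    return values, col_idx, row_ptr


def csr_matvec(values, col_idx, row_ptr, x):
    """Compute y = A @ x for a matrix stored in CRS.

    Each row's sum is independent of the others, so rows could be
    split across threads or processes in a parallel version.
    """
    y = []
    for i in range(len(row_ptr) - 1):
        s = 0.0
        for k in range(row_ptr[i], row_ptr[i + 1]):
            s += values[k] * x[col_idx[k]]
        y.append(s)
    return y


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def conjugate_gradient(values, col_idx, row_ptr, b, tol=1e-10, max_iter=1000):
    """Unpreconditioned CG; assumes A is symmetric positive definite."""
    x = [0.0] * len(b)
    r = list(b)          # residual b - A @ x, with x = 0 initially
    p = list(r)          # search direction
    rs_old = dot(r, r)
    for _ in range(max_iter):
        if rs_old ** 0.5 < tol:
            break
        Ap = csr_matvec(values, col_idx, row_ptr, p)
        alpha = rs_old / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        beta = rs_new / rs_old
        p = [ri + beta * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x


if __name__ == "__main__":
    # Hypothetical 3x3 tridiagonal SPD system used only for illustration.
    A = [[4.0, -1.0, 0.0],
         [-1.0, 4.0, -1.0],
         [0.0, -1.0, 4.0]]
    vals, cols, ptr = dense_to_csr(A)
    print(conjugate_gradient(vals, cols, ptr, [3.0, 2.0, 3.0]))
```

The only operation that touches the matrix is `csr_matvec`, which is why iterative methods pair naturally with CRS: a distributed or multithreaded version would mainly need to partition the rows and combine the dot products.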

sparseLinearSystemSolverAndLU.7z (3个子文件)

sparseLinearSystemSolverAndLU.7z (3个子文件)  sparseLinearSystemSolverAndLU

sparseLinearSystemSolverAndLU  PARALLEL DIRECT METHODS FOR SPARSE LINEAR SYSTEMS by Michael T Heath.pdf 224KB

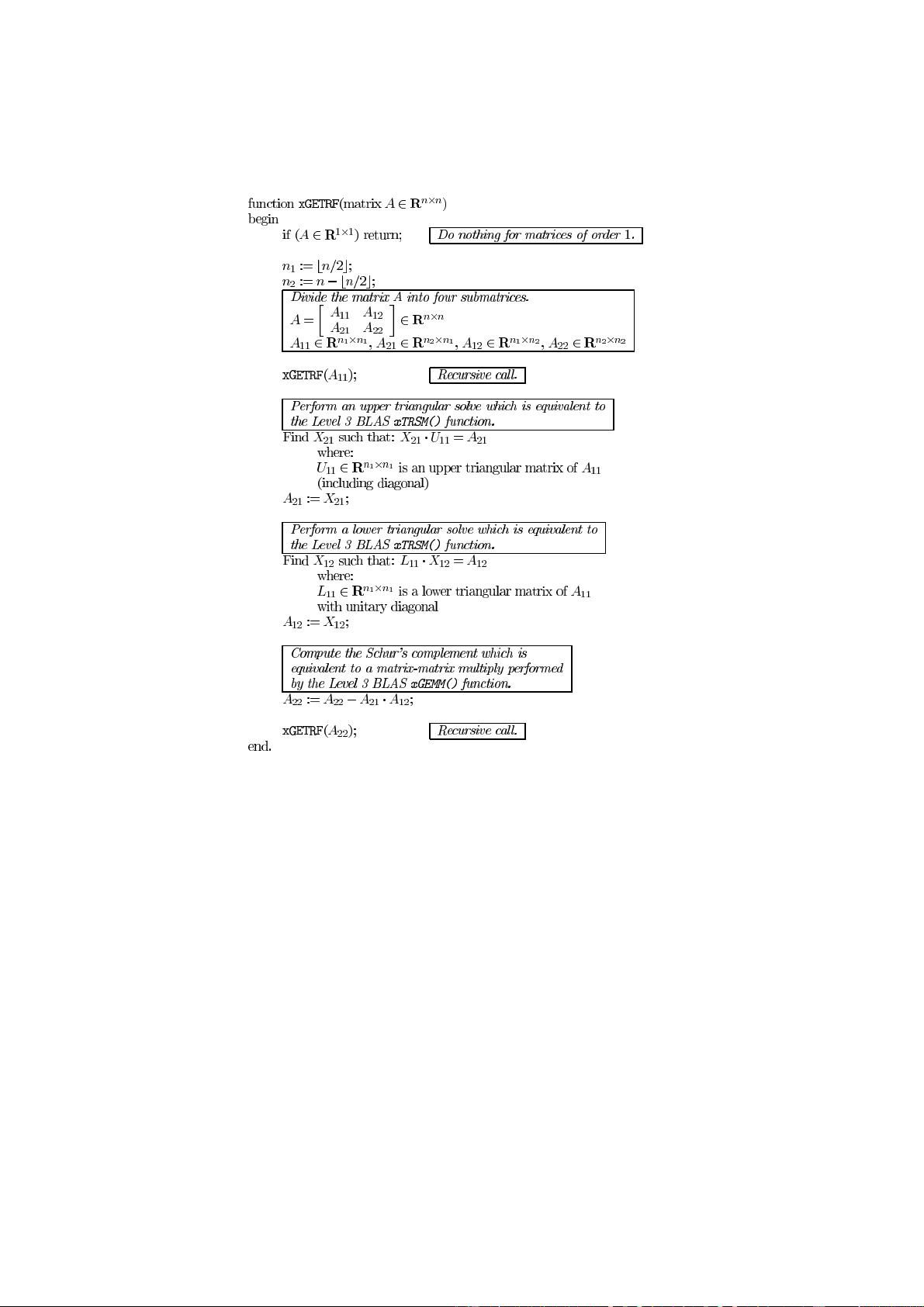

PARALLEL DIRECT METHODS FOR SPARSE LINEAR SYSTEMS by Michael T Heath.pdf 224KB Recursive approach in sparse matrix LU 569670.pdf 1.5MB

Recursive approach in sparse matrix LU 569670.pdf 1.5MB Recursive Approach in Sparse Matrix LU Factorization 2001-recursive.pdf 197KB

Recursive Approach in Sparse Matrix LU Factorization 2001-recursive.pdf 197KB- 1
