as oversmoothing, due to their repeated aggregation of local information [

19

], and over-squashing,

due to the exponential blow-up in computation paths as the model depth increases [1].

As a result, there is a growing interest in deep learning techniques that encode graph structure as a soft

inductive bias, rather than as a hard-coded aspect of message passing [

14

,

24

]. A central issue with

message-passing paradigm is that input graph structure is encoded by restricting the structure of the

model’s computation graph, inherently limiting its flexibility. This reminds us of how early recurrent

neural networks (RNNs) encoded sequential structure via their computation graph—a strategy that

leads to well-known pathologies such as the inability to model long-range dependencies [20].

There is a growing trend across deep learning towards more flexible architectures, which avoid strict

and structural inductive biases. Most notably, the exceptionally successful Transformer architecture

removes any structural inductive bias by encoding the structure via soft inductive biases, such as

positional encodings [

36

]. In the context of GNNs, the self-attention mechanism of a Transformer

can be viewed as passing messages between all nodes, regardless of the input graph connectivity.

Prior work has proposed to use attention in GNNs in different ways. First, the GAT model [

37

]

proposed local attention on pairs of nodes that allows a learnable convolutional kernel. The GTN work

[

42

] has improved on the GAT for node and link predictions while keeping a similar architecture,

while other message-passing approaches have used enhancing spectral features [

8

,

13

] . More

recently, the GT model [

14

] was proposed as a generalization of Transformers to graphs, where they

experimented with sparse and full graph attention while providing low-frequency eigenvectors of the

Laplacian as positional encodings.

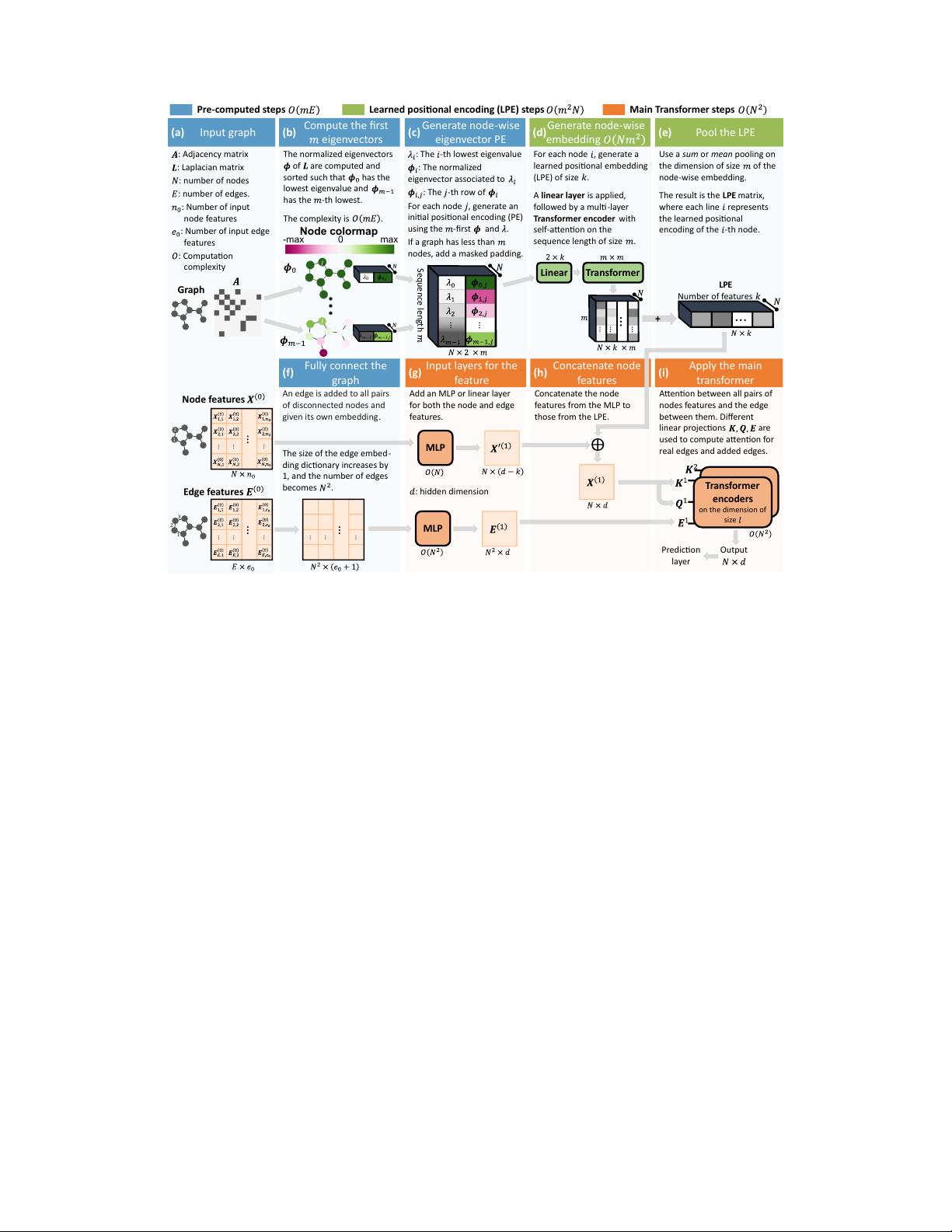

In this work, we offer a principled investigation of how Transformer architectures can be applied

in graph representation learning.

Our primary contribution

is the development of novel and

powerful learnable positional encoding methods, which are rooted in spectral graph theory. Our

positional encoding technique — and the resulting spectral attention network (SAN) architecture —

addresses key theoretical limitations in prior graph Transformer work [

14

] and provably exceeds the

expressive power of standard message-passing GNNs. We show that full Transformer-style attention

provides consistent empirical gains compared to an equivalent sparse message-passing model, and

we demonstrate that our SAN architecture is competitive with or exceeding the state-of-the-art on

several well-known graph benchmarks. An overview of the entire method is presented in Figure 1,

with a link to the code here: https://github.com/DevinKreuzer/SAN.

2 Theoretical Motivations

There can be a significant loss in structural information if naively generalizing Transformers to graphs.

To preserve this information as well as local connectivity, previous studies [

37

,

14

] have proposed to

use the eigenfunctions of their Laplacian as positional encodings. Taking this idea further by using

the full expressivity of eigenfunctions as positional encodings, we can propose a principled way

of understanding graph structures using their spectra. The advantages of our methods compared to

previous studies [37, 14] are shown in Table 1.

Table 1: Comparison of the properties of different graph Transformer models.

MODELS GAT [37] GT sparse [14] GT full [14] SAN (Node LPE)

Preserves local structure in attention 3 3 7 3

Uses edge features 7 3 7 3

Connects non-neighbouring nodes 7 7 3 3

Uses eigenvector-based PE for attention 7 3 3 3

Use a PE with structural information 7 3 7

2

3

Considers the ordering of the eigenvalues 7 3 3 3

Invariant to the norm of the eigenvector - 3 3 3

Considers the spectrum of eigenvalues 7 7 7 3

Considers variable # of eigenvectors - 7 7 3

Aware of eigenvalue multiplicities - 7 7 3

Invariant to the sign of the eigenvectors - 7 7 7

1

Presented results add full connectivity before computing the eigenvectors, thus losing the structural informa-

tion of the graph.

2

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜

信息提交成功

信息提交成功