Rank & Sort Loss for Object Detection and Instance Segmentation

Kemal Oksuz, Baris Can Cam, Emre Akbas

∗

, Sinan Kalkan

*

Dept. of Computer Engineering, Middle East Technical University, Ankara, Turkey

{kemal.oksuz, can.cam, eakbas, skalkan}@metu.edu.tr

Abstract

We propose Rank & Sort (RS) Loss, a ranking-based loss

function to train deep object detection and instance seg-

mentation methods (i.e. visual detectors). RS Loss super-

vises the classifier, a sub-network of these methods, to rank

each positive above all negatives as well as to sort positives

among themselves with respect to (wrt.) their localisation

qualities (e.g. Intersection-over-Union - IoU). To tackle the

non-differentiable nature of ranking and sorting, we refor-

mulate the incorporation of error-driven update with back-

propagation as Identity Update, which enables us to model

our novel sorting error among positives. With RS Loss, we

significantly simplify training: (i) Thanks to our sorting ob-

jective, the positives are prioritized by the classifier with-

out an additional auxiliary head (e.g. for centerness, IoU,

mask-IoU), (ii) due to its ranking-based nature, RS Loss is

robust to class imbalance, and thus, no sampling heuris-

tic is required, and (iii) we address the multi-task nature

of visual detectors using tuning-free task-balancing coeffi-

cients. Using RS Loss, we train seven diverse visual detec-

tors only by tuning the learning rate, and show that it con-

sistently outperforms baselines: e.g. our RS Loss improves

(i) Faster R-CNN by

∼

3 box AP and aLRP Loss (ranking-

based baseline) by

∼

2 box AP on COCO dataset, (ii) Mask

R-CNN with repeat factor sampling (RFS) by 3.5 mask AP

(

∼

7 AP for rare classes) on LVIS dataset; and also out-

performs all counterparts. Code is available at: https:

//github.com/kemaloksuz/RankSortLoss.

1. Introduction

Owing to their multi-task (e.g. classification, box regres-

sion, mask prediction) nature, object detection and instance

segmentation methods rely on loss functions of the form:

L

V D

=

X

k∈K

X

t∈T

λ

k

t

L

k

t

, (1)

which combines L

k

t

, the loss function for task t on stage

k (e.g. |K| = 2 for Faster R-CNN [32] with RPN and R-

*

Equal contribution for senior authorship.

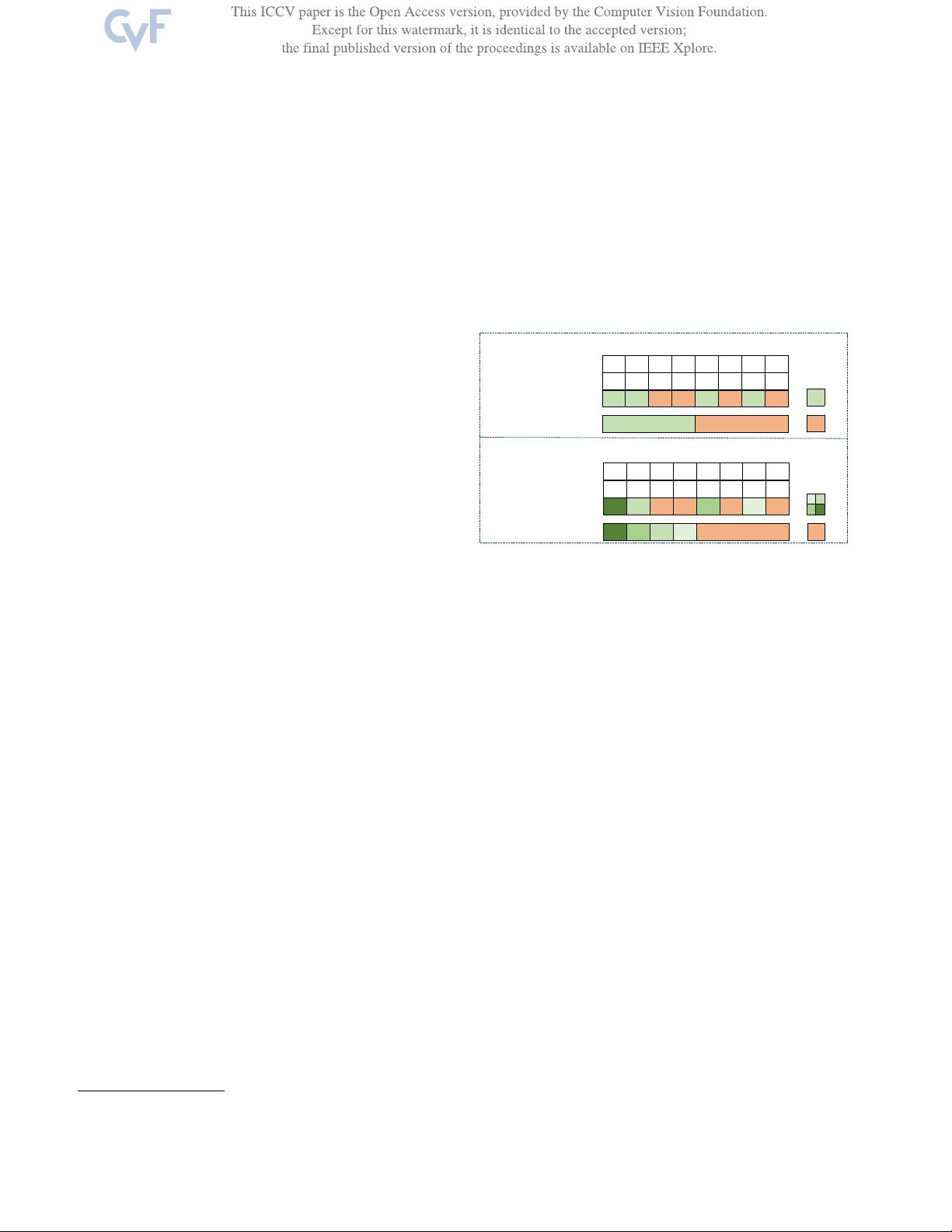

Classification Logits

0 1 2 3 4 5 6 7

Binary Labels

1 1 0 0 1 0 1 0

Target Ranking (𝑖)

Anchor ID (𝑖 )

(a) Ranking positives (+) above negatives (-)

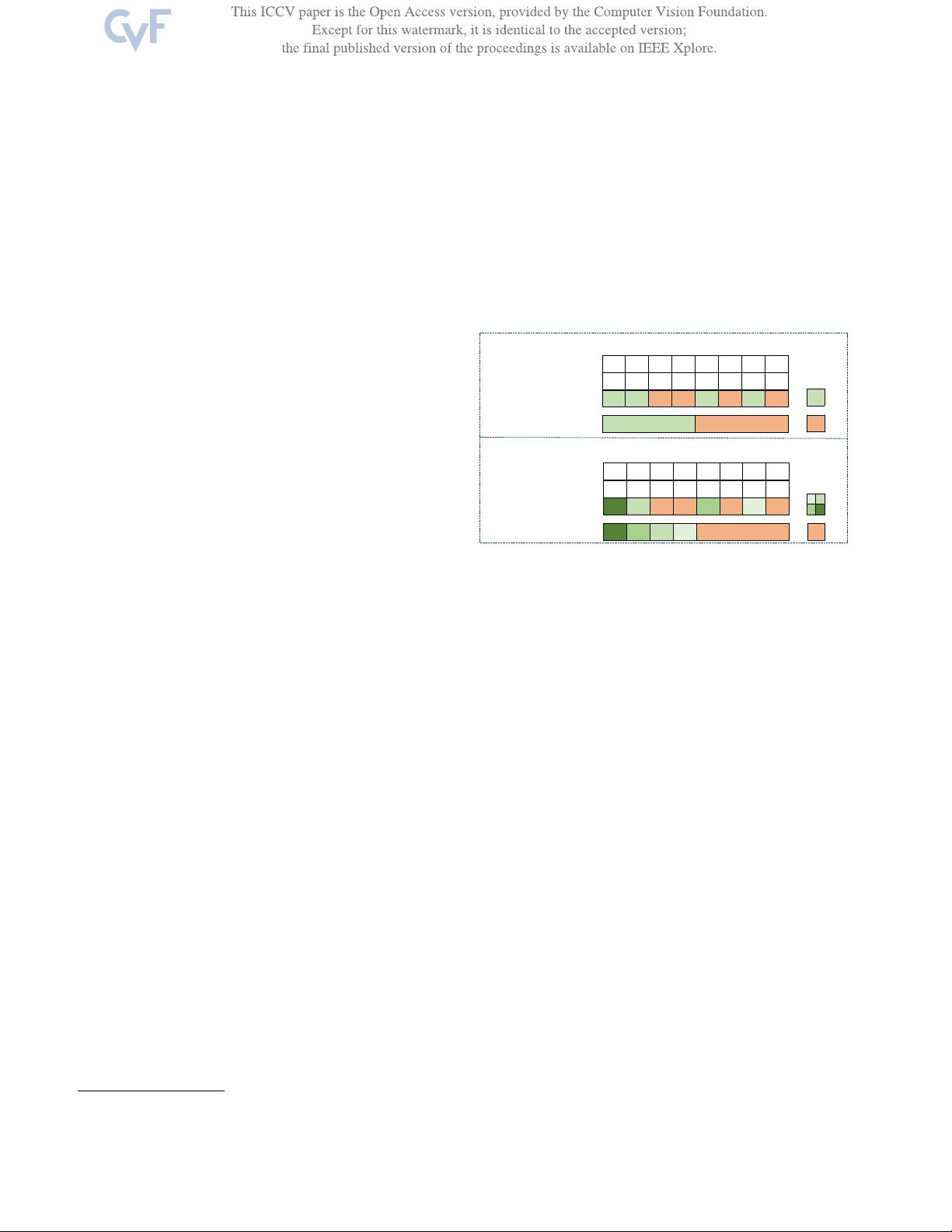

(b) Rank&Sort Loss: Rank (+) above (-) & Sort (+) wrt their IoU labels

Classification Logits

0 1 2 3 4 5 6 7

RS Loss Target Ranking (𝑖) 0 4 1 6

Anchor ID (𝑖 )

(+)

(-)

(+)

(-)

Figure 1. A ranking-based classification loss vs RS Loss. (a) En-

forcing to rank positives above negatives provides a useful objec-

tive for training, however, it ignores ordering among positives. (b)

Our RS Loss, in addition to raking positives above negatives, aims

to sort positives wrt. their continuous IoUs (positives: a green tone

based on its label, negatives: orange). We propose Identity Update

(Section 3), a reformulation of error-driven update with backprop-

agation, to tackle these ranking and sorting operations which are

difficult to optimize due to their non-differentiable nature.

CNN), weighted by a hyper-parameter λ

k

t

. In such formu-

lations, the number of hyper-parameters can easily exceed

10 [27], with additional hyper-parameters arising from task-

specific imbalance problems [28], e.g. the positive-negative

imbalance in the classification task, and if a cascaded ar-

chitecture is used (e.g. HTC [7] employs 3 R-CNNs with

different λ

k

t

). Thus, although such loss functions have led

to unprecedented successes, they require tuning, which is

time consuming, leads to sub-optimal solutions and makes

fair comparison of methods challenging.

Recently proposed ranking-based loss functions, namely

“Average Precision (AP) Loss” [6] and “average Locali-

sation Recall Precision (aLRP) Loss” [27], offer two im-

portant advantages over the classical score-based functions

(e.g. Cross-entropy Loss and Focal Loss [22]): (1) They di-

rectly optimize the performance measure (e.g. AP), thereby

providing consistency between training and evaluation ob-

jectives. This also reduces the number of hyper-parameters

as the performance measure (e.g. AP) does not typically

have any hyper-parameters. (2) They are robust to class-

3009

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜

信息提交成功

信息提交成功