Solutions for Introduction to algorithms

second edition

Philip Bille

The author of this document takes absolutely no responsibility for the contents. This is merely

a vague suggestion to a solution to some of the exercises posed in the book Introduction to algo-

rithms by Cormen, Leiserson and Rivest. It is very likely that there are many errors and that the

solutions are wrong. If you have found an error, have a better solution or wish to contribute in

some constructive way please send a message to beetle@it.dk.

It is important that you try hard to solve the exercises on your own. Use this document only as

a last resort or to check if your instructor got it all wrong.

Please note that the document is under construction and is updated only sporadically. Have

fun with your algorithms.

Best regards,

Philip Bille

Last update: December 9, 2002

1.2 − 2

Insertion sort beats merge sort when 8n

2

< 64n lg n, ⇒n < 8 lg n, ⇒2

n/8

< n. This is true for

2 6 n 6 43 (found by using a calculator).

Rewrite merge sort to use insertion sort for input of size 43 or less in order to improve the

running time.

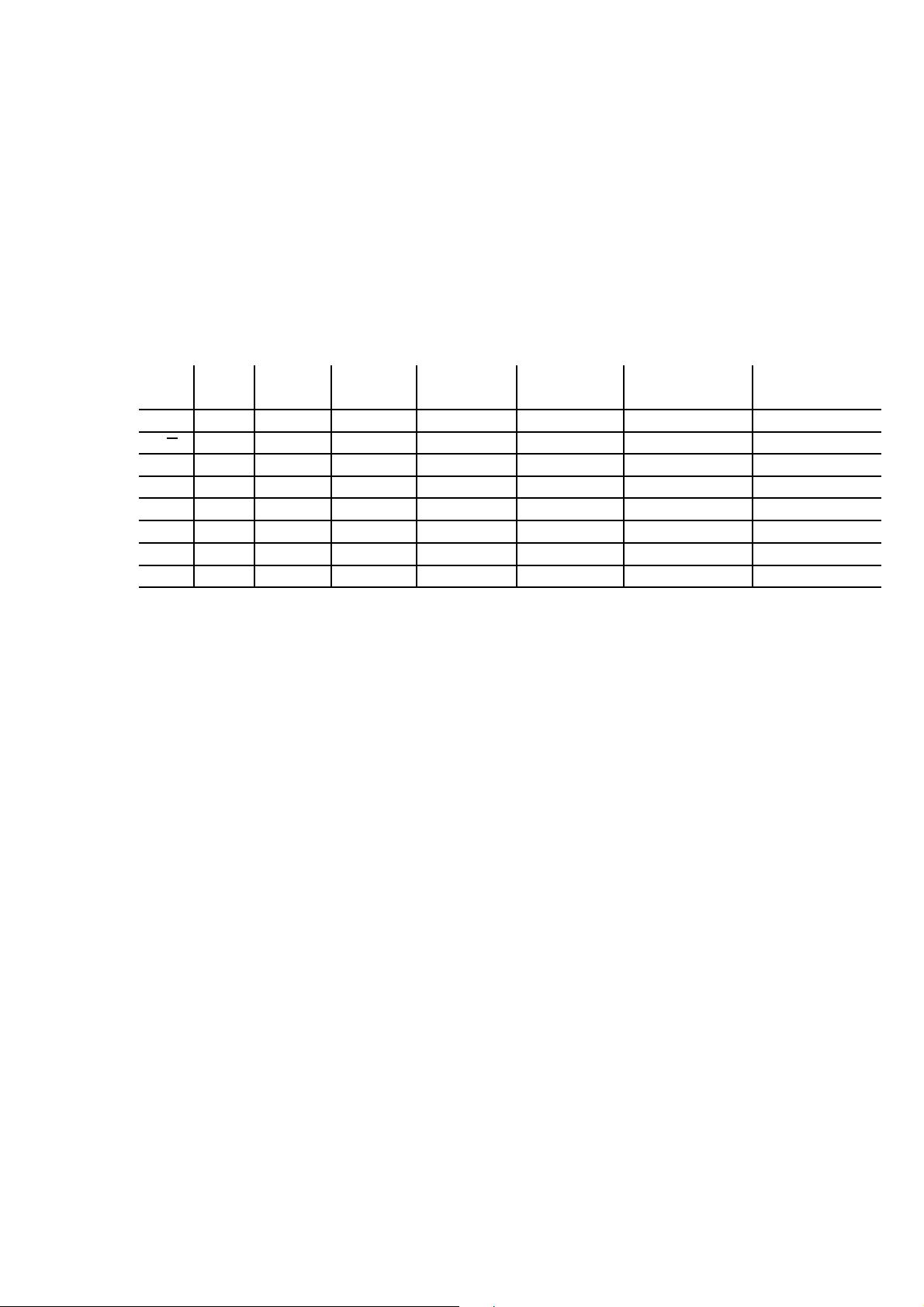

1 − 1

We assume that all months are 30 days and all years are 365.

1 1 1 1 1 1 1

second minute hour day month year century

lgn 2

10

6

2

6·10

7

2

36·10

8

2

864·10

8

2

2592·10

9

2

94608·10

10

2

94608·10

12

√

n 10

12

36 · 10

14

1296 · 10

16

746496 · 10

16

6718464 · 10

18

8950673664 · 10

20

8950673664 · 10

24

n 10

6

6 · 10

7

36 · 10

8

864 · 10

8

2592 · 10

9

94608 · 10

10

94608 · 10

12

n lg n 62746 2801417 ?? ?? ?? ?? ??

n

2

10

3

24494897 6 · 10

4

293938 1609968 30758413 307584134

n

3

10

2

391 1532 4420 13736 98169 455661

2

n

19 25 31 36 41 49 56

n! 9 11 12 13 15 17 18

2

2.1 − 2

In line 5 of INSERTION-SORT alter A[i] > key to A[i] < key in order to sort the elements in

nonincreasing order.

2.1 − 3

Algorithm 1 LINEAR-SEARCH(A, v)

Input: A = ha

1

, a

2

, . . . a

n

i and a value v.

Output: An index i such that v = A[i] or nil if v 6∈ A

for i ←1 to n do

if A[i] = v then

return i

end if

end for

return nil

As a loop invariant we say that none of the elements at index A[1, . . . , i − 1] are equal to v.

Clearly, all properties are fullfilled by this loop invariant.

2.2 − 1

n

3

/1000 − 100n

2

− 100n + 3 = Θ(n

3

).

2.2 − 2

Assume that FIND -MIN(A, r, s) returns the index of the smallest element in A between indices r

and s. Clearly, this can be implemented in O(s − r) time if r > s.

Algorithm 2 SELECTION-SORT(A)

Input: A = ha

1

, a

2

, . . . a

n

i

Output: sorted A.

for i ←1 to n − 1 do

j ←FIND -MIN(A, i, n)

A[j] ↔A[i]

end for

As a loop invariant we choose that A[1, . . . , i − 1] are sorted and all other elements are greater

than these. We only need to iterate to n − 1 since according to the invariant the nth element will

then the largest.

The n calls of FIND -MIN gives the following bound on the time complexity:

Θ

n

X

i=1

i

!

= Θ(n

2

)

This holds for both the best- and worst-case running time.

2.2 − 3

Given that each element is equally likely to be the one searched for and the element searched for is

present in the array, a linear search will on the average have to search through half the elements.

This is because half the time the wanted element will be in the first half and half the time it will

be in the second half. Both the worst-case and average-case of LINEAR-SEARCH is Θ(n).

3

2.2 − 4

One can modify an algorithm to have a best-case running time by specializing it to handle a best-

case input efficiently.

2.3 − 5

A recursive version of binary search on an array. Clearly, the worst-case running time is Θ(lg n).

Algorithm 3 BINARY-SEARCH(A, v, p, r)

Input: A sorted array A and a value v.

Output: An index i such that v = A[i] or nil.

if p > r and v 6= A[p] then

return nil

end if

j ←A[b(r − p)/2c]

if v = A[j] then

return j

else

if v < A[j] then

return BINARY-SEARCH(A, v, p, j)

else

return BINARY-SEARCH(A, v, j, r)

end if

end if

2.3 − 7

Give a Θ(n lg n) time algorithm for determining if there exist two elements in an set S whose sum

is exactly some value x.

Algorithm 4 CHECKSUMS(A, x)

Input: An array A and a value x.

Output: A boolean value indicating if there is two elements in A whose sum is x.

A ←SORT(A)

n ←length[A]

for i ←to n do

if A[i] > 0 and BINARY-SEARCH(A, A[i] − x, 1, n) then

return true

end if

end for

return false

Clearly, this algorithm does the job. (It is assumed that nil cannot be true in the if-statement.)

4

3.1 − 1

Let f(n), g(n) be asymptotically nonnegative. Show that max(f(n), g(n)) = Θ(f(n) + g(n)). This

means that there exists positive constants c

1

, c

2

and n

0

such that,

0 6 c

1

(f(n) + g(n)) 6 max(f(n), g(n)) 6 c

2

(f(n) + g(n))

for all n > n

0

.

Selecting c

2

= 1 clearly shows the third inequality since the maximum must be smaller than

the sum. c

1

should be selected as 1/2 since the maximum is always greater than the weighted

average of f(n) and g(n). Note the significance of the “asymptotically nonnegative” assumption.

The first inequality could not be satisfied otherwise.

3.1 − 4

2

n+1

= O(2

n

) since 2

n+1

= 2 · 2

n

6 2 · 2

n

! However 2

2n

is not O(2

n

): by definition we have

2

2n

= (2

n

)

2

which for no constant c asymptotically may be less than or equal to c ·2

n

.

3 − 4

Let f(n) and g(n) be asymptotically positive functions.

a. No, f(n) = O(g(n)) does not imply g(n) = O(f(n)). Clearly, n = O(n

2

) but n

2

6= O(n).

b. No, f(n) + g(n) is not Θ(min(f(n), g(n))). As an example notice that n + 1 6= Θ(min(n, 1)) =

Θ(1).

c. Yes, if f(n) = O(g(n)) then lg(f(n)) = O(lg(g(n))) provided that f(n) > 1 and lg(g(n)) > 1

are greater than or equal 1. We have that:

f(n) 6 cg(n) ⇒lg f(n) 6 lg(cg(n)) = lg c + lg g(n)

To show that this is smaller than b lg g(n) for some constant b we set lg c + lg g(n) = b lg g(n).

Dividing by lg g(n) yields:

b =

lg(c) + lg g(n)

lg g(n)

=

lg c

lg g(n)

+ 1 6 lg c + 1

The last inequality holds since lg g(n) > 1.

d. No, f(n) = O(g(n)) does not imply 2

f(n)

= O(2

g(n)

). If f(n) = 2n and g(n) = n we have that

2n 6 2 · n but not 2

2n

6 c2

n

for any constant c by exercise 3.1 − 4.

e. Yes and no, if f(n) < 1 for large n then f(n)

2

< f(n) and the upper bound will not hold.

Otherwise f(n) > 1 and the statement is trivially true.

f. Yes, f(n) = O(g(n)) implies g(n) = Ω(f(n)). We have f(n) 6 cg(n) for positive c and thus

1/c · f(n) 6 g(n).

g. No, clearly 2

n

66 c2

n/2

= c

√

2

n

for any constant c if n is sufficiently large.

h. By a small modification to exercise 3.1−1 we have that f(n)+o(f(n)) = Θ(max(f(n), o(f(n)))) =

Θ(f(n)).

5