

30 IEEE TRANSACTIONS ON BIOMETRICS, BEHAVIOR, AND IDENTITY SCIENCE, VOL. 6, NO. 1, JANUARY 2024

AFR-Net: Attention-Driven Fingerprint Recognition Network

Steven A. Grosz and Anil K. Jain, Life Fellow, IEEE

Abstract—The use of vision transformers (ViT) in computer vision is increasing due to their limited inductive biases (e.g., locality, weight sharing, etc.) and increased scalability compared to other deep learning models. This has led to some initial studies on the use of ViT for biometric recognition, including fingerprint recognition. In this work, we improve on these initial studies by i.) evaluating additional attention-based architectures, ii.) scaling to larger and more diverse training and evaluation datasets, and iii.) combining the complementary representations of attention-based and CNN-based embeddings for improved state-of-the-art (SOTA) fingerprint recognition (both authentication and identification). Our combined architecture, AFR-Net (Attention-Driven Fingerprint Recognition Network), outperforms several baseline models, including a SOTA commercial fingerprint system by Neurotechnology, Verifinger v12.3, across intra-sensor, cross-sensor, and latent-to-rolled fingerprint matching datasets. Additionally, we propose a realignment strategy using local embeddings extracted from intermediate feature maps within the networks to refine the global embeddings in low-certainty situations, which boosts the overall recognition accuracy significantly. This realignment strategy requires no additional training and can be applied as a wrapper to any existing deep learning network (including attention-based, CNN-based, or both) to boost its performance in a variety of computer vision tasks.

Index Terms—Fingerprint embeddings, fingerprint recognition, attention, vision transformers, fixed-length fingerprint representations, cross-sensor fingerprint recognition, sensor interoperability, universal representation.

I. INTRODUCTION

Automated fingerprint recognition systems have continued to permeate many facets of everyday life, appearing in many civilian and governmental applications over the last several decades [1]. As an example, India's Aadhaar civil registration system is used to authenticate approximately 70 million transactions per day, primarily with fingerprints.¹

¹ https://uidai.gov.in/aadhaar_dashboard/auth_trend.php

Manuscript received 2 May 2023; accepted 15 September 2023. Date of publication 19 September 2023; date of current version 8 March 2024. This article was recommended for publication by Associate Editor S. Schuckers upon evaluation of the reviewers' comments. (Corresponding author: Steven A. Grosz.)

The authors are with the Department of Computer Science and Engineering, Michigan State University, East Lansing, MI 48824 USA (e-mail: groszste@cse.msu.edu; jain@cse.msu.edu).

Digital Object Identifier 10.1109/TBIOM.2023.3317303

Due to the impressive accuracy of fingerprint recognition algorithms (0.626% False Non-Match Rate at a False Match Rate of 0.01% on the FVC-onGoing 1:1 hard benchmark [2]), researchers have turned their attention to addressing difficult edge cases where accurate recognition remains challenging, such as partial overlap between two candidate fingerprint images and cross-sensor interoperability (e.g., optical to capacitive, contact to contactless, latent to rolled fingerprints, etc.), as well as other practical problems like template encryption, privacy concerns, and matching latency for large-scale identification (gallery sizes on the order of tens or hundreds of millions).
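The accuracy figure above is an operating point: the False Non-Match Rate (FNMR) measured at the threshold that yields a fixed False Match Rate (FMR). As a minimal sketch, assuming similarity scores (higher means more alike) and using made-up toy scores rather than any data from the paper or FVC-onGoing, the threshold is taken from the ranked impostor scores and FNMR is then the fraction of genuine pairs that fall at or below it:

```python
def fnmr_at_fmr(genuine, impostor, target_fmr):
    """Return (threshold, achieved FMR, FNMR) for similarity scores.

    A pair is accepted as a match when its score is strictly above the threshold.
    The threshold is chosen from the impostor scores so that FMR <= target_fmr.
    """
    ranked = sorted(impostor, reverse=True)
    allowed = int(target_fmr * len(ranked))  # max number of impostor pairs we may accept
    threshold = ranked[allowed] if allowed < len(ranked) else float("-inf")
    fmr = sum(s > threshold for s in impostor) / len(impostor)
    fnmr = sum(s <= threshold for s in genuine) / len(genuine)
    return threshold, fmr, fnmr


# Toy scores for illustration only (hypothetical, not benchmark data).
genuine = [0.91, 0.85, 0.78, 0.40, 0.95]
impostor = [0.10, 0.22, 0.35, 0.05, 0.48, 0.30, 0.15, 0.27, 0.60, 0.12]

print(fnmr_at_fmr(genuine, impostor, target_fmr=0.1))  # (0.48, 0.1, 0.2)
```

With only ten impostor pairs the smallest meaningful FMR is 10%; estimating an FNMR at FMR = 0.01% requires at least tens of thousands of impostor comparisons, which is why such benchmarks use large numbers of cross-finger pairs.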

For many reasons, some of which are mentioned above (e.g., template encryption and latency), methods for extracting fixed-length fingerprint embeddings using various deep learning approaches have been proposed. Some of these methods were proposed for specific fingerprint-related tasks, such as minutiae extraction [3], [4] and fingerprint indexing [5], [6], whereas others were aimed at extracting a single "global" embedding [7], [8], [9]. Of these methods, the most common architecture employed is the convolutional neural network (CNN), often utilizing domain knowledge (e.g., minutiae [8]) and other techniques (e.g., specific loss functions, such as triplet loss [10]) to improve fingerprint recognition accuracy.
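Part of the latency argument for fixed-length embeddings is that comparing two templates reduces to a single vector operation, so 1:N identification becomes a ranking of such comparisons. The sketch below is illustrative only: it assumes cosine similarity as the comparison (a common choice for normalized embeddings, not taken from the cited methods), uses hypothetical 4-d vectors in place of real network outputs, and performs a brute-force search rather than any indexing scheme.

```python
import math


def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine_similarity(a, b):
    # For unit-length vectors, cosine similarity is just the dot product.
    a, b = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a, b))


def identify(probe, gallery, top_k=3):
    """Brute-force 1:N search: rank gallery identities by similarity to the probe."""
    scores = [(name, cosine_similarity(probe, emb)) for name, emb in gallery.items()]
    scores.sort(key=lambda item: item[1], reverse=True)
    return scores[:top_k]


# Hypothetical enrolled templates (real embeddings would be hundreds of dimensions).
gallery = {
    "subject_A": [0.9, 0.1, 0.0, 0.2],
    "subject_B": [0.1, 0.8, 0.3, 0.0],
    "subject_C": [0.0, 0.2, 0.9, 0.1],
}
probe = [0.85, 0.15, 0.05, 0.25]

for name, score in identify(probe, gallery, top_k=2):
    print(name, round(score, 3))  # subject_A is ranked first
```

Because every template has the same length, the gallery can be stored as a single matrix and searched with one matrix-vector product, which is what makes very large galleries tractable compared with pairwise minutiae matching.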

More recently, motivated by the success of attention-based Transformers [11] in natural language processing, the computer vision field has seen a surge in the use of the vision transformer (ViT) architecture for various computer vision tasks [12], [13], [14], [15].

In fact, two studies have already explored the use of ViT for learning discriminative fingerprint embeddings [16], [17], albeit with the following limitations: i.) the authors of [16] supervised their ViT model using a pretrained CNN as a teacher model and thus did not give the transformer architecture the freedom to learn its own representation, and ii.) the authors of [17] were limited in the data and choice of loss function used to supervise their transformer model, thereby limiting the fingerprint recognition accuracy compared to the baseline ResNet50 model. Nonetheless, the authors of [17] did note the complementary nature of the features learned by the CNN-based ResNet50 model and the attention-based ViT model. This motivated us to evaluate additional attention-based models that bridge the gap between purely CNN-based and purely attention-based models, in order to leverage the benefits of each. Toward this end, we evaluate two ViT variants (vanilla ViT [12] and Swin [15]) along with two variants of a CNN model [18] (ResNet50 and ResNet101) for fingerprint recognition. In addition, we propose our own architecture, AFR-Net (Attention-Driven Fingerprint Recognition Network), consisting of a shared feature extractor and parallel CNN and attention classification layers.

2637-6407 © 2023 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See https://www.ieee.org/publications/rights/index.html for more information.

Authorized licensed use limited to: SICHUAN UNIVERSITY. Downloaded on November 04,2024 at 13:06:08 UTC from IEEE Xplore. Restrictions apply.

GROSZ AND JAIN: AFR-Net 31

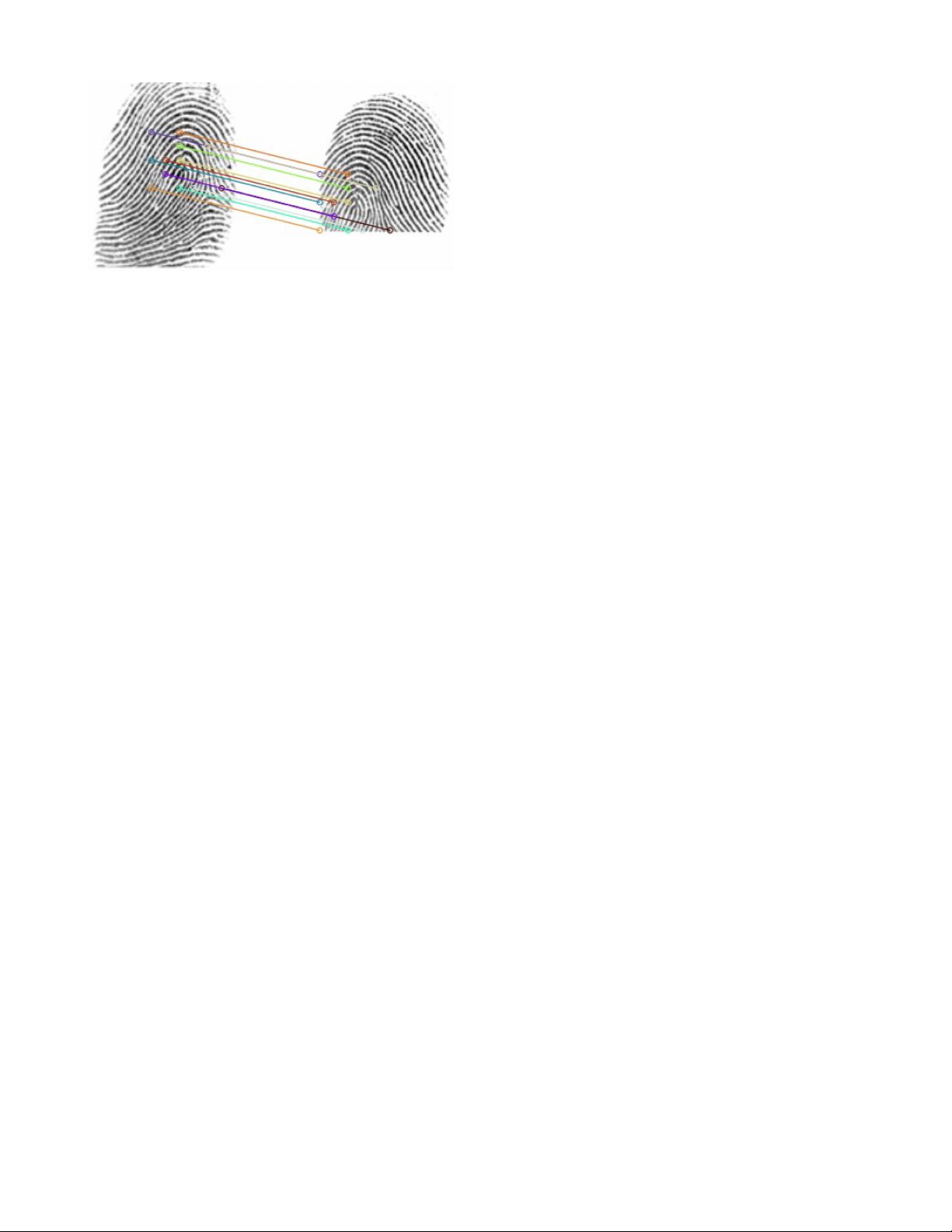

Fig. 1. Example correspondence between local features extracted from the

intermediate feature maps of our AFR-Net model for two images of the same

finger. Note, these local features are not necessarily the same as minutiae

points, which are commonly used in fingerprint recognition.

Even though these models are trained to extract a single, global embedding representing the identity of a given fingerprint image, we observe that for both CNN-based and attention-based models, the intermediate feature maps encode local features that are also useful for relating two candidate fingerprint images. Correspondence between these local features can be used to guide the network in placing attention on overlapping regions of the images in order to make a more accurate determination of whether the images are from the same finger. Additionally, these local features are useful in explaining the similarity between two candidate images by directly visualizing the corresponding keypoints, as shown in Figure 1.
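This section does not spell out how correspondences such as those in Fig. 1 are computed. One generic and widely used way to pair local descriptors from two images is mutual nearest-neighbour matching under cosine similarity. The sketch below assumes each image yields a list of keypoint locations with one descriptor vector each (hypothetical toy values here) and should not be read as AFR-Net's actual procedure.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mutual_nearest_neighbors(desc1, desc2, min_sim=0.0):
    """Return index pairs (i, j) where desc1[i] and desc2[j] are each other's best match."""
    sim = [[cosine(a, b) for b in desc2] for a in desc1]
    # Best match in image 2 for each descriptor of image 1, and vice versa.
    best12 = [max(range(len(desc2)), key=lambda j: row[j]) for row in sim]
    best21 = [max(range(len(desc1)), key=lambda i: sim[i][j]) for j in range(len(desc2))]
    return [
        (i, j)
        for i, j in enumerate(best12)
        if best21[j] == i and sim[i][j] >= min_sim
    ]


# Hypothetical keypoints (x, y) and local descriptors taken from two feature maps.
kp1 = [(10, 12), (40, 35), (70, 20)]
desc1 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
kp2 = [(42, 30), (12, 15), (55, 60)]
desc2 = [[0.0, 0.9, 0.1], [0.95, 0.05, 0.0], [0.5, 0.5, 0.0]]

for i, j in mutual_nearest_neighbors(desc1, desc2):
    print(kp1[i], "<->", kp2[j])  # pairs that could be drawn as in Fig. 1
```

Requiring the match to be mutual discards one-sided matches (here, the third descriptor of the first image), which tends to suppress spurious correspondences from non-overlapping regions.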

One remaining concern with regard to deep learning-based fingerprint matchers is their generalization across different fingerprint sensing technologies (e.g., optical, capacitive, etc.), fingerprint readers (e.g., CrossMatch, GreenBit, etc.), and fingerprint impression types (e.g., rolled, plain, contactless, etc.). This problem is often referred to as sensor interoperability, which has received some attention in recent years [19], [20], [21], [22]. In this paper, we demonstrate the generalizability of our learned representations via extensive experiments across a wide range of fingerprint sensors and types. As we show in the ablation study in Section IV-E, much of the challenge of sensor interoperability is mitigated by training on a large, diverse training dataset; however, additional performance gains are achieved by incorporating both the complementary CNN and attention-based features into our network.

More concisely, the contributions of this research are as follows:

• Analysis of various attention-based architectures for fingerprint recognition.
• Novel architecture for fingerprint recognition, AFR-Net, which incorporates attention layers into the ResNet architecture.
• State-of-the-art (SOTA) fingerprint recognition performance (authentication and identification) across several diverse benchmark datasets, including intra-sensor, cross-sensor, contact to contactless, and latent to rolled fingerprint matching.
• Novel use of local embeddings extracted from intermediate feature maps to improve both the recognition accuracy and the explainability of the model.
• Ablation analysis demonstrating the importance of each aspect of our model, including choice of loss function, training dataset size, use of the spatial alignment module, use of both classification heads, and use of local embeddings to refine the global embeddings.

II. RELATED WORK

Here we briefly discuss the prior literature on deep learning-based fingerprint recognition and the use of vision transformer models for computer vision. For a more in-depth discussion of these topics, refer to one of the many survey papers available (e.g., [23] for deep learning in biometrics and [24] for the use of transformers in vision).

A. Deep Learning for Fingerprint Recognition

Over the last decade, deep learning has seen a plethora of applications in fingerprint recognition, including minutiae extraction [3], [4], fingerprint indexing [5], [6], presentation attack detection [25], [26], [27], [28], synthetic fingerprint generation [29], [30], [31], [32], and fixed-length fingerprint embeddings for recognition [7], [8], [9]. For purposes of this paper, we limit our discussion to fixed-length (global) embeddings for fingerprint recognition.

Among the first deep learning approaches for extracting global fingerprint embeddings was that of Li et al. [7], which used a fully convolutional neural network to produce a 256-dimensional embedding. The authors of [8] then showed improved performance of their fixed-length embedding network by incorporating minutiae domain knowledge as additional supervision. Similarly, Lin and Kumar incorporated additional fingerprint domain knowledge (minutiae and core point regions) into a multi-Siamese CNN for contact to contactless fingerprint matching [9]. More recently, [16] and [17] proposed the use of the vision transformer architecture for extracting discriminative fixed-length fingerprint embeddings, both showing that incorporating minutiae domain knowledge into ViT improved performance.

B. Vision Transformers for Biometric Recognition

Transformers have found numerous applications across the computer vision field in the past couple of years, since they were first introduced for computer vision by Dosovitskiy et al. in 2021 [12]. The general principle of transformers for computer vision is the use of the attention mechanism for aggregating sets of features across the entire image or within local neighborhoods of the image. The notion of attention was originally introduced in 2015 for sequence modeling by Bahdanau et al. [33] and has been shown to be a useful general mechanism for operating on a set of features. Today, numerous variants of ViT have been proposed for a wide range of computer vision tasks, including image recognition, generative modeling, multi-modal tasks, video processing, low-level vision, etc. [24].
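The aggregation described above is usually implemented as scaled dot-product attention, softmax(QKᵀ/√d)V: each output is a weighted average of value vectors, weighted by how similar its query is to every key. The following is a minimal single-head sketch in plain Python with toy 2-d tokens; it omits the learned projections that a real transformer layer applies to obtain Q, K and V, and the optional `allowed` mask is a hypothetical parameter that illustrates restricting attention to a local neighborhood.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values, allowed=None):
    """Scaled dot-product attention over lists of vectors.

    allowed[i][j] (optional) restricts query i to a subset of keys, e.g. a local window.
    """
    d = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        idx = [j for j in range(len(keys)) if allowed is None or allowed[i][j]]
        weights = softmax([scores[j] for j in idx])
        out = [0.0] * len(values[0])
        for w, j in zip(weights, idx):
            out = [o + w * v for o, v in zip(out, values[j])]
        outputs.append(out)
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy "patch features"

# Global self-attention: every token attends to all tokens.
print(attention(tokens, tokens, tokens))

# Local attention: each token attends only to itself and its immediate neighbours.
local = [[abs(i - j) <= 1 for j in range(3)] for i in range(3)]
print(attention(tokens, tokens, tokens, allowed=local))
```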

Some recent works have explored the use of transformers for biometric recognition across several modalities, including face [34], finger vein [35], fingerprint [16], [17], ear [36], gait [37], and keystroke recognition [38]. In this work, we improve upon these previous uses of transformers by evaluating additional attention-based architectures for extracting global fingerprint embeddings.

Fig. 2. Overview of the AFR-Net architecture. First, input fingerprint images are passed through a spatial alignment module for better alignment of the two fingerprints under comparison, then passed through a shared feature extractor, followed by two classification heads (one CNN-based and the other attention-based). For our implementation, we followed the ResNet50 architecture for our backbone and CNN classification head and used 12 multi-headed attention transformer encoder blocks for the attention-based classification head.

III. AFR-NET: ATTENTION-DRIVEN FINGERPRINT RECOGNITION MODEL

Our approach consists of i.) investigating several baseline CNN and attention-based models for fingerprint recognition, ii.) fusing a CNN-based architecture with attention into a single model to leverage the complementary representations of each, iii.) a strategy to use intermediate local feature maps to refine global embeddings and reduce uncertainty in challenging pairwise fingerprint comparisons, and iv.) the use of a spatial alignment module to improve recognition performance. Details of each component of our approach are given in the following sections.

A. Baseline Methods

First, we improve on the initial studies [16], [17] applying ViT to fingerprint recognition to better establish a fair baseline performance of ViT compared to CNN-based models. This is accomplished by removing the limitations of the previous studies in terms of choice of supervision and size of the training dataset used to learn the parameters of the models. We then compare ViT with two variants of the ResNet CNN-based architecture, ResNet50 and ResNet101. For our specific choice of ViT, we decided on the small version with a patch size of 16, 6 attention heads, and a depth of 12 layers. We selected this architecture as it presents an adequate tradeoff between speed and accuracy compared to other ViT variants. In addition, we compare the performance of a popular ViT successor, Swin, which uses a hierarchical structure and shifted windows for computing attention within local regions of the image. Specifically, we used the small Swin architecture with a patch size of 4, a window size of 7, and an embedding dimension of 96.
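To make these configurations concrete, the sketch below works out the token and window counts they imply. The input resolution is not stated in this excerpt, so 224 × 224 is an assumption (the default for the standard ImageNet ViT and Swin models). The 384-d ViT-Small width and the Swin rule of halving resolution and doubling channels at each stage are properties of the standard models, not details given in this paragraph.

```python
def vit_tokens(image_size, patch_size):
    """Number of non-overlapping patch tokens a ViT produces (excluding any class token)."""
    if image_size % patch_size:
        raise ValueError("image side must be divisible by the patch size")
    per_side = image_size // patch_size
    return per_side * per_side


def vit_head_dim(embed_dim, num_heads):
    """Width of each attention head when the embedding is split evenly across heads."""
    if embed_dim % num_heads:
        raise ValueError("embedding dimension must be divisible by the number of heads")
    return embed_dim // num_heads


def swin_stages(image_size, patch_size, window_size, embed_dim, num_stages=4):
    """(feature-map side, channels, number of windows) for each stage of a Swin-style hierarchy.

    After each stage, patch merging halves the spatial side and doubles the channels.
    Assumes every side is divisible by the window size (real Swin pads otherwise).
    """
    side, channels = image_size // patch_size, embed_dim
    stages = []
    for _ in range(num_stages):
        stages.append((side, channels, (side // window_size) ** 2))
        side, channels = side // 2, channels * 2
    return stages


IMAGE_SIZE = 224  # assumption: the input resolution is not given in this excerpt

print(vit_tokens(IMAGE_SIZE, 16))  # 196 patch tokens (a 14 x 14 grid)
print(vit_head_dim(384, 6))        # 64 dimensions per head, assuming the ViT-Small width of 384
print(swin_stages(IMAGE_SIZE, 4, 7, 96))
# [(56, 96, 64), (28, 192, 16), (14, 384, 4), (7, 768, 1)]
```

Under these assumptions, the ViT compares all 196 tokens with one another in every layer, whereas the first Swin stage compares tokens only within each 7 × 7 window (49 tokens), which is what keeps attention affordable on its finer 56 × 56 grid.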

For additional baseline comparisons with previous methods, we included the latest version of the commercial-off-the-shelf (COTS) fingerprint recognition system from Neurotechnology, Verifinger v12.3,² and DeepPrint [8], a fingerprint recognition network based on an Inception-v4 backbone that incorporates fingerprint domain knowledge into the learning framework. According to the FVC-onGoing competition, Verifinger is the top-performing algorithm for the 1:1 fingerprint verification benchmark [2], and DeepPrint has also shown performance competitive with Verifinger on some benchmark datasets [8].

B. Proposed AFR-Net Architecture

Based on previous research suggesting the complementary nature of ViT and ResNet embeddings, we were motivated to merge the two into a single architecture, referred to as AFR-Net. As shown in Figure 2, AFR-Net consists of a spatial alignment module, a shared CNN feature encoder, a CNN classification head, and an attention classification head. The shared alignment module and feature encoder greatly reduce the number of parameters compared to the fusion of two separate networks and also allow the two classification heads to be trained jointly.

Due to the two classification heads, we have two bottleneck classification layers, which map each of the 384-d embeddings, $Z_c$ and $Z_a$, into a softmax output representing the probability of a sample belonging to one of $N$ classes (identities) in our training dataset. We employ the Additive Angular Margin (ArcFace) loss function to encourage intra-class compactness and inter-class discrepancy of the embeddings of each branch [39]. Through an ablation study, presented in Section IV-E, we find that despite the relatively limited use of this loss function in previous fingerprint recognition papers [17], [40], the ArcFace loss function makes an enormous difference in the performance of our model.
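For reference, ArcFace [39] normalizes both the embedding and each class weight vector so that every logit is s·cos(θ_j), then adds an angular margin m to the angle of the ground-truth class, using s·cos(θ_y + m), before applying softmax cross-entropy. A minimal single-sample sketch follows. The scale s = 64 and margin m = 0.5 are the defaults reported in the ArcFace paper and are assumptions here, since this excerpt does not give AFR-Net's values; the toy 2-d vectors stand in for a 384-d branch embedding such as $Z_c$ or $Z_a$ and the $N$ class weights. Production implementations also handle the case θ_y + m > π, which this sketch omits.

```python
import math


def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def arcface_loss(embedding, class_weights, label, s=64.0, m=0.5):
    """ArcFace (additive angular margin) loss for one sample.

    embedding: one branch's feature vector; class_weights: one weight vector per class.
    Note: omits the fallback used in practice when theta + m exceeds pi.
    """
    z = normalize(embedding)
    logits = []
    for j, w in enumerate(class_weights):
        cos_theta = sum(a * b for a, b in zip(z, normalize(w)))
        cos_theta = max(-1.0, min(1.0, cos_theta))  # guard acos against rounding error
        if j == label:
            # Make the target class harder by adding the margin m to its angle.
            cos_theta = math.cos(math.acos(cos_theta) + m)
        logits.append(s * cos_theta)
    # Numerically stable softmax cross-entropy on the scaled logits.
    top = max(logits)
    log_sum = top + math.log(sum(math.exp(x - top) for x in logits))
    return log_sum - logits[label]


embedding = [0.6, 0.8]               # toy stand-in for a 384-d branch embedding
weights = [[1.0, 0.0], [0.0, 1.0]]   # toy stand-in for N class weight vectors
print(arcface_loss(embedding, weights, label=0))
print(arcface_loss(embedding, weights, label=0, m=0.0))  # no margin, for comparison
```

Because the margin lowers the target logit, the loss stays high until the embedding's angle to its own class center is smaller than its angle to every other class by roughly m, which is the mechanism behind the intra-class compactness and inter-class separation described above.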

² https://neurotechnology.com/verifinger.html

