
1382 IEEE TRANSACTIONS ON AUDIO, SPEECH, AND LANGUAGE PROCESSING, VOL. 19, NO. 5, JULY 2011

Single-Channel and Multi-Channel Sinusoidal Audio Coding Using Compressed Sensing

Anthony Griffin, Toni Hirvonen, Christos Tzagkarakis, Athanasios Mouchtaris, Member, IEEE, and Panagiotis Tsakalides, Member, IEEE

Abstract—Compressed sensing (CS) samples signals at a much lower rate than the Nyquist rate if they are sparse in some basis. In this paper, the CS methodology is applied to sinusoidally modeled audio signals. As this model is sparse by definition in the frequency domain (being equal to the sum of a small number of sinusoids), we investigate whether CS can be used to encode audio signals at low bitrates. In contrast to encoding the sinusoidal parameters (amplitude, frequency, phase) as current state-of-the-art methods do, we propose encoding a few randomly selected samples of the time-domain description of the sinusoidal component (per signal segment). The potential of applying compressed sensing both to single-channel and multi-channel audio coding is examined. The listening test results are encouraging, indicating that the proposed approach can achieve performance comparable to that of state-of-the-art methods. Given that CS can lead to novel coding systems where the sampling and compression operations are combined into one low-complexity step, the proposed methodology can be considered an important step towards applying the CS framework to audio coding applications.

Index Terms—Audio coding, compressed sensing (CS), signal reconstruction, signal sampling, sinusoidal model.

I. INTRODUCTION

The growing demand for audio content far outpaces the corresponding growth in users' storage space or bandwidth. Thus, there is a constant incentive to further improve the compression of audio signals. This can be accomplished either by applying compression algorithms to the actual samples of a digital audio signal, or by first fitting a signal model and then encoding the model parameters. In this paper, we propose a novel method for encoding the parameters of the sinusoidal model.

Manuscript received December 24, 2009; revised May 05, 2010; accepted October 17, 2010. Date of publication November 09, 2010; date of current version May 13, 2011. This work was supported in part by the Marie Curie TOK-DEV “ASPIRE” grant and in part by the PEOPLE-IAPP “AVID-MODE” grant within the 6th and 7th European Community Framework Programs, respectively. The associate editor coordinating the review of this manuscript and approving it for publication was Dr. Patrick Naylor.

A. Griffin, C. Tzagkarakis, A. Mouchtaris, and P. Tsakalides are with the Institute of Computer Science, Foundation for Research and Technology-Hellas (FORTH-ICS), and Department of Computer Science, University of Crete, Heraklion, Crete GR-70013, Greece (e-mail: agriffin@ics.forth.gr; tzagarak@ics.forth.gr; mouchtar@ics.forth.gr; tsakalid@ics.forth.gr).

T. Hirvonen was with the Institute of Computer Science, Foundation for Research and Technology-Hellas (FORTH-ICS), Heraklion, Crete GR-70013, Greece. He is now with Dolby Laboratories, Stockholm SE-113 30, Sweden (e-mail: toni.hirvonen@dolby.com).

Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org.

Digital Object Identifier 10.1109/TASL.2010.2090656

The sinusoidal model represents an audio signal using a small number of time-varying sinusoids [1]. The remaining error signal, often termed the residual signal, can also be modeled to further improve the subjective quality of the sinusoidal model [2]. The sinusoidal model allows for a compact representation of the original signal and for efficient encoding and quantization. Extending the sinusoidal model to multi-channel audio applications has also been proposed (e.g., [3]).

Various methods for quantizing the sinusoidal model parameters (amplitude, phase, and frequency) have been proposed in the literature. Initial methods in this area quantized the parameters independently of each other [4]–[8]. The frequency locations of the sinusoids were quantized based on research into the just noticeable differences in frequency (JNDF), while the amplitudes were quantized based either on the just noticeable differences in amplitude (JNDA) or on the estimated frequency masking thresholds. In these initial quantizers, phases were uniformly quantized, or were not quantized at all for low-bitrate applications. More recent quantizers jointly encode all the sinusoidal parameters based on high-rate theory and can be expressed analytically [9]–[12]. The bitrates achieved by these methods can be further reduced using differential coding, e.g., [13]. Note that all the aforementioned methods encode the sinusoidal parameters independently for each short-time segment of the audio signal. Extensions of these methods, in which the sinusoidal parameters are jointly quantized across neighboring segments, have recently been proposed, e.g., [14].
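To make the simplest of these schemes concrete, the sketch below uniformly quantizes a phase with a hypothetical `bits` parameter. The function names and the rounding convention are our own assumptions and are not taken from [4]–[8]. The phase is wrapped to [-π, π) and mapped to one of 2^bits equally spaced levels, so the circular error never exceeds half a quantization step.

```python
import math


def quantize_phase(phi, bits):
    """Uniformly quantize a phase (radians) to one of 2**bits levels on [-pi, pi).

    Returns the integer index that would be transmitted and the decoded phase.
    """
    levels = 2 ** bits
    step = 2 * math.pi / levels
    wrapped = (phi + math.pi) % (2 * math.pi) - math.pi  # map phi into [-pi, pi)
    # Nearest level; an index equal to `levels` wraps to 0, since pi and -pi coincide.
    index = round((wrapped + math.pi) / step) % levels
    return index, -math.pi + index * step


def circular_error(a, b):
    """Smallest absolute angular difference between two phases."""
    return abs((a - b + math.pi) % (2 * math.pi) - math.pi)


# Usage: a 3-bit quantizer has a step of pi/4, so the error never exceeds pi/8.
for phi in (-3.0, -0.2, 1.0, 3.1):
    index, decoded = quantize_phase(phi, bits=3)
    print(f"phi={phi:+.2f} -> index {index}, decoded {decoded:+.4f}, "
          f"error {circular_error(phi, decoded):.4f}")
```

Working on the circle rather than on the real line is what lets a phase just below π and one just above -π share the same reconstruction level.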

In this paper, we propose using the emerging compressed sensing (CS) [15], [16] methodology to encode and compress sinusoidally modeled audio signals. Compressed sensing seeks to represent a signal using a number of linear, non-adaptive measurements. Usually, the number of measurements is much lower than the number of samples needed if the signal is sampled at the Nyquist rate. To correctly reconstruct the original signal, CS requires that the signal be sparse in some basis, in the sense that it is a linear combination of a small number of basis functions. Clearly, the sinusoidally modeled part of an audio signal is a sparse signal, and it is thus natural to ask how CS might be used to encode such a signal. Extending our recent work in [17], [18], we investigate how CS can be applied to encoding the time-domain signal of the model instead of the sinusoidal model parameters, as state-of-the-art methods do. We extend our previous work by providing more results for the single-channel audio coding case, and we also propose here a system which applies CS to sinusoidally modeled multi-channel audio. At the same time, the paper proposes a psychoacoustic modeling analysis for the selection of sinusoidal components in a multi-channel audio recording, which provides a very compact description of multi-channel audio and is very efficient for low-bitrate applications.

1558-7916/$26.00 © 2010 IEEE

GRIFFIN et al.: SINGLE-CHANNEL AND MULTI-CHANNEL SINUSOIDAL AUDIO CODING USING CS 1383

This is, to our knowledge, the first attempt to exploit the sparse representation of the sinusoidal model for audio signals using compressed sensing, and many interesting and important issues are raised in this context. The most important problems encountered in this work are summarized in this paragraph. The encoding operation is based on randomly sampling the time-domain sinusoidal signal, which is obtained after applying the sinusoidal model to a monophonic or multi-channel audio signal. The random samples can be further encoded (here scalar quantization is suggested, but other methods could be used to improve performance). One issue that arises is that, because the encoding is performed in the time domain rather than the Fourier domain, the quantization error is not localized in frequency, and it is therefore more complicated to predict the audio quality of the reconstructed signal; this was addressed by suggesting a spectral whitening procedure for the sinusoidal amplitudes. Another issue is that the frequencies estimated by the sinusoidal model should correspond to single bins of the discrete Fourier transform, or else the sparsity requirement cannot be satisfied. In practice, this translates into encoding the sinusoidal parameters selected from a peak-picking procedure (possibly including a psychoacoustic model), without further refinement of the estimated frequencies. This important problem can be addressed (as explained in detail later) by employing zero-padding in the Fourier analysis (i.e., improving the frequency resolution by shortening the bin spacing), and also by employing interpolation techniques in the decoder (since sparsity is not needed after the CS decoding). The improved frequency resolution increases the number of CS measurements needed, and consequently the bitrate; this problem was alleviated by employing a process termed “frequency mapping.” Another important problem addressed in this paper is that CS theory allows signal reconstruction with high probability but not with certainty; three different ways of overcoming this problem (termed “operating modes”) are suggested in this paper. In summary, several practical problems were raised during our research. By providing a complete end-to-end design of a CS-based sinusoidal coding system, this paper both clarifies several limitations of applying CS to audio coding and presents ways to overcome them; in this sense, we believe that this paper will be of interest to researchers working on applying CS theory to signal coding.
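The random-sampling encoder and CS decoder described in this paragraph can be sketched as follows, under simplifying assumptions of our own: a real frame built from on-bin sinusoids, a complex DFT sparsity basis, unquantized measurements (the paper suggests scalar quantization), and orthogonal matching pursuit (OMP) as the reconstruction algorithm. OMP is a standard greedy CS solver and not necessarily the one used in the paper. The function names, the seed-based sampling pattern, and the encoder-side retry loop (one simple way to cope with reconstruction that succeeds only with high probability) are hypothetical illustrations and are not the paper's operating modes.

```python
import cmath
import math
import random


def atom(k, n, N):
    """Sparsity-basis entry psi_k(n) = exp(2j*pi*k*n/N) / sqrt(N) (inverse DFT)."""
    return cmath.exp(2j * math.pi * k * n / N) / math.sqrt(N)


def solve(A, b):
    """Solve the small square complex system A x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    aug = [list(row) + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[p] = aug[p], aug[c]
        for r in range(c + 1, n):
            f = aug[r][c] / aug[c][c]
            for j in range(c, n + 1):
                aug[r][j] -= f * aug[c][j]
    x = [0j] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][j] * x[j] for j in range(r + 1, n))) / aug[r][r]
    return x


def sample_rows(N, M, seed):
    """Random sampling pattern, shared by encoder and decoder through the seed."""
    return sorted(random.Random(seed).sample(range(N), M))


def omp_decode(rows, y, N, max_atoms, tol=1e-9):
    """Reconstruct all N samples from the M measured ones with OMP over DFT atoms."""
    support, coef, residual = [], [], list(y)
    while len(support) < max_atoms and math.sqrt(sum(abs(r) ** 2 for r in residual)) > tol:
        # Greedy step: add the atom most correlated with the current residual.
        support.append(max(range(N), key=lambda k: abs(
            sum(atom(k, n, N).conjugate() * r for n, r in zip(rows, residual)))))
        # Least-squares coefficients on the support via the normal equations.
        cols = [[atom(k, n, N) for n in rows] for k in support]
        gram = [[sum(a.conjugate() * b for a, b in zip(ci, cj)) for cj in cols] for ci in cols]
        rhs = [sum(a.conjugate() * v for a, v in zip(ci, y)) for ci in cols]
        coef = solve(gram, rhs)
        residual = [v - sum(c * col[i] for c, col in zip(coef, cols)) for i, v in enumerate(y)]
    # Synthesize every sample of the frame from the recovered sparse spectrum.
    return [sum(c * atom(k, n, N) for k, c in zip(support, coef)).real for n in range(N)]


def encode(x, M, tries=10, tol=1e-6):
    """Keep M random samples; redraw the pattern if decoding them locally fails."""
    N = len(x)
    for seed in range(tries):
        rows = sample_rows(N, M, seed)
        y = [x[n] for n in rows]
        x_hat = omp_decode(rows, y, N, max_atoms=M // 2)
        if max(abs(u - v) for u, v in zip(x, x_hat)) < tol:
            return seed, y
    raise RuntimeError("no sampling pattern reconstructed the frame")


# Usage: a 64-sample frame of three on-bin sinusoids (bin, amplitude, phase), 32 measurements.
N, M = 64, 32
params = [(5, 1.0, 0.3), (12, 0.5, -1.1), (20, 0.25, 2.0)]
x = [sum(a * math.cos(2 * math.pi * k * n / N + p) for k, a, p in params) for n in range(N)]
seed, y = encode(x, M)  # transmitted: the seed and the M sample values
x_hat = omp_decode(sample_rows(N, M, seed), y, N, max_atoms=M // 2)
print(f"seed {seed}: {M}/{N} samples kept, "
      f"max error {max(abs(u - v) for u, v in zip(x, x_hat)):.1e}")
```

Each real on-bin sinusoid occupies two conjugate DFT bins, so this frame is 6-sparse. Keeping fewer samples or adding more sinusoids typically makes failed reconstructions more frequent, which is what the retry loop guards against in this toy setting.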

The paper deals only with encoding the sinusoidal part of the model (i.e., the residual signal is not treated). Apart from the proposed method, the authors are aware only of the work in [19] on applying the CS methodology to audio coding in general. While our focus in this paper is on exploiting the sinusoidal model in this context, the goal in [19] was to exploit the excitation/filter model using CS.

The importance of applying CS theory to audio coding lies mainly in the applicability of CS to sensor network applications. Sensor-based local encoding of audio signals could enable a variety of audio-related applications, such as environmental monitoring, recording audio in large outdoor venues, and so forth. This paper provides an important step towards applying CS to audio coding, at least in low-bitrate audio applications where the sinusoidal part of an audio signal provides sufficient quality. It is shown here for multi-channel audio signals that, except for one primary (reference) audio channel, a simple low-complexity system can be used to encode the sinusoidal model for all remaining channels of the multi-channel recording. This is an important result given that research in CS is still at an early stage, and its practical value in coding applications is still unclear.

The remainder of the paper is organized as follows. In Section II, background information about the sinusoidal model is given, and a novel psychoacoustic model for sinusoidal modeling of multi-channel audio signals is proposed. Background information about the CS methodology is presented in Section III. Section IV discusses in detail the practical implementation of the method, covering issues such as alleviating the effects of quantization (Section IV-A); bitrate improvements (Section IV-B); quantization and entropy coding (Section IV-C); CS reconstruction algorithms (Section IV-D); achieved bitrates (Section IV-E); operating modes (Section IV-F); and complexity (Section IV-G). The discussion of Section IV is then extended to the multi-channel case in Section V. In Section VI, results from listening tests demonstrate the audio quality achieved with the proposed coding scheme for the single-channel (Section VI-A) and the multi-channel case (Section VI-B), while concluding remarks are made in Section VII.

II. SINUSOIDAL MODEL

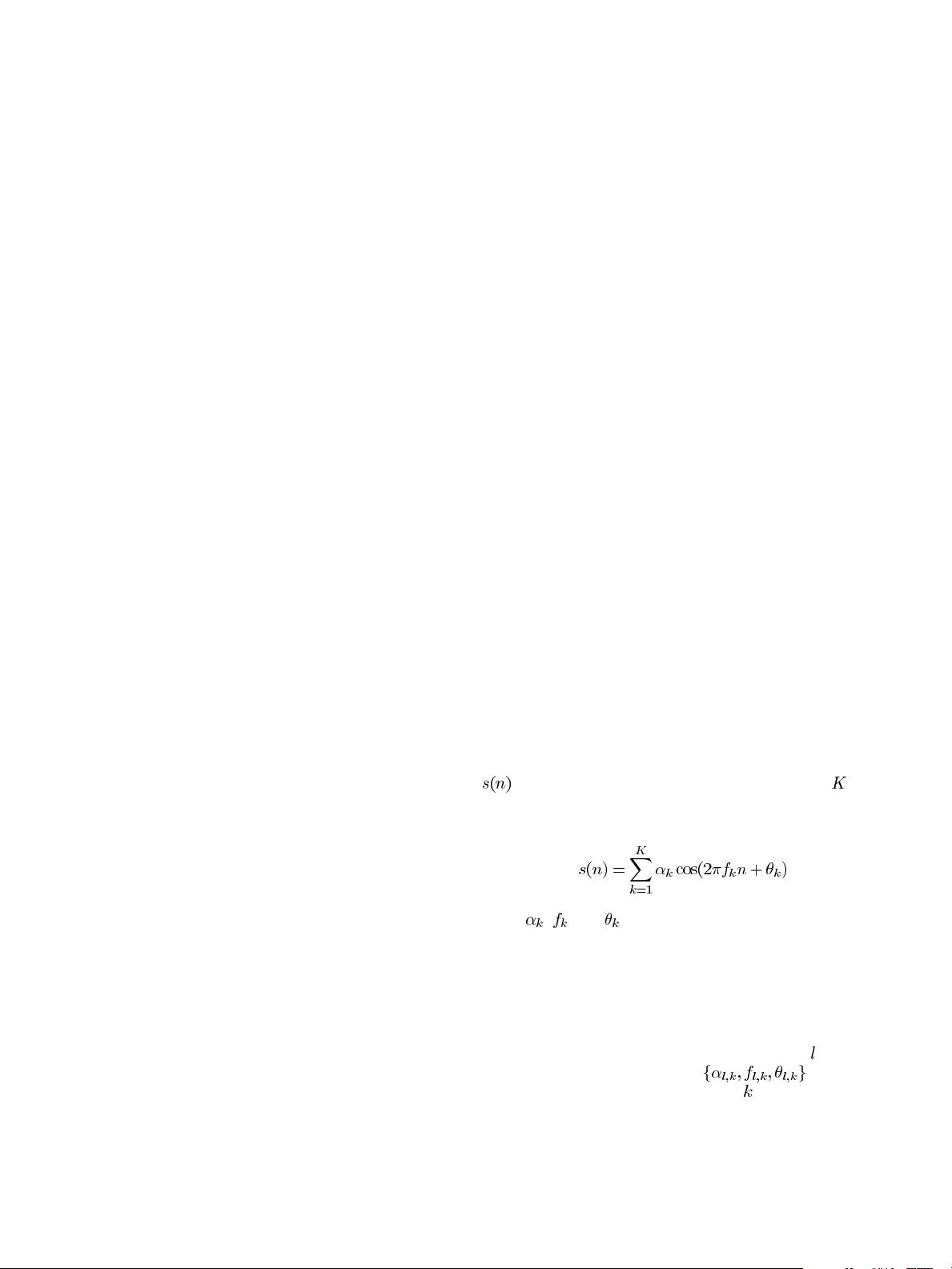

The sinusoidal model was initially used in the analysis/synthesis of speech [1]. A short-time segment of an audio signal $s(n)$ is represented as the sum of a small number $K$ of sinusoids with time-varying amplitudes and frequencies. This can be written as

$$
s(n) = \sum_{k=1}^{K} \alpha_k \cos(\omega_k n + \phi_k) \tag{1}
$$

where $\alpha_k$, $\omega_k$, and $\phi_k$ are the amplitude, frequency, and phase, respectively. To estimate the parameters of the model, one needs to segment the signal into a number of short-time frames and compute a short-time frequency representation for each frame. The prominent spectral peaks are then identified using a peak detection algorithm (possibly enhanced by perceptually based criteria). Interpolation methods can be used to increase the accuracy of the algorithm [2]. Each peak in the $\ell$th frame is represented as a triad of the form $\{\alpha_{\ell,k}, \omega_{\ell,k}, \phi_{\ell,k}\}$ (amplitude, frequency, phase), corresponding to the $k$th sinewave. A peak continuation algorithm is usually employed to assign each peak to a frequency trajectory by matching the peaks of the previous frame to the current frame, using linear amplitude interpolation and cubic phase interpolation.
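As a rough illustration of the analysis step and of (1), the sketch below picks the K largest local maxima of a frame's magnitude spectrum, returns (amplitude, frequency, phase) triads, and resynthesizes the frame. It assumes a rectangular window and on-bin frequencies, which makes the estimates exact. It omits windowing, interpolation, and peak continuation, and the function names are hypothetical.

```python
import cmath
import math


def dft(x):
    """Plain O(N^2) discrete Fourier transform."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * math.pi * k * n / N) for n in range(N)) for k in range(N)]


def pick_peaks(frame, K):
    """Return up to K (alpha, omega [rad/sample], phi) triads from the largest spectral peaks."""
    N = len(frame)
    X = dft(frame)  # rectangular window: exact only for on-bin sinusoids
    mag = [abs(v) for v in X]
    # Local maxima of |X| strictly between DC and Nyquist, then keep the K strongest.
    peaks = [k for k in range(1, N // 2) if mag[k] > mag[k - 1] and mag[k] >= mag[k + 1]]
    strongest = sorted(peaks, key=lambda k: mag[k], reverse=True)[:K]
    return [(2 * mag[k] / N, 2 * math.pi * k / N, cmath.phase(X[k])) for k in sorted(strongest)]


def synthesize(triads, N):
    """Eq. (1): s(n) = sum_k alpha_k cos(omega_k n + phi_k), for n = 0..N-1."""
    return [sum(a * math.cos(w * n + p) for a, w, p in triads) for n in range(N)]


# Usage: two on-bin sinusoids in a 64-sample frame.
N = 64
ref = [(1.0, 2 * math.pi * 5 / N, 0.3), (0.5, 2 * math.pi * 12 / N, -1.1)]
frame = synthesize(ref, N)
triads = pick_peaks(frame, K=2)
for a, w, p in triads:
    print(f"alpha={a:.3f}  omega={w:.4f} rad/sample  phi={p:+.3f}")
```

For a real on-bin cosine of amplitude alpha, the DFT coefficient at its bin has magnitude N·alpha/2 and the sinusoid's phase as its angle, which is why amplitude is read as 2|X[k]|/N.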

A more accurate representation of audio signals is achieved when a stochastic component is included in the model. This model is usually called the sinusoids plus noise model, or deterministic plus stochastic decomposition. In this model, the sinusoidal part corresponds to the “deterministic” part of the signal, due to the structured nature of this model. The remaining signal is the sinusoidal noise component $e(n)$, also referred to here as the residual or sinusoidal error signal, which is the “stochastic” part of the audio signal: it is very difficult to model accurately, but at the same time essential for high-quality audio synthesis. Accurately modeling the stochastic component has been examined both for the single-channel case, e.g., [2], [20], [21], and for the multi-channel audio case [3]. In practice, after the sinusoidal parameters are estimated, the noise component is computed by subtracting the sinusoidal component from the original signal. Note that in this paper we are only interested in encoding the sinusoidal part.
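The residual computation mentioned above is a sample-wise subtraction. The sketch below also reports a signal-to-residual energy ratio, a figure of merit added here only to show how much of a frame the sinusoidal part captures; the names `x`, `s`, and `e` and the test frame (one sinusoid plus a small alternating-sign "noise" sequence) are arbitrary illustrative choices.

```python
import math


def residual(original, sinusoidal):
    """Noise component e(n) = x(n) - s(n), where s(n) is the sinusoidal part."""
    return [x - s for x, s in zip(original, sinusoidal)]


def srr_db(original, sinusoidal):
    """Signal-to-residual energy ratio in dB."""
    e = residual(original, sinusoidal)
    return 10 * math.log10(sum(x * x for x in original) / sum(v * v for v in e))


# Usage: one on-bin sinusoid plus a small alternating "noise" term.
N = 64
s = [math.cos(2 * math.pi * 5 * n / N + 0.3) for n in range(N)]
noise = [0.01 * (-1) ** n for n in range(N)]
x = [a + b for a, b in zip(s, noise)]
print(f"SRR = {srr_db(x, s):.2f} dB")
```

Because the sinusoid and the alternating sequence occupy different DFT bins, the frame energy is the sum of their energies (32 and 0.0064), so the ratio here is 10·log10(5001).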

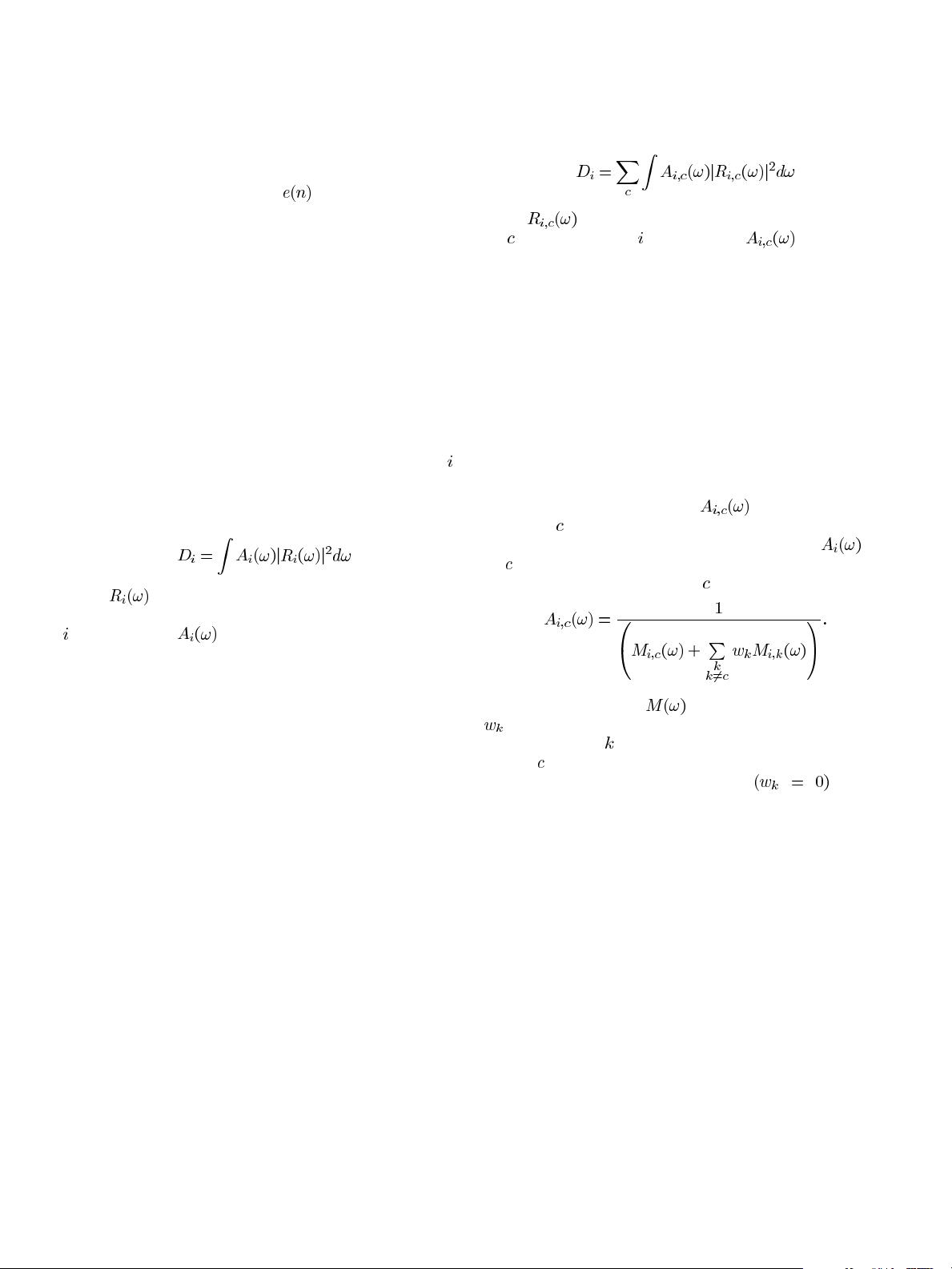

A. Single-Channel Sinusoidal Selection

To perform single-channel sinusoidal analysis, we employed state-of-the-art psychoacoustic analysis based on [22]. In the $m$th iteration, the algorithm picks a perceptually optimal sinusoidal component frequency, amplitude, and phase. This choice minimizes the perceptual distortion measure

$$
D_m = \int A_m(\omega)\, \lvert R_m(\omega) \rvert^2 \, d\omega \tag{2}
$$

where $R_m(\omega)$ is the Fourier transform of the residual signal (original frame minus the currently selected sinusoids) after the $m$th iteration, and $A_m(\omega)$ is a frequency weighting function set as the inverse of the current masking threshold energy.
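A discrete, heavily simplified version of the greedy selection behind (2) can be sketched as follows. The assumptions are our own: on-bin sinusoids and a rectangular window (so removing a sinusoid exactly zeroes bins k and N-k of the residual spectrum), and a fixed, user-supplied weight per DFT bin standing in for the frequency weighting function. The method of [22] derives the weights from the current masking threshold, which this sketch does not model; the weights in the usage example are hypothetical.

```python
import cmath
import math


def dft(x):
    """Plain O(N^2) discrete Fourier transform."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * math.pi * k * n / N) for n in range(N)) for k in range(N)]


def perceptual_mp(frame, weights, iterations):
    """Greedily pick on-bin sinusoids minimizing D = sum_k A(k) |R(k)|^2, a discrete form of (2).

    Returns the picked (bin, amplitude, phase) triads and D after each iteration.
    """
    N = len(frame)
    R = dft(frame)  # residual spectrum; initially the spectrum of the whole frame

    def gain(k):
        # Removing a real on-bin sinusoid zeroes bins k and N-k, lowering D by this amount.
        return weights[k] * abs(R[k]) ** 2 + weights[N - k] * abs(R[N - k]) ** 2

    picks, distortion = [], []
    for _ in range(iterations):
        k = max(range(1, N // 2), key=gain)
        picks.append((k, 2 * abs(R[k]) / N, cmath.phase(R[k])))
        R[k] = R[N - k] = 0j
        distortion.append(sum(w * abs(r) ** 2 for w, r in zip(weights, R)))
    return picks, distortion


# Usage: the weaker sinusoid (bin 12) is picked first because bin 5 is assumed to be
# strongly masked (small weight); with uniform weights bin 5 would come first.
N = 64
frame = [math.cos(2 * math.pi * 5 * n / N) + 0.5 * math.cos(2 * math.pi * 12 * n / N + 1.0)
         for n in range(N)]
weights = [1.0] * N
weights[5] = weights[N - 5] = 0.1  # hypothetical masking weight for bin 5
picks, distortion = perceptual_mp(frame, weights, iterations=2)
print(picks, distortion)
```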

One issue with CS encoding is that no further refinement of the sinusoid frequencies can be performed in the encoder, because frequencies that do not correspond to exact frequency bins would result in a loss of sparsity in the frequency domain. This is an important problem, because it implies that we must restrict the sinusoidal frequency estimation to the selection of frequency bins (e.g., following a peak-picking procedure), without the possibility of further refining the estimated frequencies in the encoder. This can be alleviated by zero-padding the signal frame, in other words, improving the frequency resolution during parameter estimation by reducing the bin spacing. We have found, though, that for CS-based encoding this can be done only to a limited degree, as zero-padding increases the number of measurements that must be encoded, as explained in Section IV (and consequently the bitrate). Fortunately, this problem can be partly addressed by employing the “frequency mapping” procedure, described in Section IV. Furthermore, since the sparsity restriction need not hold after the signal is decoded, frequency re-estimation, such as interpolation among frames, can be performed in the decoder.
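The sparsity loss and the effect of zero-padding on bin spacing can be checked numerically. The sketch below counts how many DFT coefficients are needed to hold 99.9% of a frame's energy for an on-bin and a half-bin-off sinusoid, and computes the frequency rounding error before and after zero-padding. The 64-sample frame, the 16-kHz sampling rate, the 1300-Hz test frequency, and the 4x padding factor are arbitrary illustrative choices; how zero-padding translates into extra CS measurements (Section IV) is not modeled.

```python
import cmath
import math


def dft(x):
    """Plain O(N^2) discrete Fourier transform."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * math.pi * k * n / N) for n in range(N)) for k in range(N)]


def bins_for_energy(X, fraction=0.999):
    """Smallest number of DFT coefficients that together hold `fraction` of the energy."""
    energies = sorted((abs(v) ** 2 for v in X), reverse=True)
    target, acc = fraction * sum(energies), 0.0
    for count, e in enumerate(energies, start=1):
        acc += e
        if acc >= target:
            return count
    return len(energies)


def bin_rounding_error(f_hz, fs_hz, n_fft):
    """Distance between a frequency and the nearest bin of an n_fft-point DFT grid."""
    spacing = fs_hz / n_fft
    return abs(f_hz - round(f_hz / spacing) * spacing)


N = 64
on_bin = [math.cos(2 * math.pi * 5.0 * n / N) for n in range(N)]
off_bin = [math.cos(2 * math.pi * 5.5 * n / N) for n in range(N)]
print("coefficients for 99.9% energy:",
      bins_for_energy(dft(on_bin)), "(on-bin) vs", bins_for_energy(dft(off_bin)), "(off-bin)")

fs, f = 16000.0, 1300.0
for pad in (1, 4):
    n_fft = pad * N  # bin grid of the frame zero-padded to n_fft points
    print(f"pad x{pad}: spacing {fs / n_fft:.1f} Hz, "
          f"error for {f:.0f} Hz = {bin_rounding_error(f, fs, n_fft):.1f} Hz")
```

The on-bin cosine needs only its two conjugate bins, while the off-bin one spreads over most of the spectrum; padding by 4 cuts the bin spacing from 250 Hz to 62.5 Hz and the rounding error for 1300 Hz from 50 Hz to 12.5 Hz.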

B. Multi-Channel Sinusoidal Selection

To perform multi-channel sinusoidal analysis, we have extended the sinusoidal modeling method presented in [23] (which employs a matching pursuit algorithm to determine the model parameters of each frame) to include the psychoacoustic analysis of [22]. For the multi-channel case, in each iteration the algorithm picks a sinusoidal component frequency that is optimal for all channels, as well as channel-specific amplitudes and phases. This choice minimizes the perceptual distortion measure

$$
D_m = \sum_{c=1}^{C} \int A_{c,m}(\omega)\, \lvert R_{c,m}(\omega) \rvert^2 \, d\omega \tag{3}
$$

where $C$ is the number of channels, $R_{c,m}(\omega)$ is the Fourier transform of the residual signal of the $c$th channel after the $m$th iteration, and $A_{c,m}(\omega)$ is a frequency weighting function set as the inverse of the current masking threshold energy. The contributions of each channel are simply summed to obtain the final measure.
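Under simplifying assumptions of our own (on-bin sinusoids, a rectangular window, and fixed per-channel, per-bin weights standing in for the frequency weighting functions), the multi-channel rule of (3) amounts to choosing one bin that maximizes the weighted energy removed, summed over channels, and then reading a separate amplitude and phase from each channel's spectrum. The function name, the two-channel test signal, and the uniform weights are hypothetical.

```python
import cmath
import math


def dft(x):
    """Plain O(N^2) discrete Fourier transform."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * math.pi * k * n / N) for n in range(N)) for k in range(N)]


def multichannel_mp(frames, weights, iterations):
    """Pick one bin per iteration shared by all channels (a discrete form of (3)).

    frames[c] is channel c's frame and weights[c][k] is its weight at bin k.
    Returns a list of (bin, [(amplitude, phase) for each channel]).
    """
    N = len(frames[0])
    R = [dft(frame) for frame in frames]  # residual spectrum of each channel

    def gain(k):
        # Weighted energy removed from bins k and N-k, summed over channels.
        return sum(A[k] * abs(Rc[k]) ** 2 + A[N - k] * abs(Rc[N - k]) ** 2
                   for A, Rc in zip(weights, R))

    picks = []
    for _ in range(iterations):
        k = max(range(1, N // 2), key=gain)
        picks.append((k, [(2 * abs(Rc[k]) / N, cmath.phase(Rc[k])) for Rc in R]))
        for Rc in R:
            Rc[k] = Rc[N - k] = 0j
    return picks


# Usage: channel 1 alone would favor bin 12, but summed over both channels bin 5 wins.
N = 64
ch0 = [1.0 * math.cos(2 * math.pi * 5 * n / N) + 0.2 * math.cos(2 * math.pi * 12 * n / N)
       for n in range(N)]
ch1 = [0.3 * math.cos(2 * math.pi * 5 * n / N) + 0.9 * math.cos(2 * math.pi * 12 * n / N + 0.5)
       for n in range(N)]
uniform = [[1.0] * N, [1.0] * N]
for k, per_channel in multichannel_mp([ch0, ch1], uniform, iterations=2):
    print(k, [(round(a, 3), round(p, 3)) for a, p in per_channel])
```

Sharing the frequency across channels is what keeps the multi-channel description compact: only one frequency index per component, with per-channel amplitude and phase.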

An important question is what masking model is suitable for multi-channel audio, where the different channels have different binaural attributes in the reproduction. In transform coding, a common problem is caused by the binaural masking level difference (BMLD): quantization noise that is masked in monaural reproduction is sometimes detectable because of binaural release, and using separate masking analysis for different channels is not suitable for loudspeaker rendering. However, this effect is not as well established in parametric coding.

We performed preliminary experiments using: 1) separate masking analysis, i.e., an individual $A_{c,m}(\omega)$ based on the masker of channel $c$ for each signal separately [see (3)]; 2) the masker of the sum of all channel signals to obtain $A_{c,m}(\omega)$ for all $c$; and 3) power summation of the other signals' attenuated maskers to the masker of channel $c$ according to

$$
\tilde{M}_c(\omega) = M_c(\omega) + \sum_{j \neq c} \gamma\, M_j(\omega) \tag{4}
$$

In the above equation, $M$ indicates the masker energy, $\gamma$ the estimated attenuation (panning) factor, which was varied heuristically, and $j$ iterates through all channel signals excluding $c$. In this paper, we chose to use the first method, i.e., separate masking analysis for channels $c = 1, \ldots, C$, because we did not find notable differences in BMLD noise unmasking, and the sound quality seemed to be marginally better with headphone reproduction. For loudspeaker reproduction, the second or third method may be more suitable.
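The power summation of the third method is a simple per-bin computation. The sketch below applies it to hypothetical per-channel masker energies (arbitrary numbers, not outputs of a real psychoacoustic model) with a single attenuation (panning) factor, here called `gamma`, and derives weights as the inverse of the masker energy, mirroring how the weighting functions in (2) and (3) are defined. The function names are our own.

```python
def combined_maskers(maskers, gamma):
    """Power summation: M~_c = M_c + gamma * sum_{j != c} M_j, evaluated per frequency bin.

    maskers[c][k] is the masker energy of channel c at bin k.
    """
    total = [sum(col) for col in zip(*maskers)]  # sum over all channels, per bin
    # total - m is the summed masker energy of the other channels.
    return [[m + gamma * (t - m) for m, t in zip(row, total)] for row in maskers]


def weights_from_maskers(maskers):
    """Frequency weighting A = 1 / masker energy, per channel and bin."""
    return [[1.0 / m for m in row] for row in maskers]


# Usage: two channels, two bins, gamma = 0.5 (a hypothetical panning attenuation).
maskers = [[4.0, 1.0],
           [1.0, 9.0]]
combined = combined_maskers(maskers, gamma=0.5)
print(combined)                       # [[4.5, 5.5], [3.0, 9.5]]
print(weights_from_maskers(maskers))  # separate analysis (first method), i.e., gamma = 0
```

Setting `gamma` to 0 recovers the separate masking analysis chosen in this paper, which makes the two options easy to compare on the same masker data.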

The use of this psychoacoustic multi-channel sinusoidal model resulted in sparser modeled signals, increasing the effectiveness of our compressed sensing encoding.

III. COMPRESSED SENSING

Compressed sensing [15], [16], also known as compressive sensing or compressive sampling, is an emerging field which has grown in response to the increasing amount of data that needs to be sensed, processed, and stored. A great majority of this data is compressed as soon as it has been sensed at the Nyquist rate. The idea behind compressed sensing is to go directly from the full-rate, analog signal to the compact representation by using measurements in the sparse basis. Thus, CS theory is based on the assumption that the signal of interest is sparse in some basis, so that it can be accurately and efficiently represented in that basis. This is not possible unless the sparse basis is known in advance, which is generally not the case. Thus compressed sensing uses random measurements in a basis that is
