Storage I/O Control Technical Overview and Considerations for Deployment

TECHNICAL WHITE PAPER

Executive Summary

Storage I/O Control (SIOC) provides storage I/O performance isolation for virtual machines, thus enabling VMware® vSphere™

(“vSphere”) administrators to comfortably run important workloads in a highly consolidated virtualized storage environment. It

protects all virtual machines from undue negative performance impact due to misbehaving I/O-heavy virtual machines, often known

as the “noisy neighbor” problem. Furthermore, SIOC can protect the service level of critical virtual machines by giving them

preferential I/O resource allocation during periods of congestion. SIOC achieves these benefits by extending the constructs of shares

and limits, used extensively for CPU and memory, to manage the allocation of storage I/O resources. SIOC improves upon the previous

host-level I/O scheduler by detecting and responding to congestion occurring at the array, and enforcing share-based allocation of I/O

resources across all virtual machines and hosts accessing a datastore.

With SIOC, vSphere administrators can mitigate the performance loss of critical workloads due to high congestion and storage latency

during peak load periods. The use of SIOC will produce better and more predictable performance behavior for workloads during

periods of congestion. Benefits of leveraging SIOC:

• Provides performance protection by enforcing proportional fairness of access to shared storage

• Detects and manages bottlenecks at the array

• Maximizes your storage investments by enabling higher levels of virtual-machine consolidation across your shared datastores

The purpose of this paper is to explain the basic mechanics of how SIOC, a new feature in vSphere 4.1, works and to discuss

considerations for deploying it in your VMware virtualized environments.

The Challenge of Shared Resources

Controlling the dynamic allocation of resources in distributed systems has been a long-standing challenge. Virtualized environments

introduce further challenges because of the inherent sharing of physical resources by many virtual machines. VMware has provided

ways to manage shared physical resources, such as CPU and memory, and to prioritize their use among all the virtual machines in the

environment. CPU and memory controls have worked well since memory and CPU resources are shared only at a local-host level, for

virtual machines residing within a single ESX® server.

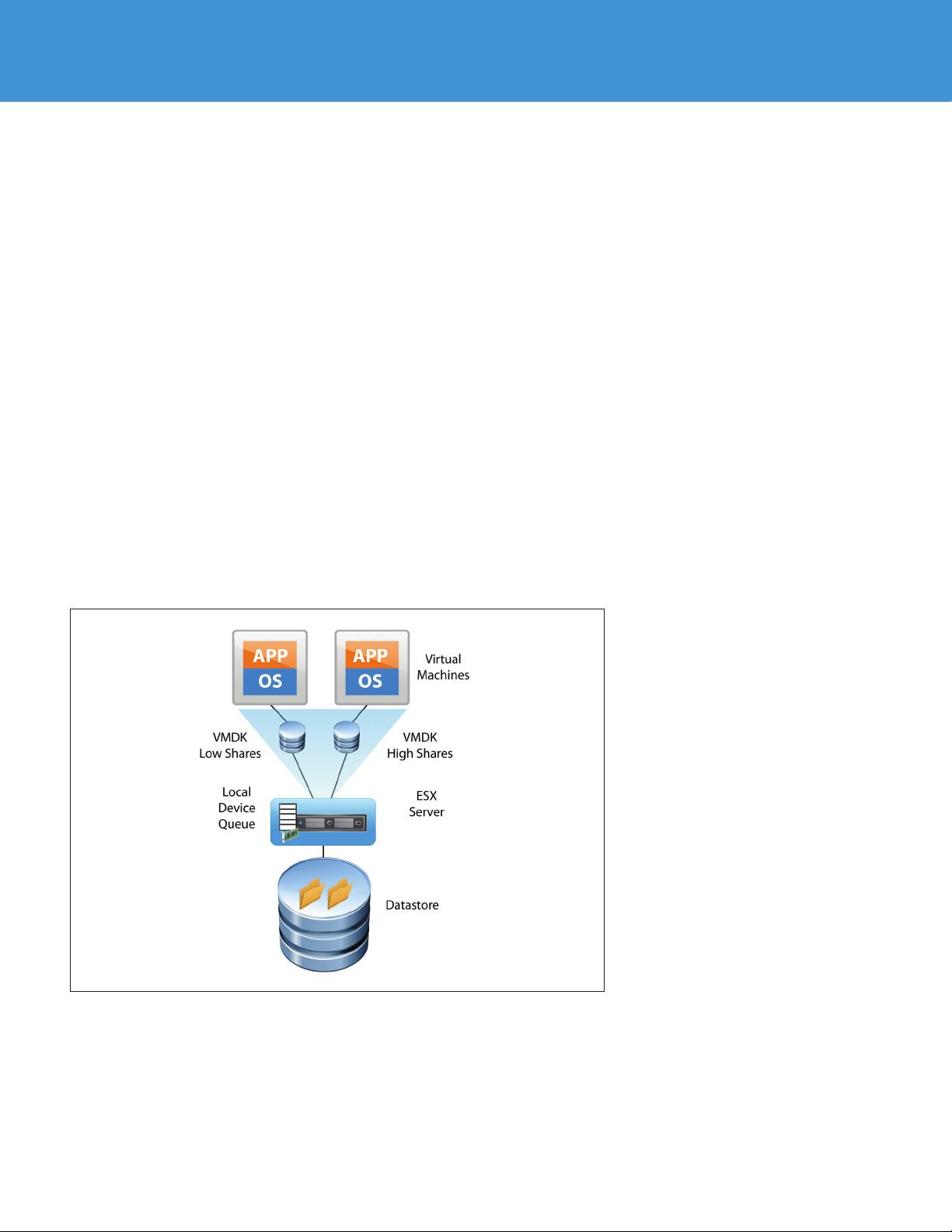

The task of regulating shared resources that span multiple ESX hosts, such as shared datastores, presents new challenges, because

these resources are accessed in a distributed manner by multiple ESX hosts. Previous disk shares did not address this challenge, as

the shares and limits were enforced only at a single ESX host level, and were only enforced in response to host-side HBA bottlenecks,

which occur rarely. This approach had the problem of potentially allowing lower-priority virtual machines greater access to storage

resources based on their placement across different ESX hosts, as well as neglecting to provide benefits in the case that the datastore

is congested but the host-side queue is not. An ideal I/O resource-management solution should provide the allocation of I/O resources

independent of the placement of virtual machines and with consideration of the priorities of all virtual machines accessing the shared

datastore. It should also be able to detect and control all instances of congestion happening at the shared resource.
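
The placement problem described above can be illustrated with a small calculation. The following is a minimal sketch, not VMware's implementation: it assumes, purely for illustration, that the array divides its throughput equally among hosts when shares are enforced only per host, and the VM names, share values, and the 1000 IOPS capacity are hypothetical.

```python
from collections import defaultdict

# (vm name, host, disk shares); all values are hypothetical.
VMS = [
    ("vm_a", "host1", 1500),
    ("vm_b", "host1", 500),
    ("vm_c", "host2", 500),
]


def host_local_allocation(vms, capacity):
    """Shares enforced per host only.

    Assumption: each host receives an equal slice of the datastore's
    capacity, which is then divided by shares among that host's VMs.
    """
    by_host = defaultdict(list)
    for name, host, shares in vms:
        by_host[host].append((name, shares))
    per_host = capacity / len(by_host)
    alloc = {}
    for members in by_host.values():
        total = sum(shares for _, shares in members)
        for name, shares in members:
            alloc[name] = per_host * shares / total
    return alloc


def datastore_wide_allocation(vms, capacity):
    """Shares enforced across every VM on the datastore, regardless of host."""
    total = sum(shares for _, _, shares in vms)
    return {name: capacity * shares / total for name, _, shares in vms}


if __name__ == "__main__":
    print(host_local_allocation(VMS, 1000))
    print(datastore_wide_allocation(VMS, 1000))
```

Under these assumptions, host-local enforcement gives the low-share vm_c more throughput than the high-share vm_a simply because vm_c is alone on its host, while datastore-wide enforcement makes each VM's allocation proportional to its shares independent of placement.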

The Storage I/O Control Solution

SIOC solves the problem of managing shared storage resources across ESX hosts. It provides a fine-grained storage-control

mechanism by dynamically managing the size of, and access to, ESX host I/O queues based on assigned shares. SIOC enhances the

disk-shares capabilities of previous releases of VMware ESX Server by enforcing these disk shares not only at the local-host level but

also at the per-datastore level. Additionally, for the first time, vSphere with SIOC provides storage-device latency monitoring and

control, with which SIOC can throttle back storage workloads according to their priority in order to maintain total storage-device

latency below a certain threshold.
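
The idea of throttling host I/O queues by shares to hold latency under a threshold can be sketched as a simple control loop. This is an illustrative sketch only, not the algorithm SIOC actually uses: the function names, the window bounds, the adjustment rule, and the 30 ms threshold in the usage lines are all assumptions made for the example.

```python
def adjust_window(window, latency_ms, threshold_ms, min_window=4, max_window=64):
    """Return a new aggregate queue window for the datastore.

    Hypothetical rule: when observed latency exceeds the threshold, scale
    the window down by threshold/latency; otherwise grow it by one slot.
    The result is clamped to [min_window, max_window].
    """
    if latency_ms > threshold_ms:
        window = window * threshold_ms / latency_ms
    else:
        window = window + 1
    return max(min_window, min(max_window, window))


def split_by_shares(window, host_shares):
    """Divide the aggregate window among hosts in proportion to the
    total shares of the VMs each host runs on this datastore."""
    total = sum(host_shares.values())
    return {host: window * shares / total for host, shares in host_shares.items()}


if __name__ == "__main__":
    # Hypothetical state: latency is twice the assumed 30 ms threshold.
    window = adjust_window(64, latency_ms=60, threshold_ms=30)
    print(window)
    print(split_by_shares(window, {"host1": 2000, "host2": 500}))
```

In this sketch, congestion shrinks the total number of outstanding I/Os allowed against the datastore, and each host's portion of that total follows the shares of its virtual machines, so higher-priority workloads keep a larger part of the reduced queue.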
