PRE-PUBLICATION DRAFT, TO APPEAR IN IEEE TRANS. ON CIRCUITS AND SYSTEMS FOR VIDEO TECHNOLOGY, DEC. 2012

Copyright (c) 2012 IEEE. Personal use of this material is permitted. However, permission to use this material for any other purposes must be obtained from the

IEEE by sending an email to pubs-permissions@ieee.org.

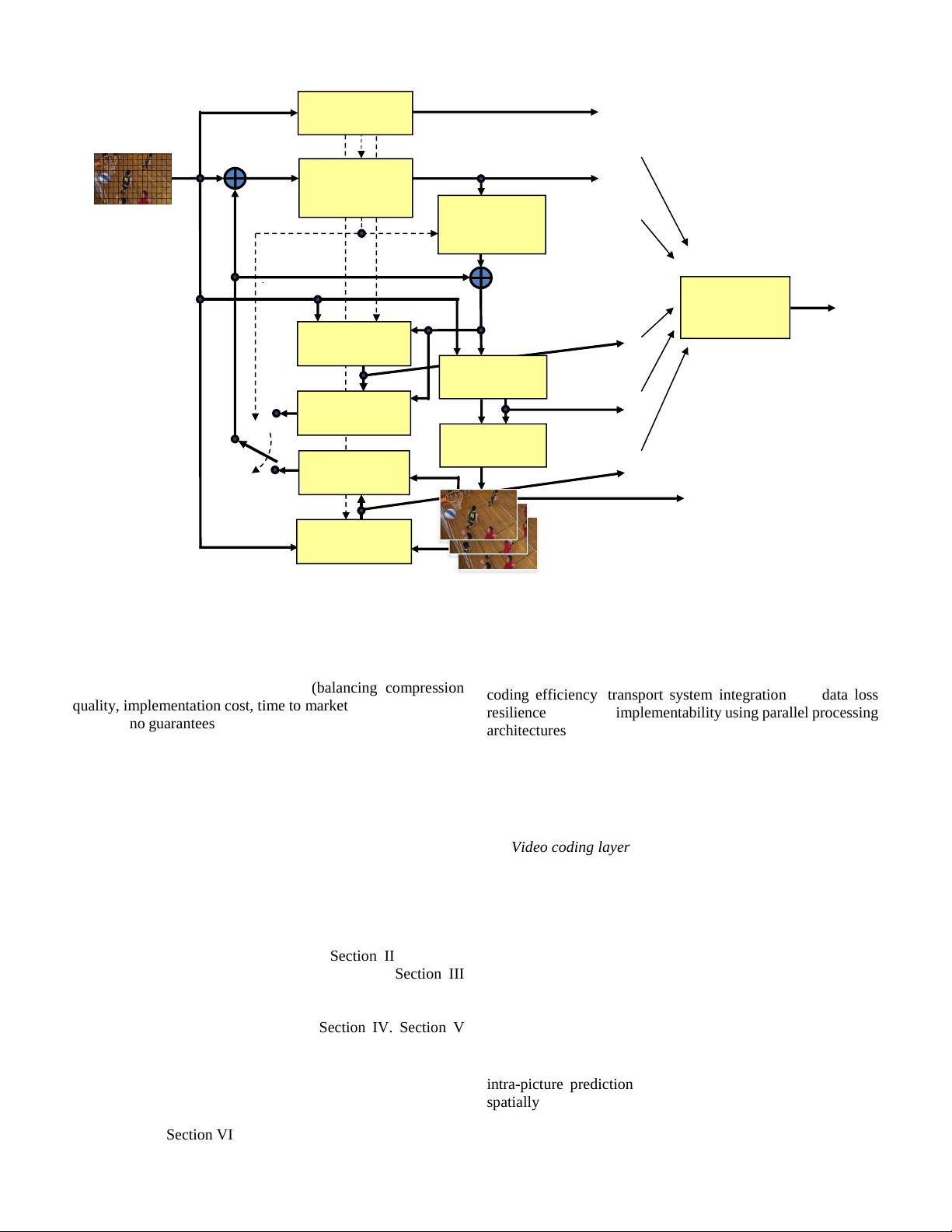

temporally-predictive coding modes are typically used for most

blocks. The encoding process for inter-picture prediction

consists of choosing motion data comprising the selected

reference picture and motion vector (MV) to be applied for

predicting the samples of each block. The encoder and decoder

generate identical inter prediction signals by applying motion

compensation (MC) using the MV and mode decision data,

which are transmitted as side information.

The residual signal of the intra or inter prediction, which is

the difference between the original block and its prediction, is

transformed by a linear spatial transform. The transform

coefficients are then scaled, quantized, entropy coded, and

transmitted together with the prediction information.

The encoder duplicates the decoder processing loop such that

both will generate identical predictions for subsequent data.

Therefore, the quantized transform coefficients are reconstructed

by inverse scaling and are then inverse transformed to duplicate

the decoded approximation of the residual signal. The residual

is then added to the prediction, and the result of that addition

may then be fed into one or two loop filters to smooth out

artifacts induced by the block-wise processing and quantization.

The final picture representation (which is a duplicate of the

output of the decoder) is stored in a decoded picture buffer to be

used for the prediction of subsequent pictures. The order in

which pictures are encoded or decoded often

differs from the order in which they arrive from the source,

necessitating a distinction between the decoding order (a.k.a.

bitstream order) and the output order (a.k.a. display order) for

a decoder.
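The closed prediction loop described above can be illustrated with a minimal sketch. It assumes a plain uniform scalar quantizer and omits the spatial transform, entropy coding, and loop filters entirely; the function names and sample values are hypothetical and are not taken from the HEVC design. The point is only that the encoder reconstructs from the same quantized levels the decoder receives, so both hold the same picture data for later prediction.

```python
# Illustrative closed-loop reconstruction (not the HEVC quantizer or transform).

def quantize(values, step):
    """Map residual values to integer levels with a uniform step size."""
    return [round(v / step) for v in values]

def dequantize(levels, step):
    """Inverse scaling: recover an approximation of the residual."""
    return [level * step for level in levels]

def reconstruct(prediction, levels, step):
    """Add the decoded residual approximation to the prediction."""
    return [p + r for p, r in zip(prediction, dequantize(levels, step))]

original = [53, 55, 61, 67]      # hypothetical source samples
prediction = [50, 50, 60, 60]    # hypothetical intra or inter prediction
step = 4                         # hypothetical quantizer step size

residual = [o - p for o, p in zip(original, prediction)]
levels = quantize(residual, step)          # this is what gets transmitted

# The encoder runs the same reconstruction as the decoder, using only
# information that is available to the decoder (prediction and levels).
encoder_recon = reconstruct(prediction, levels, step)
decoder_recon = reconstruct(prediction, levels, step)

print(levels)
print(encoder_recon)
```

Because the encoder predicts subsequent data from `encoder_recon` rather than from `original`, quantization error does not accumulate as a mismatch between encoder and decoder.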

Video material to be encoded by HEVC is generally

expected to be input as progressive scan imagery (either due to

the source video originating in that format or resulting from

de-interlacing prior to encoding). No explicit coding features

are present in the HEVC design to support the use of interlaced

scanning, as interlaced scanning is no longer used for displays

and is becoming substantially less common for distribution.

However, metadata syntax has been provided in HEVC to

allow an encoder to indicate that interlace-scanned video has

been sent by coding each field (i.e. the even or odd numbered

lines of each video frame) of interlaced video as a separate

picture or that it has been sent by coding each interlaced frame

as an HEVC coded picture. This provides an efficient method

of coding interlaced video without burdening decoders with a

need to support a special decoding process for it.

In the following, the various features involved in hybrid

video coding using HEVC are highlighted:

Coding Tree Units and Coding Tree Block structure:

The core of the coding layer in previous standards was the

macroblock, containing a 16×16 block of luma samples

and, in the usual case of 4:2:0 color sampling, two

corresponding 8×8 blocks of chroma samples; whereas the

analogous structure in HEVC is the coding tree unit

(CTU), which has a size selected by the encoder and can be

larger than a traditional macroblock. The CTU consists of a

luma coding tree block (CTB) and the corresponding

chroma CTBs and syntax elements. The size L×L of a luma

CTB can be chosen as L = 16, 32, or 64 samples, with the

larger sizes typically enabling better compression. HEVC

then supports a partitioning of the CTBs into smaller

blocks using a tree structure and quadtree-like

signaling [8].
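To give a feel for the effect of the CTB size choice, the following sketch counts how many CTUs are needed to cover a picture for each permitted value of L. It assumes the picture is covered by a regular grid of CTUs with partial CTUs at the right and bottom edges; the function name `ctu_grid` and the 1920×1080 picture size are illustrative assumptions.

```python
# Count the CTUs needed to cover a picture for a given luma CTB size L.

def ctu_grid(width, height, ctb_size):
    """Return (columns, rows) of CTUs covering a width x height luma picture."""
    if ctb_size not in (16, 32, 64):
        raise ValueError("luma CTB size must be 16, 32, or 64")
    cols = -(-width // ctb_size)    # ceiling division
    rows = -(-height // ctb_size)
    return cols, rows

for size in (16, 32, 64):
    cols, rows = ctu_grid(1920, 1080, size)
    print(f"L={size}: {cols} x {rows} = {cols * rows} CTUs")
```

For this picture size, L = 16 gives 8160 CTUs (the same count as 16×16 macroblocks would give), while L = 64 gives 510.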

Coding Units and Coding Blocks: The quadtree syntax of

the CTU specifies the size and positions of its luma and

chroma coding blocks (CBs). The root of the quadtree is

associated with the CTU. Hence, the size of the luma CTB

is the largest supported size for a luma CB. The splitting of

a CTU into luma and chroma CBs is signaled jointly. One

luma CB and ordinarily two chroma CBs, together with

associated syntax, form a Coding Unit (CU). A CTB may

contain only one CU or may be split to form multiple CUs,

and each CU has an associated partitioning into prediction

units (PUs) and a tree of transform units (TUs).
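The quadtree partitioning of a CTB into CBs can be sketched as a short recursion. This is a simplified model, not the HEVC syntax: the split decision is supplied by a hypothetical callback `should_split` (in a real encoder it would come from mode decision, in a decoder from parsed split flags), and the minimum CB size of 8 is passed as a parameter and should be treated as an assumption here.

```python
# Recursive quadtree split of a luma CTB into coding blocks (simplified model).

def split_ctb(x, y, size, should_split, min_size=8):
    """Return a list of (x, y, size) luma coding blocks covering the CTB."""
    if size > min_size and should_split(x, y, size):
        half = size // 2
        blocks = []
        # Visit the four quadrants: top-left, top-right, bottom-left, bottom-right.
        for dy in (0, half):
            for dx in (0, half):
                blocks.extend(split_ctb(x + dx, y + dy, half, should_split, min_size))
        return blocks
    return [(x, y, size)]

# Hypothetical decision: split the 64x64 root, then split only its top-left 32x32.
def example_decision(x, y, size):
    return size == 64 or (size == 32 and x == 0 and y == 0)

coding_blocks = split_ctb(0, 0, 64, example_decision)
for block in coding_blocks:
    print(block)
```

If `should_split` always returns false, the result is a single block the size of the CTB, which corresponds to the case where the CTB contains only one CU.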

Prediction Units and Prediction Blocks: The decision

whether to code a picture area using inter-picture or

intra-picture prediction is made at the CU level. A

prediction unit (PU) partitioning structure has its root at

the CU level. Depending on the basic prediction type

decision, the luma and chroma CBs can then be further

split in size and predicted from luma and chroma

prediction blocks (PBs). HEVC supports variable PB sizes

from 64×64 down to 4×4 samples.

Transform Units and Transform Blocks: The prediction

residual is coded using block transforms. A transform unit

(TU) tree structure has its root at the CU level. The luma

CB residual may be identical to the luma transform block

(TB) or may be further split into smaller luma TBs. The

same applies to the chroma TBs. Integer basis functions

similar to those of a discrete cosine transform (DCT) are

defined for the square TB sizes 4×4, 8×8, 16×16, and

32×32. For the 4×4 transform of intra-picture prediction

residuals, an integer transform derived from a form of

discrete sine transform (DST) is alternatively specified.
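As an illustration of what integer basis functions similar to those of a DCT can look like, the sketch below scales an orthonormal 4-point DCT-II matrix and rounds it to integers. The scale factor of 128 and the plain rounding are assumptions made for this example; the resulting matrix is not claimed to be the one specified in HEVC, whose coefficients are defined by the standard itself.

```python
import math

# Integer approximation of a 4-point DCT-II by scaling and rounding
# (illustrative only; not the matrix specified by HEVC).

def integer_dct_matrix(n, scale):
    """Return an n x n integer matrix approximating scale * orthonormal DCT-II."""
    matrix = []
    for k in range(n):
        norm = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        row = [round(scale * norm * math.cos((2 * i + 1) * k * math.pi / (2 * n)))
               for i in range(n)]
        matrix.append(row)
    return matrix

def forward_transform(matrix, samples):
    """Apply the 1-D transform: one integer dot product per basis function."""
    return [sum(c * s for c, s in zip(row, samples)) for row in matrix]

dct4 = integer_dct_matrix(4, 128)
for row in dct4:
    print(row)

# A constant residual has energy only in the first (DC) coefficient.
print(forward_transform(dct4, [3, 3, 3, 3]))
```

Working with integer coefficients lets the encoder and decoder compute exactly the same inverse transform, which the closed prediction loop depends on.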

Motion vector signaling: Advanced motion vector

prediction (AMVP) is used, including derivation of several

most probable candidates based on data from adjacent PBs

and the reference picture. A “merge” mode for MV coding

can also be used, allowing the inheritance of MVs from

neighboring PBs. Moreover, compared to H.264/MPEG-4

AVC, improved “skipped” and “direct” motion inference

are also specified.

Motion compensation: Quarter-sample precision is used

for the MVs, and 7-tap or 8-tap filters are used for

interpolation of fractional-sample positions (compared to

6-tap filtering of half-sample positions followed by

bi-linear interpolation of quarter-sample positions in

H.264/MPEG-4 AVC). Similar to H.264/MPEG-4 AVC,

multiple reference pictures are used. For each PB, either

one or two motion vectors can be transmitted, resulting

either in uni-predictive or bi-predictive coding,

respectively. As in H.264/MPEG-4 AVC, a scaling and

offset operation may be applied to the prediction signal(s)

in a manner known as weighted prediction.
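Two of the points above lend themselves to a small sketch: splitting a quarter-sample MV component into its integer and fractional parts, and combining two prediction signals in the bi-predictive case. The sketch assumes MV components are stored as integers in quarter-sample units and uses a simple rounded average for bi-prediction; the function names are hypothetical, and the interpolation filters and the intermediate precision of an actual codec are not modeled.

```python
# Quarter-sample motion vectors and a simplified bi-predictive average.

def split_mv(mv_quarter):
    """Split an MV component in quarter-sample units into (integer, fraction).

    The fraction is 0..3 quarter samples; a non-zero fraction means the
    reference samples must be interpolated.
    """
    return mv_quarter >> 2, mv_quarter & 3

def bi_predict(pred0, pred1):
    """Average two prediction signals with rounding (simplified)."""
    return [(a + b + 1) >> 1 for a, b in zip(pred0, pred1)]

print(split_mv(9))     # 2 full samples plus 1/4 sample
print(split_mv(-5))    # -2 full samples plus 3/4 sample, i.e. -1.25
print(bi_predict([100, 102], [104, 105]))
```

The arithmetic right shift rounds toward negative infinity, so the fractional part is always non-negative and the original value equals `4 * integer + fraction`.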

Intra-picture prediction: The decoded boundary samples

of adjacent blocks are used as reference data for spatial

prediction in PB regions when inter-picture prediction is

not performed. Intra prediction supports 33 directional
