Real-time Convolutional Neural Networks for Emotion and Gender Classification

Octavio Arriaga

Hochschule Bonn-Rhein-Sieg

Sankt Augustin, Germany

Email: octavio.arriaga@smail.inf.h-brs.de

Paul G. Plöger

Hochschule Bonn-Rhein-Sieg

Sankt Augustin, Germany

Email: paul.ploeger@h-brs.de

Matias Valdenegro

Heriot-Watt University

Edinburgh, UK

Email: m.valdenegro@hw.ac.uk

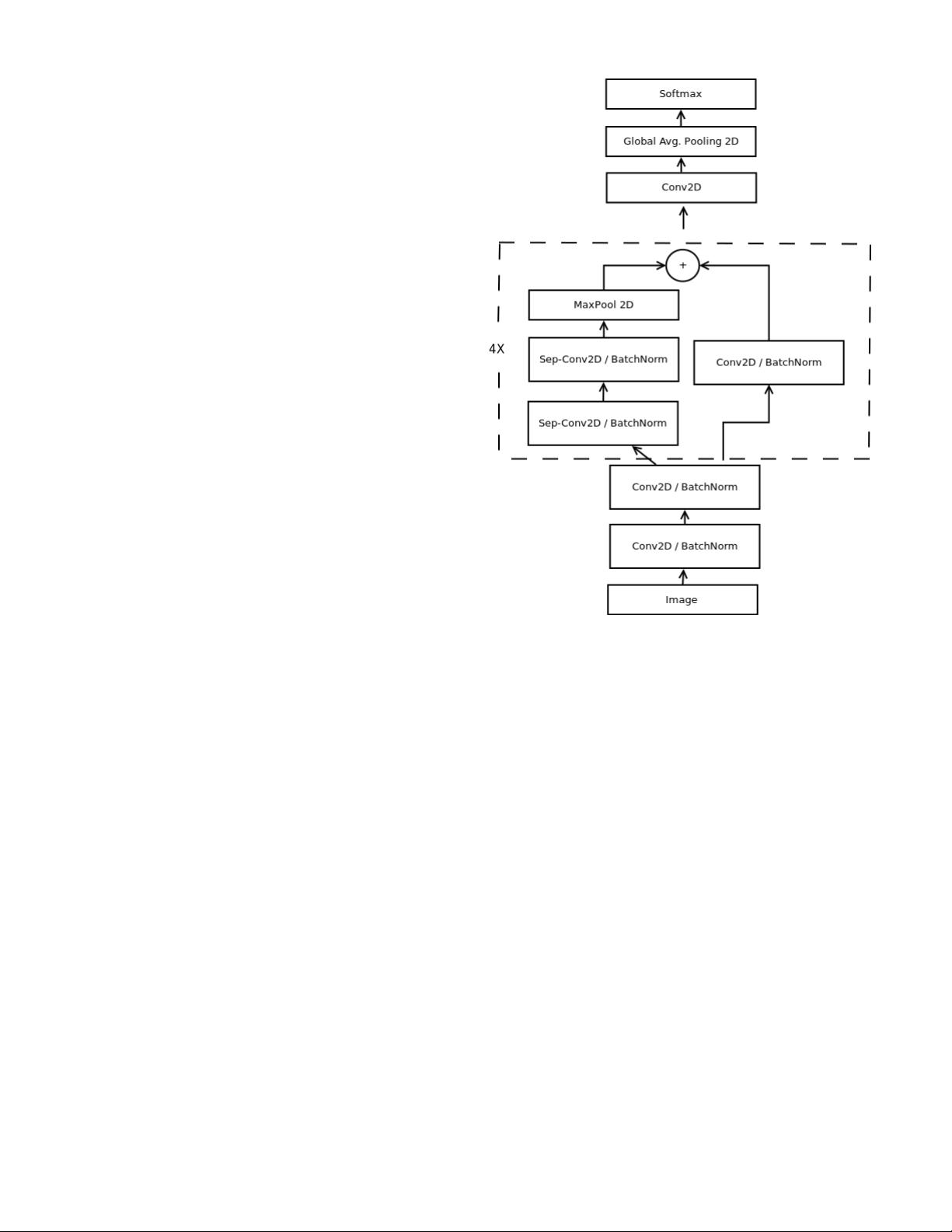

Abstract—In this paper we propose and implement a general convolutional neural network (CNN) building framework for designing real-time CNNs. We validate our models by creating a real-time vision system which accomplishes the tasks of face detection, gender classification and emotion classification simultaneously in one blended step using our proposed CNN architecture. After presenting the details of the training procedure setup, we proceed to evaluate on standard benchmark sets. We report accuracies of 96% on the IMDB gender dataset and 66% on the FER-2013 emotion dataset. Along with this, we also introduce the very recent real-time enabled guided back-propagation visualization technique. Guided back-propagation uncovers the dynamics of the weight changes and evaluates the learned features. We argue that the careful implementation of modern CNN architectures, the use of current regularization methods and the visualization of previously hidden features are necessary in order to reduce the gap between slow performance and real-time architectures. Our system has been validated by its deployment on a Care-O-bot 3 robot used during RoboCup@Home competitions. All our code, demos and pre-trained architectures have been released under an open-source license in our public repository.

I. INTRODUCTION

The success of service robotics decisively depends on smooth robot-to-user interaction. Thus, a robot should be able to extract information just from the face of its user, e.g. identify the emotional state or deduce gender. Correctly interpreting any of these elements using machine learning (ML) techniques has proven to be complicated due to the high variability of the samples within each task [4]. This leads to models with millions of parameters trained on thousands of samples [3]. Furthermore, the human accuracy for classifying an image of a face into one of 7 different emotions is 65% ± 5% [4]. One can observe the difficulty of this task by trying to manually classify the FER-2013 dataset images in Figure 1 into the following classes: {“angry”, “disgust”, “fear”, “happy”, “sad”, “surprise”, “neutral”}.
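To make the comparison with human performance concrete, the following minimal sketch encodes the seven classes and the reported human accuracy band of 65% ± 5% [4]. The names `EMOTION_LABELS`, `within_human_band` and `chance_accuracy` are hypothetical helpers introduced only for illustration, and the assignment of class indices in the listed order is an assumption rather than something stated in the text.

```python
# Class names in the order listed in the text. Using this order as the
# index order (0..6) is an assumption made for illustration only.
EMOTION_LABELS = ["angry", "disgust", "fear", "happy", "sad", "surprise", "neutral"]

HUMAN_ACCURACY_PCT = 65   # human accuracy on this task as reported in [4]
HUMAN_TOLERANCE_PCT = 5   # the +/- 5% band reported in [4]


def within_human_band(model_accuracy_pct: float) -> bool:
    """Return True if an accuracy (in percent) lies inside the human band."""
    low = HUMAN_ACCURACY_PCT - HUMAN_TOLERANCE_PCT
    high = HUMAN_ACCURACY_PCT + HUMAN_TOLERANCE_PCT
    return low <= model_accuracy_pct <= high


def chance_accuracy(num_classes: int = len(EMOTION_LABELS)) -> float:
    """Expected accuracy of uniform random guessing over the classes."""
    return 1.0 / num_classes


if __name__ == "__main__":
    print(within_human_band(66))        # a 66% accuracy falls in the 60-70% band
    print(round(chance_accuracy(), 3))  # uniform guessing baseline for 7 classes
```

Under these assumptions, an accuracy of 66% lies inside the 60% to 70% human band, while uniform guessing over seven classes yields roughly 14%.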

In spite of these difficulties, robot platforms oriented to

attend and solve household tasks require facial expressions

systems that are robust and computationally efficient. More-

over, the state-of-the-art methods in image-related tasks such

as image classification [1] and object detection are all based on

Convolutional Neural Networks (CNNs). These tasks require

CNN architectures with millions of parameters; therefore,

their deployment in robot platforms and real-time systems

Fig. 1: Samples of the FER-2013 emotion dataset [4].

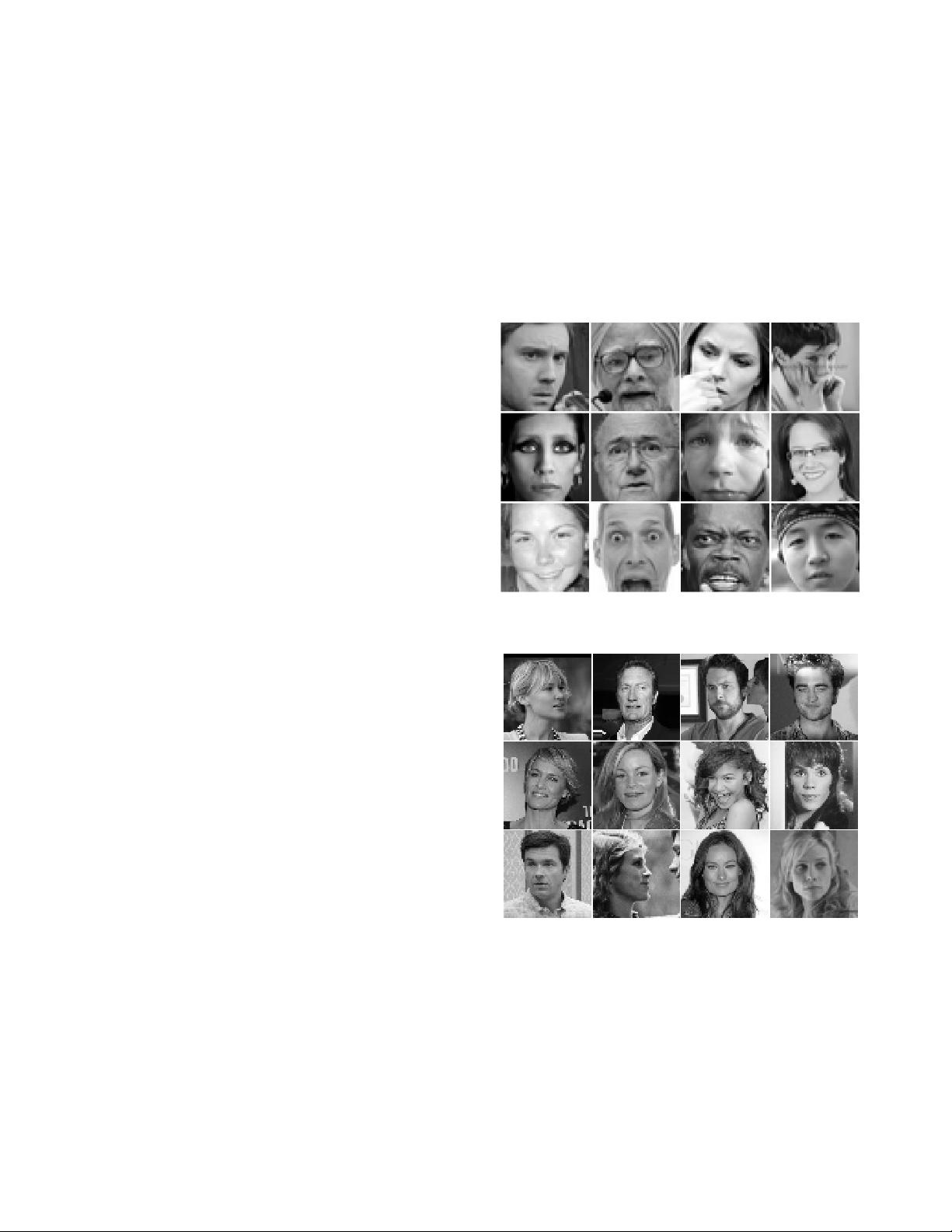

Fig. 2: Samples of the IMDB dataset [9].

becomes unfeasible. In this paper we propose an implement

a general CNN building framework for designing real-time

CNNs. The implementations have been validated in a real-time

facial expression system that provides face-detection, gender

classification and that achieves human-level performance when

classifying emotions. This system has been deployed in a

care-O-bot 3 robot, and has been extended for general robot