model on a smaller dataset conditioned on the representation learned by the first network. Decoupling the networks enables them to be trained on independent data, which reduces the need to obtain high-quality multispeaker training data. We train the speaker embedding network on a speaker verification task to determine if two different utterances were spoken by the same speaker. In contrast to the subsequent TTS model, this network is trained on untranscribed speech containing reverberation and background noise from a large number of speakers.
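To make the verification task concrete, the following minimal sketch scores a pair of utterance embeddings by cosine similarity and accepts the pair as the same speaker when the score reaches a threshold. The toy three-dimensional embeddings, the function names, and the threshold of 0.75 are assumptions chosen for illustration; they are not taken from the system described here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def same_speaker(emb_a, emb_b, threshold=0.75):
    """Hypothetical decision rule: accept when similarity reaches the threshold."""
    return cosine_similarity(emb_a, emb_b) >= threshold


# Toy embeddings (assumed values): utt_1 and utt_2 point in similar
# directions, utt_3 points elsewhere.
utt_1 = [0.9, 0.1, 0.4]
utt_2 = [0.8, 0.2, 0.5]
utt_3 = [-0.3, 0.9, 0.1]

print(same_speaker(utt_1, utt_2))
print(same_speaker(utt_1, utt_3))
```

In practice the threshold would be tuned on held-out verification trials rather than fixed by hand.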

We demonstrate that the speaker encoder and synthesis networks can be trained on unbalanced and disjoint sets of speakers and still generalize well. We train the synthesis network on 1.2K speakers and show that training the encoder on a much larger set of 18K speakers improves adaptation quality, and further enables synthesis of completely novel speakers by sampling from the embedding prior.

There has been significant interest in end-to-end training of TTS models, which are trained directly from text-audio pairs, without depending on hand-crafted intermediate representations [17, 23]. Tacotron 2 [15] used WaveNet [19] as a vocoder to invert spectrograms generated by an encoder-decoder architecture with attention [3], obtaining naturalness approaching that of human speech by combining Tacotron’s [23] prosody with WaveNet’s audio quality. It only supported a single speaker. Gibiansky et al. [8] introduced a multispeaker variation of Tacotron which learned a low-dimensional speaker embedding for each training speaker. Deep Voice 3 [13] proposed a fully convolutional encoder-decoder architecture which scaled up to support over 2,400 speakers from LibriSpeech [12]. These systems learn a fixed set of speaker embeddings and therefore only support synthesis of voices seen during training. In contrast, VoiceLoop [18] proposed a novel architecture based on a fixed-size memory buffer which can generate speech from voices unseen during training. Obtaining good results required tens of minutes of enrollment speech and transcripts for a new speaker.

Recent extensions have enabled few-shot speaker adaptation where only a few seconds of speech per speaker (without transcripts) can be used to generate new speech in that speaker’s voice. [2] extends Deep Voice 3, comparing a speaker adaptation method similar to [18], in which the model parameters (including the speaker embedding) are fine-tuned on a small amount of adaptation data, with a speaker encoding method which uses a neural network to predict a speaker embedding directly from a spectrogram. The latter approach is significantly more data efficient, obtaining higher naturalness using small amounts of adaptation data, as little as one or two utterances. It is also significantly more computationally efficient since it does not require hundreds of backpropagation iterations.

Nachmani et al. [10] similarly extended VoiceLoop to utilize a target speaker encoding network to predict a speaker embedding. This network is trained jointly with the synthesis network using a contrastive triplet loss to ensure that embeddings predicted from utterances by the same speaker are closer than embeddings computed from different speakers. In addition, a cycle-consistency loss is used to ensure that the synthesized speech encodes to an embedding similar to that of the adaptation utterance. A similar spectrogram encoder network, trained without a triplet loss, was shown to work for transferring target prosody to synthesized speech [16]. In this paper we demonstrate that training a similar encoder to discriminate between speakers leads to reliable transfer of speaker characteristics.
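The triplet objective mentioned above can be sketched as follows. This is a generic margin-based formulation and not necessarily the exact loss used in [10]; the Euclidean distance, the margin of 0.2, the toy vectors, and the function names are all assumptions made for illustration.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Margin-based triplet loss (assumed form).

    The loss is zero once the anchor is closer to the positive (same
    speaker) than to the negative (different speaker) by at least `margin`.
    """
    gap = euclidean(anchor, positive) - euclidean(anchor, negative)
    return max(0.0, gap + margin)


anchor = [0.0, 0.0]
same_spk = [0.1, 0.0]   # embedding of another utterance by the same speaker
other_spk = [1.0, 0.0]  # embedding of an utterance by a different speaker

# Constraint satisfied: the same-speaker embedding is much closer.
satisfied = triplet_loss(anchor, same_spk, other_spk)

# Constraint violated: roles swapped, so the loss is positive.
violated = triplet_loss(anchor, other_spk, same_spk)
```

Minimizing this quantity over many triplets pulls same-speaker embeddings together and pushes different-speaker embeddings apart, which is the property the paragraph above describes.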

Our work is most similar to the speaker encoding models in [2, 10], except that we utilize a network independently trained for a speaker verification task on a large dataset of untranscribed audio from tens of thousands of speakers, using a state-of-the-art generalized end-to-end loss [22]. [10] incorporated a similar speaker-discriminative representation into their model; however, all components were trained jointly. In contrast, we explore transfer learning from a pre-trained speaker verification model.
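For readers unfamiliar with the generalized end-to-end loss, the sketch below shows a simplified softmax variant as we understand it from [22]: each utterance embedding is scored against every speaker centroid by scaled cosine similarity and is encouraged to match its own speaker's centroid, which is computed without that utterance. The fixed scale `w` and offset `b` (learned in practice), the toy two-dimensional batches, and all function names are assumptions; this is not the training code of the system described here.

```python
import math


def mean(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def cosine(a, b):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def ge2e_softmax_loss(batch, w=10.0, b=-5.0):
    """Simplified GE2E-style softmax loss (assumed form).

    batch[j][i] is the embedding of utterance i from speaker j.
    Every speaker must contribute at least two utterances.
    """
    total = 0.0
    for j, utterances in enumerate(batch):
        for i, emb in enumerate(utterances):
            scores = []
            for k, other in enumerate(batch):
                if k == j:
                    # Leave the utterance out of its own speaker's centroid.
                    centroid = mean(other[:i] + other[i + 1:])
                else:
                    centroid = mean(other)
                scores.append(w * cosine(emb, centroid) + b)
            log_sum = math.log(sum(math.exp(s) for s in scores))
            # Negative log-softmax of the true-speaker score.
            total += log_sum - scores[j]
    return total


# Speakers form tight, distinct clusters.
separated = [[[1.0, 0.0], [0.9, 0.1]], [[0.0, 1.0], [0.1, 0.9]]]
# Each "speaker" mixes utterances from both clusters.
mixed = [[[1.0, 0.0], [0.0, 1.0]], [[0.9, 0.1], [0.1, 0.9]]]

loss_separated = ge2e_softmax_loss(separated)
loss_mixed = ge2e_softmax_loss(mixed)
```

The loss is small when each speaker's utterances cluster around their own centroid and large when speakers overlap, so minimizing it yields a speaker-discriminative embedding space without requiring transcripts.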

Doddipatla et al. [7] used a similar transfer learning configuration where a speaker embedding computed from a pre-trained speaker classifier was used to condition a TTS system. In this paper we utilize an end-to-end synthesis network which does not rely on intermediate linguistic features, and a substantially different speaker embedding network which is not limited to a closed set of speakers. Furthermore, we analyze how quality varies with the number of speakers in the training set, and find that zero-shot transfer requires training on thousands of speakers, many more than were used in [7].

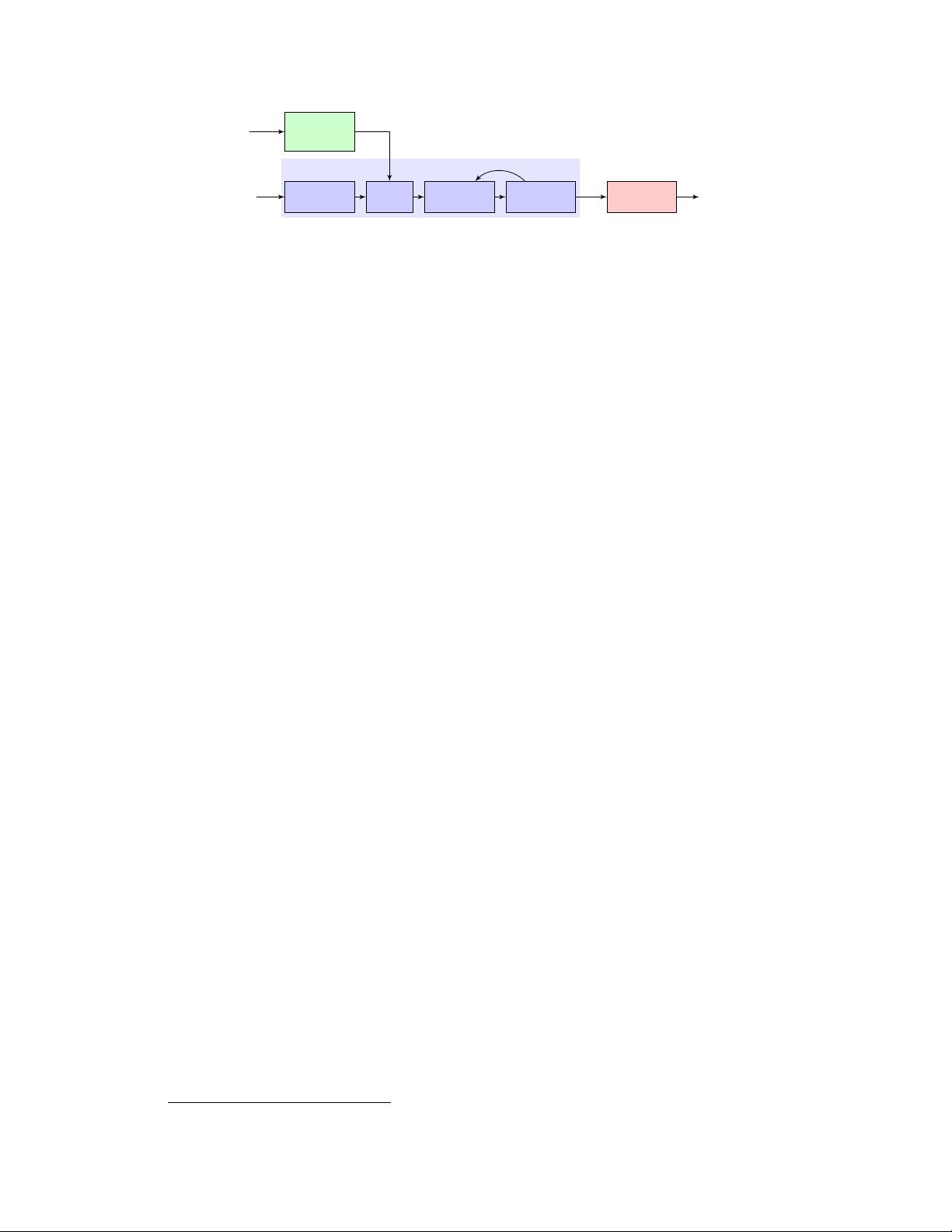

2 Multispeaker speech synthesis model

Our system is composed of three independently trained neural networks, illustrated in Figure 1: (1) a recurrent speaker encoder, based on [22], which computes a fixed-dimensional vector from a speech
