Broadcast Channels

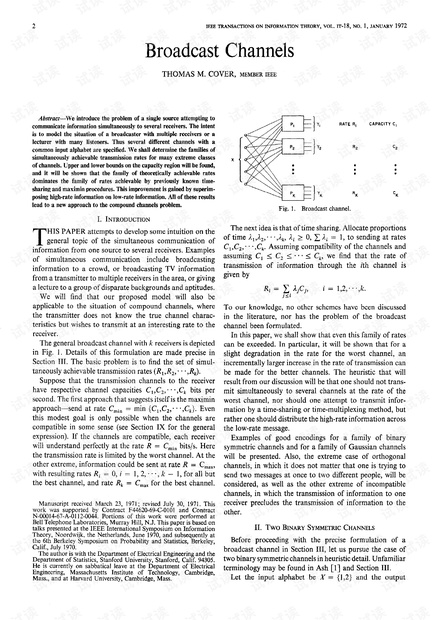

We introduce the problem of a single source attempting to communicate information simultaneously to several receivers. The intent is to model the situation of a broadcaster with multiple receivers or a lecturer with many listeners. Thus several different channels with a common input alphabet are specified. We shall determine the families of simultaneously achievable transmission rates for many extreme classes of channels. Upper and lower bounds on the capacity region will be found, and it will be shown that the family of theoretically achievable rates dominates the family of rates achievable by previously known time-sharing and maximin procedures. This improvement is gained by superimposing high-rate information on low-rate information. All of these results lead to a new approach to the compound channels problem.

- 粉丝: 18

- 资源: 74

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜 信息提交成功

信息提交成功