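Summary: This paper proposes SimpleStrat, a method for increasing the diversity of responses generated by large language models (LLMs). SimpleStrat uses stratified sampling: it partitions the solution space into strata and, at inference time, samples from a selected stratum. Experiments show that, compared with conventional temperature scaling, SimpleStrat significantly improves diversity and coverage while maintaining generation quality. Intended audience: researchers and engineers in natural language processing, machine learning, and data science, especially practitioners interested in improving the generation diversity of language models. Use cases and goals: applications that need multiple possible answers, such as search and planning, synthetic data generation, and prediction uncertainty estimation. SimpleStrat can improve downstream task performance, particularly on tasks involving multi-step reasoning. Other notes: beyond improving LLM generation diversity, SimpleStrat achieves higher recall and lower KL divergence across benchmarks, supporting its effectiveness and robustness. The method requires no additional training and can be applied directly on top of existing LLMs.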


SIMPLESTRAT: DIVERSIFYING LANGUAGE MODEL GENERATION WITH STRATIFICATION

Justin Wong

UC Berkeley

Yury Orlovskiy

UC Berkeley

Michael Luo

UC Berkeley

Sanjit A. Seshia

UC Berkeley

Joseph E. Gonzalez

UC Berkeley

[Figure 1 panels: Low Temp Sampling, SimpleStrat Sampling, High Temp Sampling. Strata axes: (N/S) of Missouri Compromise Line and (E/W) of Mississippi River. Example states shown: California, New York, Washington, Virginia, Texas, Georgia.]

Figure 1: Stratified Sampling vs. Temperature Scaling. Consider the LLM user request "Name a US State." SimpleStrat employs auto-stratification to utilize the LLM to identify good dimensions of diversity, for instance "East/West of the Mississippi River." Then, SimpleStrat uses stratified sampling to diversify LLM generations.

ABSTRACT

Generating diverse responses from large language models (LLMs) is crucial for applications such as planning/search and synthetic data generation, where diversity provides distinct answers across generations. Prior approaches rely on increasing temperature to increase diversity. However, contrary to popular belief, we show that not only does this approach produce lower-quality individual generations as temperature increases, but it also depends on the model’s next-token probabilities being similar to the true distribution of answers. We propose SimpleStrat, an alternative approach that uses the language model itself to partition the space into strata. At inference, a random stratum is selected and a sample is drawn from within that stratum. To measure diversity, we introduce CoverageQA, a dataset of underspecified questions with multiple equally plausible answers, and assess diversity by measuring the KL Divergence between the output distribution and the uniform distribution over valid ground-truth answers. As computing the probability per response/solution for proprietary models is infeasible, we measure recall on ground-truth solutions. Our evaluation shows that SimpleStrat achieves 0.05 higher recall compared to GPT-4o and a 0.36 average reduction in KL Divergence compared to Llama 3.

1 INTRODUCTION.

Large language models (LLMs) are routinely resampled in order to get a wide set of plausible generations. Three key settings where this is important are: 1) improving downstream accuracy with planning or search for agentic tasks (e.g., Tree-of-thought (Yao et al., 2024), AgentQ (Putta et al., 2024)), 2) estimating prediction uncertainty (Aichberger et al., 2024), and 3) generating diverse datasets for post-training (Dubey et al., 2024) and fine-tuning (Dai et al., 2023). All these use cases rely on the model producing multiple plausible generations for the same prompt when multiple answers exist.


arXiv:2410.09038v2 [cs.CL] 14 Oct 2024

[Figure 2 diagram: the user request "Name a US State" is passed to an LLM for Auto-Stratification, which yields the strata "(N/S) of Missouri Compromise Line" and "(E/W) of Mississippi River". Heuristic Estimation (LLM) produces a prompt distribution over the four resulting regions (values shown: 0.34, 0.34, 0.18, 0.14). Probabilistic Prompting (Sampler) draws augmented prompts of the form "Name a US State, where: E of Mississippi River, S of Missouri Comp." that are sent to the LLM.]

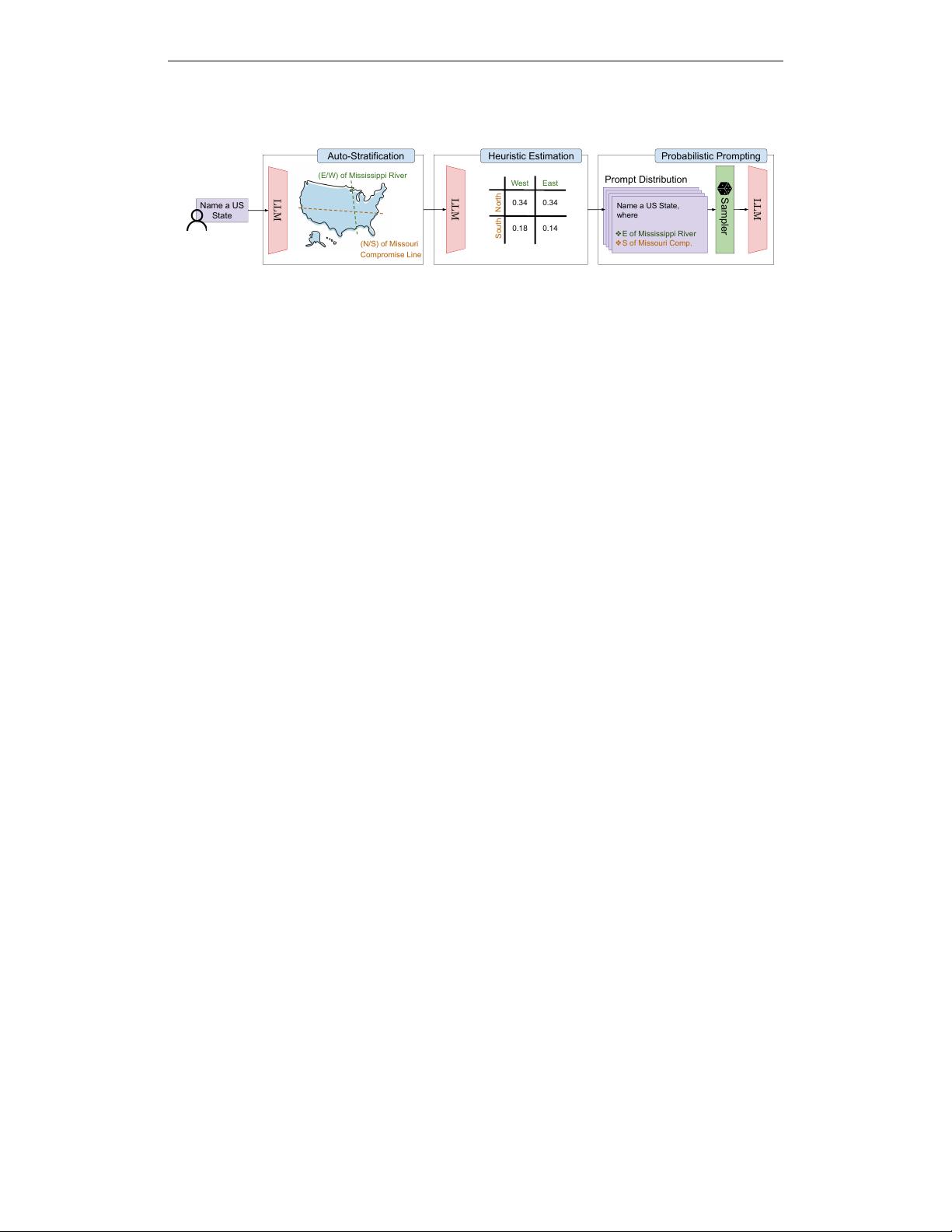

Figure 2: SimpleStrat workflow. SimpleStrat employs 3 phases: 1) auto-stratification to identify good dimensions of diversity that divide the solution space into equal partitions, 2) heuristic estimation to estimate the proportion of solutions in each stratum, and 3) probabilistic prompting where a concrete prompt is randomly sampled from the prompt distribution specified by the previous two phases. Critically, diverse resampling comes from both the random choice of prompt as well as the temperature of the LLM decoding.

Naively, increasing temperature, a parameter that controllably flattens an LLM’s softmax, can improve an LLM’s generation diversity. However, temperature introduces two problems. First, higher temperatures degrade generation quality. Recent evidence suggests removing temperature scaling is desirable for multi-step reasoning to reduce compounding errors (Zhang et al., 2024). This is especially critical in syntax-sensitive settings like code generation, where low temperatures (≤ 0.15) are often used. Second, controlling temperature does not necessarily improve diversity in the answer space. In Figure 1, we illustrate that increasing temperature does not lead to a meaningful increase in diversity if the model is excessively confident and suffers from mode collapse. When asked to "Name a US State," the model heavily skews towards answering "California"; high temperature only marginally softens the skew while surfacing incorrect answers and hurting instruction following.
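
To make the flattening effect concrete, the following is a minimal sketch of temperature-scaled softmax. The logits are hypothetical values chosen only to mimic a model that strongly prefers one answer; they are not taken from any real model.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Return softmax(logits / temperature) as a list of probabilities."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits: one dominant answer and three weaker alternatives.
answers = ["California", "New York", "Texas", "Georgia"]
logits = [5.0, 2.0, 1.5, 1.0]

for t in (0.5, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, {a: round(p, 3) for a, p in zip(answers, probs)})
```

With these assumed logits, raising the temperature lowers the probability of the dominant answer, yet that answer still receives the majority of the mass, which is the kind of skew the paragraph above describes.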

Our goal is to improve diversity when resampling LLMs, even in cases of severe mode collapse in next-token probabilities, without manual intervention. Our analysis reveals that GPT-4 assigns 87% of its logit weight to "California" when prompted to name a US state. This observed bias can be attributed to the worsening of calibration due to post-training, as reported in the GPT-4 technical report (OpenAI et al., 2024). This stark bias mirrors human cognitive bias, exemplified by the blue-seven phenomenon, where individuals disproportionately select blue and seven when asked to choose a random color and number. To counteract similar biases in human populations, social scientists, particularly in political polling, employ stratified sampling techniques (Simpson, 1951; Howell, 1992; Morris, 2022). We propose adapting this method to address mode collapse in LLMs.

We propose SimpleStrat, a training-free sampling approach to increase diversity. SimpleStrat improves LLM generation diversity without degrading generation quality while ensuring that an LLM’s outputs are aligned with the true distribution of answers. SimpleStrat consists of three stages: auto-stratification, heuristic estimation, and probabilistic prompting. Even if a language model cannot generate diverse solutions, we find that it can be prompted to identify useful partitions of the solution space based on the user request. We call this process auto-stratification. In Fig. 1, SimpleStrat identifies two semantically significant strata from the user request "Name a US State": "(East/West) of the Mississippi River" and "(North/South) of the Missouri Compromise Line."

Next, heuristic estimation computes the joint probabilities across all strata. Returning to Fig. 1, SimpleStrat outputs the probability for each of the four possible regions in the US. Finally, SimpleStrat samples from the joint probability distribution to augment the original user prompt with the selected strata. We note that this approach to diversity is orthogonal to increasing temperature and hence does not affect generation quality.
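
The final sampling step can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the joint probabilities in `JOINT` are made-up numbers, and the helper name `sample_augmented_prompt` and the exact wording of the augmented prompt are hypothetical.

```python
import random

# Hypothetical joint distribution over the four strata of the running example.
# The probabilities are illustrative only and are not taken from the paper.
JOINT = {
    ("East of the Mississippi River", "North of the Missouri Compromise Line"): 0.3,
    ("East of the Mississippi River", "South of the Missouri Compromise Line"): 0.2,
    ("West of the Mississippi River", "North of the Missouri Compromise Line"): 0.3,
    ("West of the Mississippi River", "South of the Missouri Compromise Line"): 0.2,
}

def sample_augmented_prompt(user_request, joint, rng):
    """Draw one stratum from the joint distribution and append it to the request."""
    strata = list(joint)
    weights = [joint[s] for s in strata]
    chosen = rng.choices(strata, weights=weights, k=1)[0]
    constraints = "\n".join(f"- {c}" for c in chosen)
    return f"{user_request}, where:\n{constraints}"

rng = random.Random(0)  # seeded only to make the demo repeatable
for _ in range(3):
    print(sample_augmented_prompt("Name a US State", JOINT, rng))
    print()
```

Each augmented prompt would then be sent to the LLM at whatever decoding temperature is already in use, so the randomness of the stratum choice adds to, rather than replaces, the randomness of decoding.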

We evaluate SimpleStrat on underspecified questions, specifically questions that have more than one plausible answer. However, unlike ambiguous questions more broadly, an answer to an underspecified question can be easily verified to be valid without additional context. These questions capture settings where the user is indifferent to the particular answer as long as it’s valid, or settings where we wish to resample to get a set of candidate solutions. We introduce CoverageQA, a benchmark of underspecified questions with on average 28.7 equally plausible answers.

We measure diversity by computing the Kullback-Leibler (KL) Divergence from the response distribution to a uniform distribution over all valid answers. By computing the response distribution using next-token probabilities, we show SimpleStrat samples from a less biased distribution. For proprietary models where we cannot express the response distribution in closed form, we measure the model’s coverage via recall of ground-truth solutions over 100 samples. On CoverageQA, SimpleStrat leads to a 0.36 reduction in KL Divergence on average on Llama 3 models and a consistent 0.05 increase in recall. We show gains on top of temperature scaling, leading to improved diversity orthogonal to increasing temperature.
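
The two metrics can be sketched as below. The paper's exact formulation is not given in this section, so two choices here are assumptions: the divergence is computed as KL(P || U) after renormalizing the response distribution P over the valid answers, and recall is the fraction of valid answers that appear at least once in the samples. The function names are hypothetical.

```python
import math

def kl_to_uniform(response_probs, valid_answers):
    """KL(P || U): P is renormalized over the valid answers, U is uniform over them."""
    mass = [response_probs.get(a, 0.0) for a in valid_answers]
    total = sum(mass)
    if total <= 0:
        raise ValueError("no probability mass on valid answers")
    n = len(valid_answers)
    # Terms with p == 0 contribute 0 by the usual convention 0 * log 0 = 0.
    return sum((p / total) * math.log((p / total) * n) for p in mass if p > 0)

def recall(samples, valid_answers):
    """Fraction of valid answers that appear at least once among the samples."""
    valid = set(valid_answers)
    return len(valid & set(samples)) / len(valid)

# Toy example with four valid answers.
valid = ["A", "B", "C", "D"]
uniform = {a: 0.25 for a in valid}
collapsed = {"A": 1.0}

print(kl_to_uniform(uniform, valid))    # perfectly uniform response distribution
print(kl_to_uniform(collapsed, valid))  # full mode collapse onto one answer
print(recall(["A", "A", "B", "X"], valid))
```

Under these assumptions a lower divergence means the model's answers are spread more evenly over the valid set, and complete collapse onto one of n answers gives the maximum value, log n.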

Concretely, our work contributes the following:

• CoverageQA, a dataset of 105 underspecified questions automatically generated from WikiData (Vrandečić & Krötzsch, 2014), annotated with on average 28.7 valid solutions per question.

• We propose SimpleStrat, a training-free approach for improving diversity with auto-stratification and probabilistic prompting.

• We demonstrate that SimpleStrat improves diversity on CoverageQA, with a 0.36 reduction in KL Divergence on average on Llama 3 models and a consistent 0.05 increase in recall across all temperatures for GPT-4o.

2 RELATED WORK.

Temperature Scaling. Going back as far as Platt scaling (Platt, 2000) and later applied to neural networks (Hinton, 2015; Guo et al., 2017), temperature scaling controls the randomness of probability distributions¹. For dataset generation with LLMs, Chung et al. (2023) extend temperature-based diversity by additionally downsampling previously sampled tokens. To address the decrease in quality, they advocate for human intervention to manually filter out irrelevant diversity and manually fix wrong answers in QA tasks. We show in our work that temperature scaling leaves much to be desired.

Improving Language Model Diversity with Search. In autoregressive generation, choices over early tokens tend to have more impact on the eventual completion. Beam search ameliorates this bias by allowing for multiple candidates in searching for the probability-maximizing completion, the Maximum a Posteriori (MAP) estimate (Lowerre & Reddy, 1976). At the end of the search, beam search will have multiple candidate solutions encountered during search. Diverse Beam Search (DBS) proposes introducing an auxiliary dissimilarity objective quantifying the diversity among candidates in the beam (Vijayakumar et al., 2016). Especially on the task of image captioning, DBS shows improvement in discovering higher-probability completions and diverse continuations. Our improvements are orthogonal to beam search, and our in-context approach corrects for inaccuracies in the modeled likelihoods of candidate solutions.

Other approaches (Samvelyan et al., 2024; Bradley et al., 2023) based on MAP-Elites (Mouret & Clune, 2015) require manually determined dimensions of relevant diversity and discretization of the solution space into equally sized bins. Diversity is then achieved by mutations and evolutionary methods to cover adjacent bins. This search is potentially slow if the seed set of solutions does not already provide coverage over the solution space. Our approach does not need seed solutions and avoids manually identifying dimensions of diversity. Instead, we rely solely on capabilities within the model.

In-context Methods to Increase Diversity. When LLMs were first introduced, LMs were used to augment existing datasets with more diversity (Wei & Zou, 2019; Ng et al., 2020; Dai et al., 2023). As correctness is difficult to guarantee for natural language, the space of augmentations was conservatively limited to thesaurus-based synonym replacement. More recently, Language Model Crossover proposes presenting a random subset of existing data points to an LLM and asking it to hallucinate more data points that likely came from the same distribution (Meyerson et al., 2023). This is limited to combining aspects of existing data points into new generations. Although these methods address the limitations of using the model’s token probabilities through in-context learning, they are ineffective at generating meaningful diversity. They are limited to either human-identified domains of interest or trivial variations sourced from synonyms or from mimicking random subsets of the existing dataset.

Applications of Diversity. As shown by Raventós et al. (2024), dataset diversity is crucial for model generalization. Below sufficient coverage of the desired task, the model will resort to memorization, but when sufficient diversity is presented it will learn to generalize. As LLMs are increasingly used for generating synthetic data (Dubey et al., 2024), methods for diversity will be critical. This insight follows from extensive work demonstrating the benefits of data augmentation for bias mitigation (Sharmanska et al., 2020) and domain adaptation (Huang et al., 2018; Dunlap et al., 2023; Trabucco et al., 2023).

¹ Use of a temperature parameter goes back at least to Verhulst’s development of logistic regression in response to Malthus’ An Essay on the Principle of Population (Malthus, 1798; Verhulst, 1838).

In code and math applications, checking validity efficiently enables more aggressive augmentations. One such augmentation diversifies the languages supported by the model by translating data into different natural or programming languages (Chen et al., 2023; Cassano et al., 2023). In other domains such as images, text-to-image models have been used to diversify data into uncommon settings. In the setting of diversifying an accumulating dataset, these methods can take advantage of an existing source of variance (for translation) or a set of previously generated data points. Our primary focus is on settings where SimpleStrat is unaware of past data samples, to support a wider set of applications.

Ambiguous or Underspecified Datasets. ClariQ (Aliannejadi et al., 2020), CLAQUA (Xu et al., 2019), and AmbigQA (Min et al., 2020) focus on assessing an LM’s ability to formulate clarifying questions. These questions tend to have only 2 candidate solutions, as there exists a ground-truth clarifying question whose answer fully specifies the question. Ambiguous Trivia QA (Kuhn et al., 2022) also looks at underspecified questions, but assumes the user has contextual information that is hidden. For instance, "Where in England was she born?" or "Who was the first woman to make a solo flight across this ocean?". We distinguish our underspecified question setting in this paper as one where the user is indifferent. In this setting, given an answer, it should be easy to verify that the answer is correct without additional hidden context.

Coding datasets like Description2Code (Caballero et al., 2016), Wiki2SQL (Zhong et al., 2017), SPIDER (Yu et al., 2019), code-contest (Li et al., 2022), Apps (Hendrycks et al., 2021), and Leetcode Hard (Shinn et al., 2023) admit multiple valid answers. However, the space of valid implementations is infinite, making diversity difficult to measure, and good coding practices enforce preferences among valid implementations. We additionally construct CoverageQA to have an exhaustive list of ground-truth answers in order to measure the impact of diversity on coverage.

3 METHOD

3.1 WORKFLOW OVERVIEW

As illustrated in Fig. 2, SimpleStrat consists of three stages: 1) auto-stratification, 2) heuristic estimation, and 3) probabilistic prompting. For each unique user prompt, the outputs of the first two stages can be cached to avoid recomputing forward passes.
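
The caching idea can be sketched with Python's standard `functools.lru_cache`. Everything else here is a stand-in: `propose_strata` and `estimate_proportions` are hypothetical placeholders for the two LLM-backed stages, and they return fixed values so that the example runs without a model.

```python
from functools import lru_cache

# Counts how often each (simulated) LLM stage actually runs.
CALLS = {"stratify": 0, "estimate": 0}

def propose_strata(user_request):
    """Stand-in for the auto-stratification LLM call (hypothetical)."""
    CALLS["stratify"] += 1
    return (("East of the Mississippi River", "West of the Mississippi River"),)

def estimate_proportions(user_request, strata):
    """Stand-in for the heuristic-estimation LLM call (hypothetical)."""
    CALLS["estimate"] += 1
    return tuple(tuple(1.0 / len(dim) for _ in dim) for dim in strata)

@lru_cache(maxsize=None)
def prepare(user_request):
    """Run stages 1 and 2 once per unique request; later calls hit the cache."""
    strata = propose_strata(user_request)
    return strata, estimate_proportions(user_request, strata)

# Resampling the same request five times triggers the expensive stages once.
for _ in range(5):
    strata, proportions = prepare("Name a US State")
print(CALLS)
```

Only the third stage, which draws a stratum and queries the model with the augmented prompt, needs to run on every resample.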

3.2 AUTO-STRATIFICATION

For a given user request, $r_{\text{user}}$, we call $S$ the space of valid solutions. In many settings, the space of potential solutions, $S$, may be naturally partitioned based on geography, parity, or demographics. The partition function, $P : S \to L$, assigns any solution $s$ from $S$ to a partition label $l_j$ in $L$, the set of partition labels. Partition functions are most useful if they’re as balanced as possible. A balanced partition function minimizes

$$\operatorname{imbalance}(P, L) = \max_{l \in L} \left|\{ s \mid P(s) = l \}\right| - \min_{l \in L} \left|\{ s \mid P(s) = l \}\right|.$$

The goal of auto-stratification is to search for a set of partition functions, $\mathcal{P} = \{P_1, P_2, \ldots, P_n\}$, that are balanced. Traditionally, in settings where some valid solutions are oft-overlooked or where the number of valid solutions is large or infinite, stratified sampling can ensure our limited budget of samples covers the space of solutions evenly.
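
The imbalance measure defined above can be computed directly when the solution space is small enough to enumerate. The sketch below uses a toy solution space (the integers 1 to 10) and two illustrative partition functions, all of which are assumptions made for the example.

```python
from collections import Counter

def imbalance(partition, solutions, labels):
    """max_l |{s : P(s) = l}| - min_l |{s : P(s) = l}| over all labels in L."""
    counts = Counter(partition(s) for s in solutions)
    sizes = [counts.get(label, 0) for label in labels]  # empty strata count as 0
    return max(sizes) - min(sizes)

# Toy solution space: the integers 1..10.
solutions = range(1, 11)

def parity(n):
    """Balanced partition: five even and five odd numbers."""
    return "even" if n % 2 == 0 else "odd"

def small(n):
    """Unbalanced partition: two 'small' numbers versus eight 'large' ones."""
    return "small" if n <= 2 else "large"

print(imbalance(parity, solutions, ["even", "odd"]))    # 5 - 5
print(imbalance(small, solutions, ["small", "large"]))  # 8 - 2
```

A partition such as `parity`, with imbalance 0, splits the solutions into equal halves, which is the property auto-stratification looks for in a candidate dimension.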

Based on this insight, we prompt the language model to identify promising dimensions of diversity. Concretely, the language model proposes good clarifying questions that will potentially eliminate half of the potential solutions based on the user request. These clarifying questions tend to align with semantically significant differences. In the running example, when asked, "Name a US State," the states can be partitioned based on East or West of the Mississippi River. See App. C for full prompt.
