Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, pages 1–10

Vancouver, Canada, July 30 - August 4, 2017.

© 2017 Association for Computational Linguistics

https://doi.org/10.18653/v1/P17-1001

Adversarial Multi-task Learning for Text Classification

Pengfei Liu, Xipeng Qiu, Xuanjing Huang

Shanghai Key Laboratory of Intelligent Information Processing, Fudan University

School of Computer Science, Fudan University

825 Zhangheng Road, Shanghai, China

{pfliu14,xpqiu,xjhuang}@fudan.edu.cn

Abstract

Neural network models have shown promise for multi-task learning, where the focus is on learning shared layers that extract common, task-invariant features. However, in most existing approaches, the extracted shared features are prone to contamination by task-specific features or by noise from other tasks. In this paper, we propose an adversarial multi-task learning framework that keeps the shared and private latent feature spaces from interfering with each other. We conduct extensive experiments on 16 different text classification tasks, which demonstrate the benefits of our approach. In addition, we show that the shared knowledge learned by our proposed model can be regarded as off-the-shelf knowledge and easily transferred to new tasks. The datasets of all 16 tasks are publicly available at http://nlp.fudan.edu.cn/data/

1 Introduction

Multi-task learning is an effective approach to improve the performance of a single task with the help of other related tasks. Recently, neural-based models for multi-task learning have become very popular, ranging from computer vision (Misra et al., 2016; Zhang et al., 2014) to natural language processing (Collobert and Weston, 2008; Luong et al., 2015), since they provide a convenient way of combining information from multiple tasks.

However, most existing work on multi-task

learning (Liu et al., 2016c,b) attempts to divide the

features of different tasks into private and shared

spaces, merely based on whether parameters of

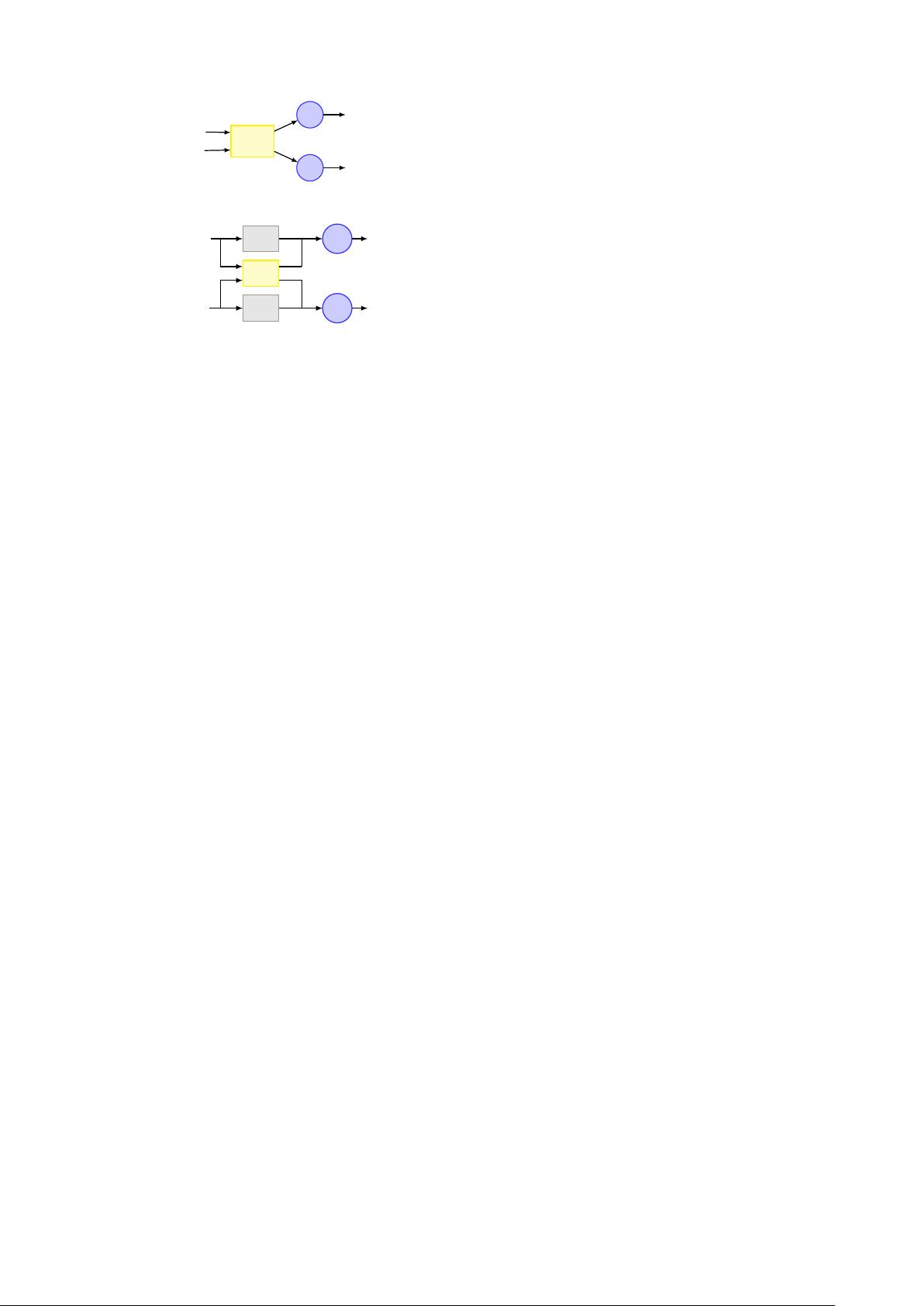

A B

(a) Shared-Private Model

A B

(b) Adversarial Shared-Private Model

Figure 1: Two sharing schemes for task A and task

B. The overlap between two black circles denotes

shared space. The blue triangles and boxes repre-

sent the task-specific features while the red circles

denote the features which can be shared.

some components should be shared. As shown in

Figure 1-(a), the general shared-private model in-

troduces two feature spaces for any task: one is

used to store task-dependent features, the other is

used to capture shared features. The major lim-

itation of this framework is that the shared fea-

ture space could contain some unnecessary task-

specific features, while some sharable features

could also be mixed in private space, suffering

from feature redundancy.
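The shared-private scheme described above can be illustrated with a minimal sketch. This is not the authors' implementation: the single tanh layer standing in for a sentence encoder, the concatenation of shared and private features, and all names (`SharedPrivateModel`, `make_layer`, `encode`, the task labels, the dimensions) are assumptions made purely for illustration.

```python
import math
import random


def make_layer(in_dim, out_dim, rng):
    """Return a randomly initialised weight matrix (out_dim rows, in_dim columns)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]


def encode(weights, x):
    """Apply one tanh layer; a hypothetical stand-in for a real sentence encoder."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in weights]


class SharedPrivateModel:
    """Toy shared-private feature extractor for several tasks (illustrative only)."""

    def __init__(self, tasks, in_dim, shared_dim, private_dim, seed=0):
        rng = random.Random(seed)
        # One encoder whose parameters are shared by every task.
        self.shared = make_layer(in_dim, shared_dim, rng)
        # One private encoder per task.
        self.private = {t: make_layer(in_dim, private_dim, rng) for t in tasks}

    def features(self, task, x):
        """Concatenate shared features with the task's private features (assumed)."""
        return encode(self.shared, x) + encode(self.private[task], x)


model = SharedPrivateModel(["movie", "baby"], in_dim=4, shared_dim=3, private_dim=2)
x = [0.5, -1.0, 0.25, 0.0]  # hypothetical input representation of one sentence
movie_feats = model.features("movie", x)
baby_feats = model.features("baby", x)
```

In this sketch nothing constrains what the shared encoder learns, which mirrors the limitation noted above: task-specific information is free to leak into the shared features.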

Take the following two sentences as examples, drawn from two different sentiment classification tasks: movie reviews and baby product reviews.

The infantile cart is simple and easy to use.

This kind of humour is infantile and boring.

The word “infantile” indicates negative sentiment in the Movie task, while it is neutral in the Baby task. However, the general shared-private model could place the task-specific word “infantile” in the shared space, leaving potential hazards for other tasks. Additionally, the capacity of the shared space could be wasted on such unnecessary features.

To address this problem, in this paper we

propose an adversarial multi-task framework, in

which the shared and private feature spaces are in-
