Visualizing and Understanding Convolutional Networks

1.1. Related Work

Visualizing features to gain intuition about the network is common practice, but mostly limited to the 1st layer where projections to pixel space are possible. In higher layers this is not the case, and there are limited methods for interpreting activity. (Erhan et al., 2009) find the optimal stimulus for each unit by performing gradient descent in image space to maximize the unit’s activation. This requires a careful initialization and does not give any information about the unit’s invariances. Motivated by the latter’s shortcoming, (Le et al., 2010) (extending an idea by (Berkes & Wiskott, 2006)) show how the Hessian of a given unit may be computed numerically around the optimal response, giving some insight into invariances. The problem is that for higher layers, the invariances are extremely complex, so they are poorly captured by a simple quadratic approximation. Our approach, by contrast, provides a non-parametric view of invariance, showing which patterns from the training set activate the feature map. (Donahue et al., 2013) show visualizations that identify patches within a dataset that are responsible for strong activations at higher layers in the model. Our visualizations differ in that they are not just crops of input images, but rather top-down projections that reveal structures within each patch that stimulate a particular feature map.

2. Approach

We use standard fully supervised convnet models throughout the paper, as defined by (LeCun et al., 1989) and (Krizhevsky et al., 2012). These models map a color 2D input image $x_i$, via a series of layers, to a probability vector $\hat{y}_i$ over the C different classes. Each layer consists of (i) convolution of the previous layer output (or, in the case of the 1st layer, the input image) with a set of learned filters; (ii) passing the responses through a rectified linear function (relu(x) = max(x, 0)); (iii) [optionally] max pooling over local neighborhoods and (iv) [optionally] a local contrast operation that normalizes the responses across feature maps. For more details of these operations, see (Krizhevsky et al., 2012) and (Jarrett et al., 2009). The top few layers of the network are conventional fully-connected networks and the final layer is a softmax classifier. Fig. 3 shows the model used in many of our experiments.
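
Steps (i) to (iii) of a single layer can be illustrated with a minimal sketch in plain Python. This is not the implementation used in the paper. It assumes a single-channel input, one hypothetical 2x2 filter with no bias, stride-1 "valid" filtering (written as cross-correlation, as is common in convnet code) and non-overlapping 2x2 pooling. The optional contrast normalization of step (iv) is omitted, and all function names are illustrative.

```python
def conv2d_valid(image, kernel):
    """Stride-1 'valid' cross-correlation of one channel with one filter."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(fmap):
    """Element-wise relu(x) = max(x, 0)."""
    return [[max(v, 0.0) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    out_h, out_w = len(fmap) // size, len(fmap[0]) // size
    return [
        [
            max(fmap[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def layer_forward(image, kernel, pool_size=2):
    """Steps (i) filter, (ii) rectify, (iii) pool for a single feature map."""
    return max_pool(relu(conv2d_valid(image, kernel)), pool_size)


# Toy 5x5 input and a hypothetical 2x2 filter (values chosen for illustration).
image = [
    [1.0, 2.0, 0.0, 1.0, 3.0],
    [0.0, 1.0, 2.0, 1.0, 0.0],
    [3.0, 0.0, 1.0, 2.0, 1.0],
    [1.0, 2.0, 0.0, 0.0, 2.0],
    [0.0, 1.0, 3.0, 1.0, 0.0],
]
kernel = [[1.0, -1.0], [-1.0, 1.0]]

features = layer_forward(image, kernel)
print(features)  # [[3.0, 2.0], [4.0, 3.0]]
```

A real layer applies many filters across all input channels and produces one feature map per filter; the sketch keeps a single map so that each step is easy to follow by hand.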

We train these models using a large set of N labeled images {x, y}, where label $y_i$ is a discrete variable indicating the true class. A cross-entropy loss function, suitable for image classification, is used to compare $\hat{y}_i$ and $y_i$. The parameters of the network (filters in the convolutional layers, weight matrices in the fully-connected layers and biases) are trained by back-propagating the derivative of the loss with respect to the parameters throughout the network, and updating the parameters via stochastic gradient descent. Full details of training are given in Section 3.
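
The training objective can be sketched on a toy problem. The example below is an illustration under stated assumptions rather than the training setup of Section 3: it replaces the full convnet with a single linear layer followed by a softmax, uses a hypothetical learning rate of 0.1, and takes one stochastic gradient descent step on one labeled example. It relies on the standard result that the derivative of the cross-entropy loss with respect to the softmax inputs is the predicted probability vector minus the one-hot label.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, label):
    """Cross-entropy between predicted probabilities and a discrete true class."""
    return -math.log(probs[label])


def forward(weights, biases, x):
    """Linear layer followed by softmax (a stand-in for the full network)."""
    logits = [sum(w * xi for w, xi in zip(row, x)) + b
              for row, b in zip(weights, biases)]
    return softmax(logits)


def sgd_step(weights, biases, x, label, lr=0.1):
    """One SGD update; lr is a hypothetical learning rate."""
    probs = forward(weights, biases, x)
    # d(loss)/d(logits) = probs - one_hot(label)
    grad_logits = [p - (1.0 if k == label else 0.0) for k, p in enumerate(probs)]
    new_weights = [[w - lr * g * xi for w, xi in zip(row, x)]
                   for row, g in zip(weights, grad_logits)]
    new_biases = [b - lr * g for b, g in zip(biases, grad_logits)]
    return new_weights, new_biases


# Three classes, two input features, parameters initialized to zero.
weights = [[0.0, 0.0] for _ in range(3)]
biases = [0.0, 0.0, 0.0]
x, label = [1.0, 2.0], 1

loss_before = cross_entropy(forward(weights, biases, x), label)
weights, biases = sgd_step(weights, biases, x, label)
loss_after = cross_entropy(forward(weights, biases, x), label)
print(loss_before, loss_after)
```

With zero-initialized parameters the prediction is uniform over the three classes, so the initial loss equals log 3, and the single update lowers the loss on that example.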

2.1. Visualization with a Deconvnet

Understanding the operation of a convnet requires interpreting the feature activity in intermediate layers. We present a novel way to map these activities back to the input pixel space, showing what input pattern originally caused a given activation in the feature maps. We perform this mapping with a Deconvolutional Network (deconvnet) (Zeiler et al., 2011). A deconvnet can be thought of as a convnet model that uses the same components (filtering, pooling) but in reverse, so instead of mapping pixels to features it does the opposite. In (Zeiler et al., 2011), deconvnets were proposed as a way of performing unsupervised learning. Here, they are not used in any learning capacity, just as a probe of an already trained convnet.

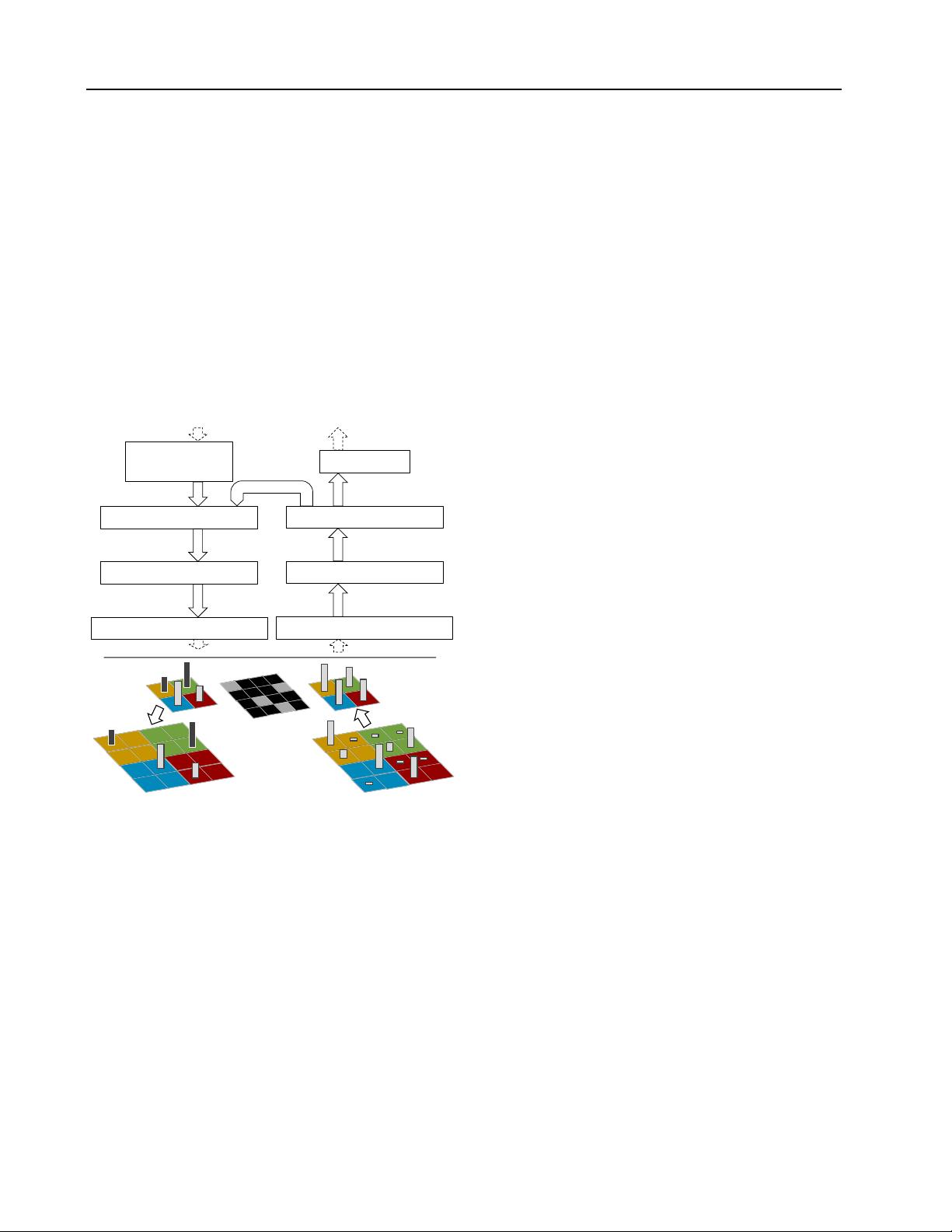

To examine a convnet, a deconvnet is attached to each of its layers, as illustrated in Fig. 1(top), providing a continuous path back to image pixels. To start, an input image is presented to the convnet and features are computed throughout the layers. To examine a given convnet activation, we set all other activations in the layer to zero and pass the feature maps as input to the attached deconvnet layer. Then we successively (i) unpool, (ii) rectify and (iii) filter to reconstruct the activity in the layer beneath that gave rise to the chosen activation. This is then repeated until input pixel space is reached.
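
The first step of this procedure, keeping one activation and zeroing all others in the layer, can be sketched as follows. This is a minimal illustration in plain Python: the layer is assumed to be stored as a list of 2D feature maps, and the function name and the chosen index are hypothetical.

```python
def isolate_activation(feature_maps, map_index, row, col):
    """Return a copy of a layer's feature maps with all but one activation set to zero."""
    isolated = [[[0.0 for _ in fmap_row] for fmap_row in fmap]
                for fmap in feature_maps]
    isolated[map_index][row][col] = feature_maps[map_index][row][col]
    return isolated


# A toy layer with two 2x2 feature maps (values chosen for illustration).
feature_maps = [
    [[0.5, 0.0], [1.2, 0.3]],
    [[0.0, 2.0], [0.7, 0.0]],
]

# Keep only the activation at position (0, 1) of feature map 1.
isolated = isolate_activation(feature_maps, map_index=1, row=0, col=1)
print(isolated)  # [[[0.0, 0.0], [0.0, 0.0]], [[0.0, 2.0], [0.0, 0.0]]]
```

The isolated maps are what would then be passed to the attached deconvnet layer; the original feature maps are left unmodified so that other activations can be examined afterwards.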

Unpooling: In the convnet, the max pooling operation is non-invertible; however, we can obtain an approximate inverse by recording the locations of the maxima within each pooling region in a set of switch variables. In the deconvnet, the unpooling operation uses these switches to place the reconstructions from the layer above into appropriate locations, preserving the structure of the stimulus. See Fig. 1(bottom) for an illustration of the procedure.
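
The switch mechanism can be sketched in plain Python. The code below is an illustration under assumptions, not the paper's implementation: it uses non-overlapping 2x2 pooling regions on a single feature map, breaks ties by keeping the first maximum in a region, and uses hypothetical function names.

```python
def max_pool_with_switches(fmap, size=2):
    """Max pool one feature map and record where each maximum came from."""
    pooled, switches = [], []
    for r in range(len(fmap) // size):
        pooled_row, switch_row = [], []
        for c in range(len(fmap[0]) // size):
            # Candidate (value, location) pairs inside this pooling region.
            window = [
                (fmap[r * size + i][c * size + j], (r * size + i, c * size + j))
                for i in range(size) for j in range(size)
            ]
            value, location = max(window, key=lambda pair: pair[0])
            pooled_row.append(value)
            switch_row.append(location)
        pooled.append(pooled_row)
        switches.append(switch_row)
    return pooled, switches


def unpool(pooled, switches, height, width):
    """Place each pooled value back at its recorded location; all other entries are zero."""
    out = [[0.0] * width for _ in range(height)]
    for pooled_row, switch_row in zip(pooled, switches):
        for value, (r, c) in zip(pooled_row, switch_row):
            out[r][c] = value
    return out


fmap = [
    [1.0, 5.0, 2.0, 0.0],
    [3.0, 4.0, 1.0, 6.0],
    [0.0, 2.0, 9.0, 1.0],
    [7.0, 1.0, 3.0, 2.0],
]

pooled, switches = max_pool_with_switches(fmap)
print(pooled)    # [[5.0, 6.0], [7.0, 9.0]]
print(switches)  # [[(0, 1), (1, 3)], [(3, 0), (2, 2)]]

restored = unpool(pooled, switches, height=4, width=4)
print(restored)
# [[0.0, 5.0, 0.0, 0.0], [0.0, 0.0, 0.0, 6.0], [0.0, 0.0, 9.0, 0.0], [7.0, 0.0, 0.0, 0.0]]
```

In the deconvnet the values passed to `unpool` would be reconstructions from the layer above rather than the pooled activations themselves; the example reuses the pooled values only to show that each one returns to the position of its original maximum.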

Rectification: The convnet uses relu non-linearities, which rectify the feature maps, ensuring that they are always non-negative. To obtain valid feature reconstructions at each layer (which should also be non-negative), we pass the reconstructed signal through a relu non-linearity.

Filtering: The convnet uses learned filters to convolve the feature maps from the previous layer. To
