Deeply learned face representations are sparse, selective, and robust

Yi Sun¹, Xiaogang Wang²,³, Xiaoou Tang¹,³

¹ Department of Information Engineering, The Chinese University of Hong Kong

² Department of Electronic Engineering, The Chinese University of Hong Kong

³ Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences

sy011@ie.cuhk.edu.hk xgwang@ee.cuhk.edu.hk xtang@ie.cuhk.edu.hk

Abstract

This paper designs a high-performance deep convolutional network (DeepID2+) for face recognition. It is learned with the identification-verification supervisory signal. By increasing the dimension of hidden representations and adding supervision to early convolutional layers, DeepID2+ achieves new state-of-the-art results on the LFW and YouTube Faces benchmarks.

Through empirical studies, we have discovered three properties of its deep neural activations that are critical for the high performance: sparsity, selectiveness, and robustness. (1) Neural activations are observed to be moderately sparse. Moderate sparsity maximizes the discriminative power of the deep net as well as the distance between images. Surprisingly, DeepID2+ can still achieve high recognition accuracy even after the neural responses are binarized. (2) Its neurons in higher layers are highly selective to identities and identity-related attributes. We can identify different subsets of neurons that are either constantly excited or constantly inhibited when different identities or attributes are present. Although DeepID2+ is not taught to distinguish attributes during training, it has implicitly learned such high-level concepts. (3) It is much more robust to occlusions, although occlusion patterns are not included in the training set.

1. Introduction

Face recognition achieved great progress thanks to ex-

tensive research effort devoted to this area [31, 33, 6, 24,

37, 27, 25, 23, 38]. While pursuing higher performance is

a central topic, understanding the mechanisms behind it is

equally important. When deep neural networks begin to ap-

proach human on challenging face benchmarks [27, 25, 23]

such as LFW [13], people are eager to know what has been

learned by these neurons and how such high performance is

achieved. In cognitive science, there are a lot of studies [30]

on analyzing the mechanisms of face processing of neurons

in visual cortex. Inspired by those works, we analyze the

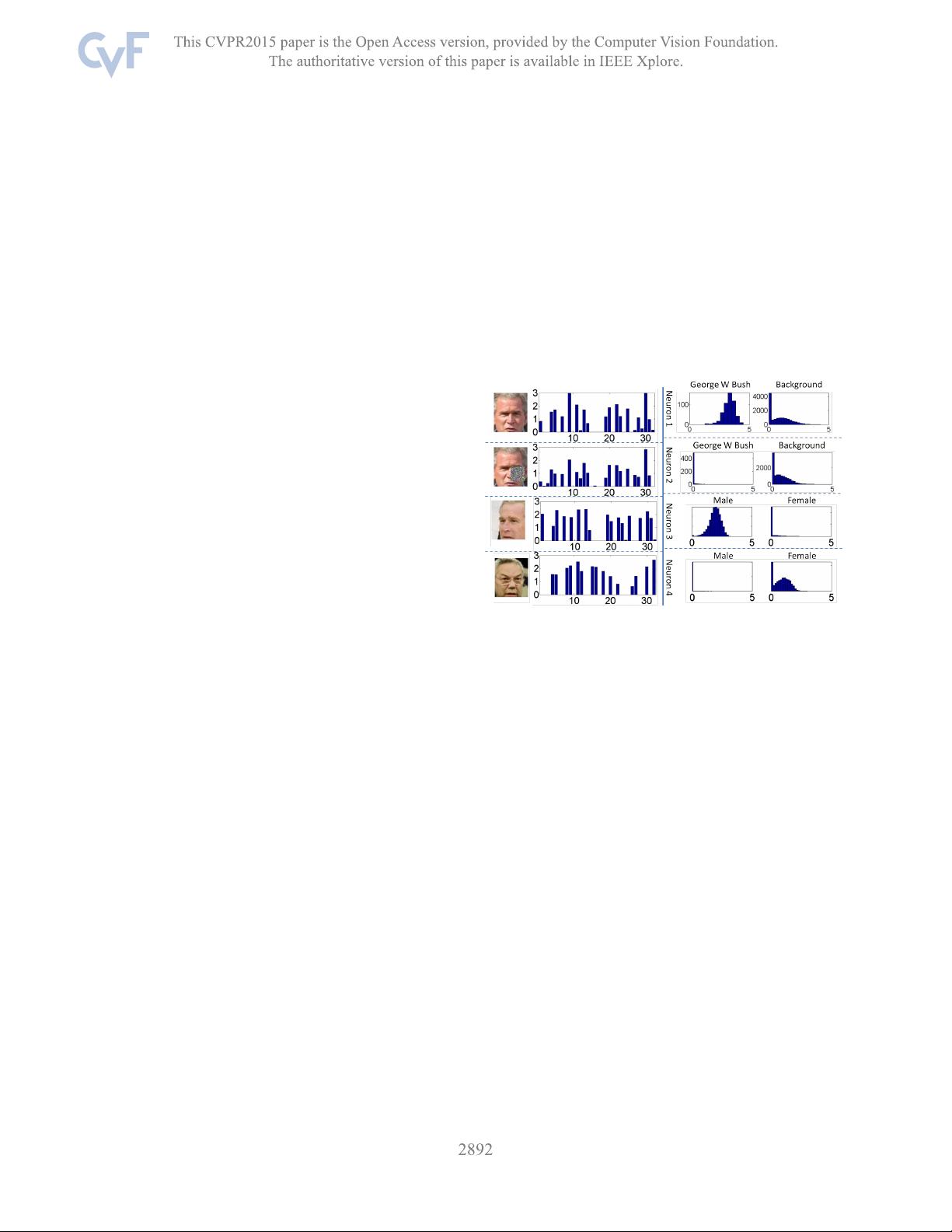

Figure 1: Left: neural responses of DeepID2+ on images

of Bush and Powell. The second face is partially occluded.

There are 512 neurons in the top hidden layer of DeepID2+.

We subsample 32 for illustration. Right: a few neurons

are selected to show their activation histograms over all

the LFW face images (as background), all the images

belonging to Bush, all the images with attribute Male, and

all the images with attribute Female. A neuron is generally

activated on about half of the face images. But it may

constantly have activations (or no activation) for all the

images belonging to a particular person or attribute. In this

sense, neurons are sparse, and selective to identities and

attributes.

behaviours of neurons in artificial neural networks in a

attempt to explain face recognition process in deep nets,

what information is encoded in neurons, and how robust

they are to corruptions.
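To make the notions of sparsity, selectiveness, and binarization used above more concrete, the following is a minimal Python sketch on synthetic data. It is not the DeepID2+ implementation: the activations are random rectified Gaussian values rather than real network outputs, the helper names (`binarize`, `image_sparsity`, `neuron_activation_rate`, `hamming_distance`) are hypothetical, the activation threshold of zero is an assumption, and neurons 7 and 11 are hand-set to mimic neurons that are constantly excited or inhibited for one toy "identity".

```python
import random

NUM_NEURONS = 512  # size of the top hidden layer, as stated in Figure 1


def binarize(activations, threshold=0.0):
    """Map each activation to 1 if it exceeds the threshold, else 0."""
    return [1 if a > threshold else 0 for a in activations]


def image_sparsity(activations, threshold=0.0):
    """Fraction of neurons that are activated on a single image."""
    bits = binarize(activations, threshold)
    return sum(bits) / len(bits)


def neuron_activation_rate(features, neuron, threshold=0.0):
    """Fraction of images on which one given neuron is activated."""
    active = sum(1 for f in features if f[neuron] > threshold)
    return active / len(features)


def hamming_distance(code_a, code_b):
    """Number of positions at which two binary codes differ."""
    return sum(x != y for x, y in zip(code_a, code_b))


# Synthetic stand-in for network outputs (assumption: rectified Gaussian noise).
rng = random.Random(0)


def toy_features(n_images):
    return [[max(0.0, rng.gauss(0.0, 1.0)) for _ in range(NUM_NEURONS)]
            for _ in range(n_images)]


background = toy_features(200)  # plays the role of "all face images"
identity = toy_features(20)     # plays the role of one person's images
for f in identity:
    f[7] = 1.0 + f[7]  # hand-set: neuron 7 is always excited for this identity
    f[11] = 0.0        # hand-set: neuron 11 is always inhibited for this identity

print("mean image sparsity:",
      sum(image_sparsity(f) for f in background) / len(background))
print("neuron 7 rate (background, identity):",
      neuron_activation_rate(background, 7),
      neuron_activation_rate(identity, 7))
print("neuron 11 rate (background, identity):",
      neuron_activation_rate(background, 11),
      neuron_activation_rate(identity, 11))
print("Hamming distance between two binarized images:",
      hamming_distance(binarize(background[0]), binarize(background[1])))
```

In this toy setting, a neuron whose activation rate is near one half on the background but exactly one or zero on a subset of images is the kind of neuron the text calls selective. The binarized codes keep only the activation pattern, which is the information the abstract reports to be sufficient for high recognition accuracy.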

Our study is based on a high-performance deep convolutional neural network (deep ConvNet [15, 16]), referred to as DeepID2+, proposed in this paper. It improves upon the state-of-the-art DeepID2 net [23] by increasing the dimension of hidden representations and adding supervision to early convolutional layers. The best single DeepID2+ net (taking both the original and horizontally flipped face images as input) achieves 98.70% verification accuracy on

