没有合适的资源?快使用搜索试试~ 我知道了~

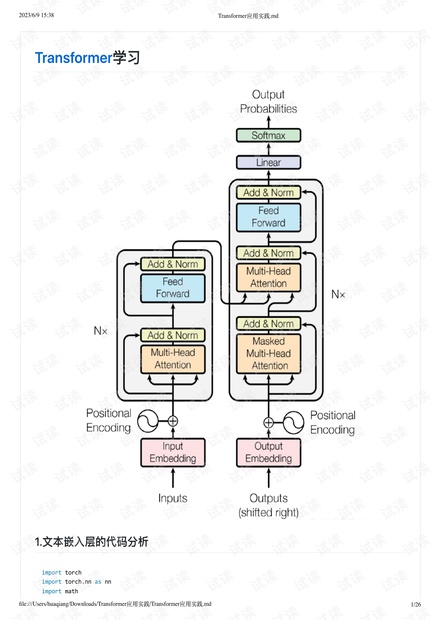

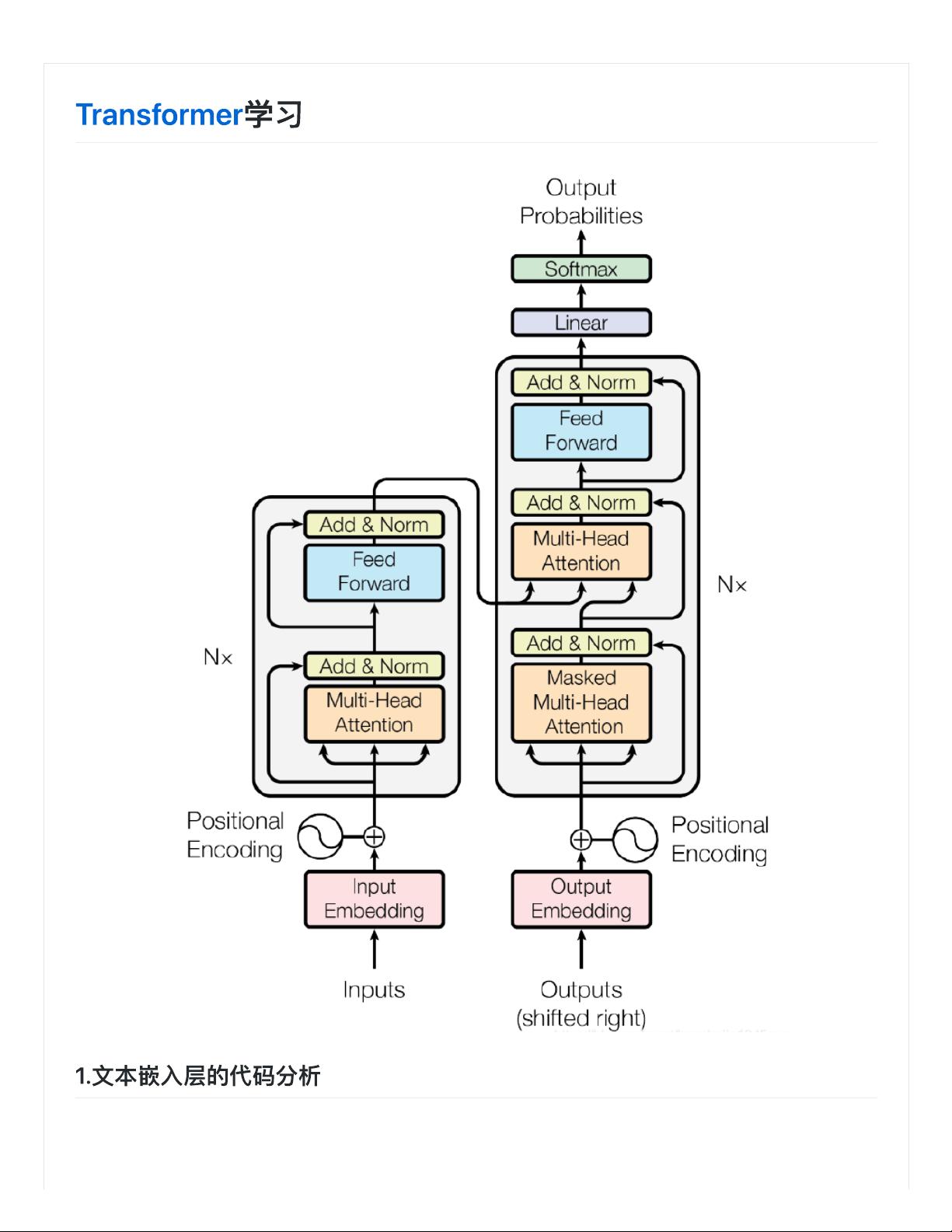

Transformer in Practice (Study Notes)

Uploaded: 2023-06-09 15:49:07. Format: PDF, 3.4 MB, 26 pages.

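A simple exercise in using Bilibili as a learning tool: I followed a training provider's hands-on Transformer tutorial and reproduced each step myself, which gave me a much clearer understanding of the Transformer. The final training and evaluation code raised an error that I could not resolve, which is a small regret. These notes collect the learning process for later reference.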


2023/6/9 15:38

Transformer应用实践.md

file:///Users/huaqiang/Downloads/Transformer应用实践/Transformer应用实践.md

1/26

    import math

    import numpy as np
    import torch
    import torch.nn as nn
    # Variable is deprecated since PyTorch 0.4 (a plain tensor behaves the same);
    # it is kept because the snippets below still call it.
    from torch.autograd import Variable


    class Embeddings(nn.Module):
        def __init__(self, d_model, vocab):
            # d_model: dimension of each embedding vector
            # vocab: vocabulary size
            super(Embeddings, self).__init__()
            self.lut = nn.Embedding(vocab, d_model)  # Embedding lookup table
            self.d_model = d_model

        def forward(self, x):
            # Look x up in lut, then scale the result by sqrt(d_model)
            return self.lut(x) * math.sqrt(self.d_model)

    # nn.Embedding demo
    embedding = nn.Embedding(10, 3)  # table of 10 entries, 3 dimensions each
    embedding(torch.LongTensor([[1,0,3,0],[5,0,7,0]]))

Output:

    tensor([[[ 0.1369, -1.1720, -0.3088],
             [-0.2342,  0.0257, -0.1409],
             [-2.0039,  0.6588,  1.1285],
             [-0.2342,  0.0257, -0.1409]],

            [[-0.7278, -0.5684,  0.8189],
             [-0.2342,  0.0257, -0.1409],
             [-1.5166,  0.1493,  0.2819],
             [-0.2342,  0.0257, -0.1409]]], grad_fn=<EmbeddingBackward>)

    # With padding_idx=0, index 0 maps to an all-zero vector
    embedding = nn.Embedding(10, 3, padding_idx=0)
    embedding(torch.LongTensor([[1,0,3,0],[5,0,7,0]]))

Output:

    tensor([[[-0.1809, -0.1365,  1.2656],
             [ 0.0000,  0.0000,  0.0000],
             [-0.4129,  0.9765,  1.2057],
             [ 0.0000,  0.0000,  0.0000]],

            [[ 1.8736, -1.4908, -0.4024],
             [ 0.0000,  0.0000,  0.0000],
             [ 0.4857,  0.1198, -0.8273],
             [ 0.0000,  0.0000,  0.0000]]], grad_fn=<EmbeddingBackward>)
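The two outputs above differ only in the rows for index 0. As a minimal sketch (the seed and the `sum().backward()` call are illustrative choices, not part of the original notes), the following checks that the `padding_idx` row is not only zero at initialisation but also receives no gradient, so it should stay zero during training:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # arbitrary seed, only for reproducibility
embedding = nn.Embedding(10, 3, padding_idx=0)

# The row for the padding index is initialised to zeros.
padding_row_is_zero = bool(torch.all(embedding.weight[0] == 0))

# Look up a batch that mixes real tokens with padding (index 0).
tokens = torch.LongTensor([[1, 0, 3, 0], [5, 0, 7, 0]])
out = embedding(tokens)
out.sum().backward()

# The padding row gets no gradient, so an optimizer step leaves it at zero.
padding_grad_is_zero = bool(torch.all(embedding.weight.grad[0] == 0))
# Rows for tokens that actually appeared do receive gradient.
token_grad_is_nonzero = bool(torch.any(embedding.weight.grad[1] != 0))

print(padding_row_is_zero, padding_grad_is_zero, token_grad_is_nonzero)
```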

    d_model = 512  # embedding dimension
    vocab = 1000   # vocabulary size
    x = Variable(torch.LongTensor([[100,2,421,508],[491,998,1,221]]))
    x

Output:

    tensor([[100,   2, 421, 508],
            [491, 998,   1, 221]])

    emb = Embeddings(d_model, vocab)
    embr = emb(x)
    embr.shape

Output:

    torch.Size([2, 4, 512])
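The shape check above does not show what the `sqrt(d_model)` factor does. The sketch below redefines the `Embeddings` class so that it runs on its own, then compares the scaled output with the raw lookup. The scaling itself comes from the original Transformer paper; the usual explanation, that it keeps embedding magnitudes comparable to the positional encoding added later, is an interpretation rather than something these notes state.

```python
import math

import torch
import torch.nn as nn


class Embeddings(nn.Module):
    """Same layer as in the notes, repeated so this snippet is self-contained."""

    def __init__(self, d_model, vocab):
        super().__init__()
        self.lut = nn.Embedding(vocab, d_model)
        self.d_model = d_model

    def forward(self, x):
        return self.lut(x) * math.sqrt(self.d_model)


torch.manual_seed(0)  # arbitrary seed, only for reproducibility
emb = Embeddings(d_model=512, vocab=1000)
x = torch.LongTensor([[100, 2, 421, 508], [491, 998, 1, 221]])

scaled = emb(x)    # what the layer returns
raw = emb.lut(x)   # plain nn.Embedding lookup, before scaling

# The spread of the values grows by exactly the scaling factor sqrt(512).
ratio = (scaled.std() / raw.std()).item()
print(scaled.shape, round(ratio, 3), round(math.sqrt(512), 3))
```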

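    class PositionalEncoding(nn.Module):
        def __init__(self, d_model, dropout, max_len=5000):
            # d_model: embedding dimension
            # dropout: probability of zeroing an element
            # max_len: maximum sentence length
            super(PositionalEncoding, self).__init__()
            self.dropout = nn.Dropout(p=dropout)  # e.g. 0.2

            # Positional-encoding matrix, initialised to zeros, shape max_len * d_model
            pe = torch.zeros(max_len, d_model)

            # Absolute positions 0 .. max_len-1 as a column vector, shape (max_len, 1)
            position = torch.arange(0, max_len).unsqueeze(1)

            # Per-dimension frequencies 1 / 10000^(2i / d_model), computed via exp/log
            div_term = torch.exp(torch.arange(0, d_model, 2) * -(math.log(10000.0) / d_model))
            pe[:, 0::2] = torch.sin(position * div_term)  # even dimensions
            pe[:, 1::2] = torch.cos(position * div_term)  # odd dimensions

            # Add a leading batch dimension: (1, max_len, d_model)
            pe = pe.unsqueeze(0)

            # Register as a buffer: saved with the model but not updated by the optimizer
            self.register_buffer('pe', pe)

        def forward(self, x):
            # x: embedded input sequence, shape (batch, seq_len, d_model)
            # max_len defaults to 5000, far longer than most inputs, so slice pe to seq_len
            # Buffers never require grad, so the old Variable(..., requires_grad=False) wrapper is unnecessary
            x = x + self.pe[:, :x.size(1)]
            return self.dropout(x)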
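The class above implements the sinusoidal encoding from the original Transformer paper: `PE(pos, 2i) = sin(pos / 10000^(2i/d_model))` and `PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model))`. The `div_term` line computes `1 / 10000^(2i/d_model)` through `exp` and `log` rather than a direct power. The sketch below, using small illustrative sizes (`d_model = 8`, `max_len = 5`, both chosen here for readability), checks that the two forms agree and shows the broadcast shapes involved:

```python
import math

import torch

d_model, max_len = 8, 5  # small illustrative sizes, not the ones used in the notes

position = torch.arange(0, max_len, dtype=torch.float32).unsqueeze(1)  # (max_len, 1)
two_i = torch.arange(0, d_model, 2, dtype=torch.float32)               # 0, 2, 4, 6

# Form used in the class: exp(2i * -(ln 10000 / d_model))
div_term_exp = torch.exp(two_i * -(math.log(10000.0) / d_model))
# Direct form from the formula: 1 / 10000^(2i / d_model)
div_term_pow = 1.0 / torch.pow(torch.tensor(10000.0), two_i / d_model)

# (max_len, 1) * (d_model/2,) broadcasts to (max_len, d_model/2)
angles = position * div_term_exp

pe = torch.zeros(max_len, d_model)
pe[:, 0::2] = torch.sin(angles)  # even columns
pe[:, 1::2] = torch.cos(angles)  # odd columns

print(div_term_exp)
print(angles.shape, pe.shape)
```

At position 0 every angle is 0, so the even columns are `sin(0) = 0` and the odd columns are `cos(0) = 1`, which is a quick sanity check on the interleaving.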

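    # unsqueeze / squeeze demo
    x = torch.tensor([1,2,3,4])
    torch.unsqueeze(x, 0)  # adds a dimension at index 0: shape (4,) -> (1, 4)
    torch.squeeze(x, 0)    # removes dimension 0 only if its size is 1; here it is 4, so x is unchanged

Output:

    tensor([1, 2, 3, 4])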
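Because only the last expression is echoed, the output above hides what `unsqueeze` returns. A small sketch that prints the shapes makes the behaviour explicit (the variable names `row`, `col`, `unchanged` and `restored` are illustrative):

```python
import torch

x = torch.tensor([1, 2, 3, 4])         # shape (4,)

row = torch.unsqueeze(x, 0)            # new leading dimension: shape (1, 4)
col = torch.unsqueeze(x, 1)            # new trailing dimension: shape (4, 1)
unchanged = torch.squeeze(x, 0)        # dim 0 has size 4, so nothing is removed
restored = torch.squeeze(row, 0)       # dim 0 has size 1, so it is removed again

print(row.shape, col.shape, unchanged.shape, restored.shape)
```

This is the same operation `PositionalEncoding` relies on twice: `unsqueeze(1)` turns the positions into a column for broadcasting, and `unsqueeze(0)` adds the batch dimension to `pe`.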

    dropout = 0.1
    max_len = 60

    x = embr  # output of the Embeddings layer
    x.shape

Output:

    torch.Size([2, 4, 512])

    pe = PositionalEncoding(d_model, dropout, max_len)
    pe_result = pe(x)
    pe_result.shape

Output:

    torch.Size([2, 4, 512])
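The shape stays the same, but the values depend on whether the module is in training or evaluation mode. As a hedged sketch (the class is repeated so the snippet runs alone, and random input stands in for `embr`), the following shows that in `eval()` mode the output is exactly `x + pe`, while in `train()` mode roughly 10% of the elements are zeroed and the rest are scaled by `1 / (1 - p)`. It also confirms that `pe` is a buffer and not a trainable parameter.

```python
import math

import torch
import torch.nn as nn


class PositionalEncoding(nn.Module):
    """Same layer as in the notes, repeated so this snippet is self-contained."""

    def __init__(self, d_model, dropout, max_len=5000):
        super().__init__()
        self.dropout = nn.Dropout(p=dropout)
        pe = torch.zeros(max_len, d_model)
        position = torch.arange(0, max_len, dtype=torch.float32).unsqueeze(1)
        div_term = torch.exp(
            torch.arange(0, d_model, 2, dtype=torch.float32)
            * -(math.log(10000.0) / d_model)
        )
        pe[:, 0::2] = torch.sin(position * div_term)
        pe[:, 1::2] = torch.cos(position * div_term)
        self.register_buffer("pe", pe.unsqueeze(0))

    def forward(self, x):
        return self.dropout(x + self.pe[:, : x.size(1)])


torch.manual_seed(0)                  # arbitrary seed, only for reproducibility
x = torch.randn(2, 4, 512)            # stand-in for the embedding output
pe = PositionalEncoding(d_model=512, dropout=0.1, max_len=60)
expected = x + pe.pe[:, :4]           # encoding sliced to the sequence length

pe.eval()                             # dropout disabled
eval_out = pe(x)

pe.train()                            # dropout enabled
train_out = pe(x)
kept = train_out != 0                 # elements that survived dropout
dropped_fraction = 1.0 - kept.float().mean().item()

num_parameters = len(list(pe.parameters()))   # buffers are not parameters
in_state_dict = "pe" in pe.state_dict()       # but they are saved with the model

print(round(dropped_fraction, 3), num_parameters, in_state_dict)
```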

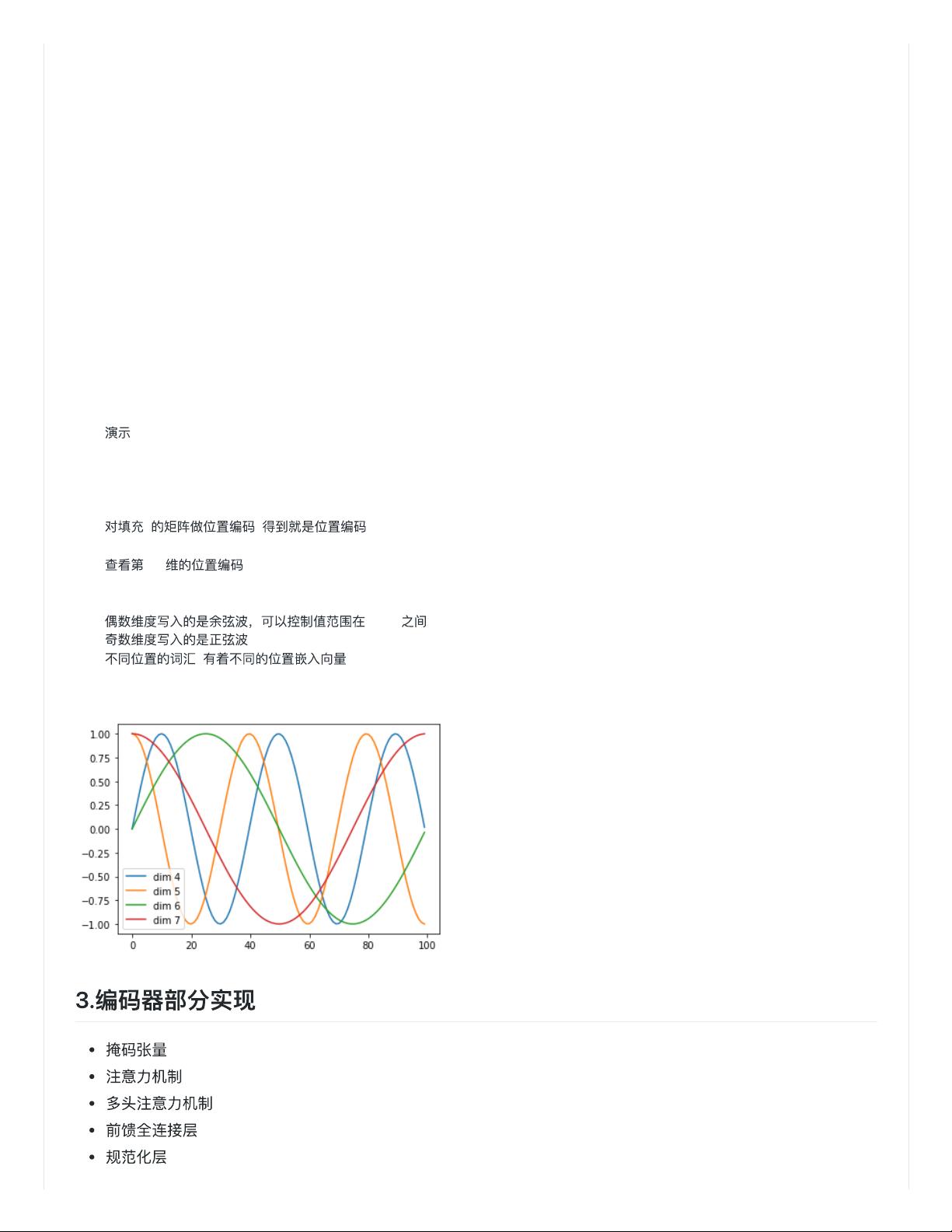

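    # Plot how individual encoding dimensions vary with position
    import matplotlib.pyplot as plt
    import numpy as np

    pe = PositionalEncoding(20, 0.0)
    # Feed an all-zero tensor so the output is the positional encoding itself
    y = pe(Variable(torch.zeros(2,100,20)))
    # Plot dimensions 4 to 7
    plt.plot(np.arange(100), y[0,:,4:8].data.numpy())
    plt.legend(["dim %d"%p for p in range(4,8)])
    # Sine and cosine keep every value within -1 to +1
    plt.show()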


Uploader: baby_hua
